Difference between revisions of "S16: Ahava"

Proj user10 (talk | contribs) (→Connection Matrix) |

Proj user10 (talk | contribs) (→Compute Module) |


==== Car Controller ==== | ==== Car Controller ==== | ||

==== Compute Module ==== | ==== Compute Module ==== | ||

| + | The Compute Module provides a dual camera interface that is suitable for both mono and stereo vision based image processing. Combined with HDMI display support, this makes it a suitable platform for prototyping simple image-based applications. The underlying architecture of the Raspberry Pi also has a VPU which can be used for relatively faster computations. The overall form factor of the platform and the camera board is also small and suitable for mounting on an RC car like the one used in this project. | ||

| + | |||

| + | The downside that we faced after selecting this platform was that there is not enough documentation or support to fully exploit the features of the Compute Module. According to the information available online, using the VPU requires rewriting the imaging algorithms in assembly code. This was not possible within the time frame available for this project. Moreover, we intended to use OpenCV for building our applications. In the end, we had an OpenCV application running on a single-core CPU, which was not responsive enough for a stereo based application. Our final implementation uses a single-camera object-tracking algorithm built on OpenCV. | ||

| + | |||

| + | ===== Platform Setup ===== | ||

| + | Setting up the platform required building the necessary kernel and OS utilities, library packages, the OpenCV library and the root file-system. We used the Buildroot package to configure the software required by the platform. Many dependencies had to be resolved before the board could finally be brought up for OpenCV application development. | ||

| + | |||

| + | Buildroot provides the framework to download and cross-compile all the packages that are selected in the configuration file. The toolchain for the platform is also built on the host system by Buildroot. We saved our Buildroot configuration in a file named “compute_module_defconfig”. | ||

| + | |||

| + | Most of the board bring-up time was spent enabling OpenCV support for the platform, because many dependencies had to be resolved. OpenCV requires the support of libraries such as the X.Org window system, the GTK2 GUI library and OpenGL. | ||

| + | |||

| + | The basic steps to build the system software for the Compute Module are given in the link below. | ||

| + | |||

| + | https://github.com/raspberrypi/tools/tree/master/usbboot | ||

| + | |||

| + | The first requirement is to build an application, “rpiboot”, that is used to flash the onboard eMMC flash of the compute module (Refer to the link above for steps to build the rpiboot application). This application program interacts with the bootloader that is already present in the compute module and registers the eMMC flash as a USB mass storage device on the host system. This way, we can copy programs and files to/from the file system already present on the target platform and also flash the entire eMMC if necessary. | ||

| + | |||

| + | Once we are able to interact with the eMMC flash onboard the compute module, we can build the required image using buildroot. Buildroot can be cloned from the repository below. | ||

| + | |||

| + | git.buildroot.net/buildroot | ||

| + | |||

| + | Run “make menuconfig” in the buildroot base directory to perform a menu driven configuration of the system software and supporting packages. After saving and exiting ‘menuconfig’, the saved configuration can be found in the file called “.config” in the base directory. Save this file under a desired name in “{BUILDROOT_BASE}/configs” directory. We saved ours as “compute_module_defconfig”. The next time we build using buildroot, we can just run “make compute_module_defconfig” to restore the saved configuration. | ||

| + | |||

| + | As stated above, we ran into dependency issues with respect to OpenCV support. We encountered errors such as “GTK2: Unable to open display”, “Xinit failed”, etc. The link shown below was very helpful in resolving these issues. | ||

| + | |||

| + | https://agentoss.wordpress.com/2011/03/06/building-a-tiny-x-org-linux-system-using-buildroot | ||

| + | |||

| + | We were able to bring up “twm” (Tab Window Manager) on the platform and run some sample OpenCV applications. | ||

| + | |||

| + | The next task was to enable camera and display support on the compute module. By default, the camera is disabled and the HDMI interface is not configured to detect a hot-plug. We referred to the link shown below to enable HDMI hot-plug through the config.txt file in the boot partition. | ||

| + | |||

| + | https://www.raspberrypi.org/documentation/configuration/config-txt.md | ||

| + | |||

| + | The difficulty in getting the camera to work was that a large amount of documentation online pointed at installing the Raspbian OS on the Pi and running apt-get or other Raspberry Pi applications to enable the camera interface. The existing Raspbian image does not fit into the Compute Module eMMC flash. The camera interfaces are disabled by default. To enable them, config.txt requires the 'start_x' variable to be set to '1'. This causes the BCM bootloader to look up start_x.elf and fixup_x.dat to actually enable the camera interfaces. In brief, this causes the loader to initialize the GPIOs, shown in the hardware setup section, to function as camera pins. This step also requires a dt-blob.bin, a compiled device tree that sets the necessary pin configurations. If this file is missing, the loader will use a default dt-blob.bin built into the 'start.elf' binary (please note this is not the same as start_x.elf). | ||

| + | We obtained this file by running '''''“sudo wget http://goo.gl/lozvZB -O /boot/dt-blob.bin”.''''' Once the CM boots up, executing “modprobe bcm2835-v4l2” on the module loads the V4L2 camera driver. OpenCV uses this driver to interact with the onboard camera interface. | ||

| + | |||

| + | Once Buildroot has produced the image, it needs to be flashed to the Compute Module eMMC. A step-by-step procedure for this is given in the link below. | ||

| + | |||

| + | https://www.raspberrypi.org/documentation/hardware/computemodule/cm-emmc-flashing.md | ||

| + | |||

| + | Note that the onboard jumper positions need to be changed for the CM to behave as a USB slave device. | ||

| + | |||

| + | <Jumper position image> | ||

| + | |||

| + | ===== Object Tracking using OpenCV ===== | ||

| + | Tracking of an object is made possible by applying image processing and image analysis techniques to a live video feed from a camera mounted on the VisionCar. Relying on images as the primary ‘sensing’ element allows us to act upon real objects in a very intuitive way, similar to how we humans do. We use OpenCV, an open source real-time computer vision library, to process live video frames. The library provides definitions of datatypes such as matrices and methods to work on them. In addition, it provides optimized implementations of complex image processing routines. We have designed and implemented an application that performs object tracking using features provided by the OpenCV library. | ||

| + | |||

| + | An image is a 2D array of pixels. In the case of a coloured image, each pixel is represented by 3 components - red, green and blue. Apart from the RGB color scheme, the HSV or Hue, Saturation and Value scheme is widely used in image processing. Separating the color component from the intensity can help in obtaining more information from an image. | ||

| + | |||

| + | The algorithm for object tracking is as shown in Fig<>. The application performs tracking based on the color information of the object. On reading an image frame from the camera, we obtain an RGB frame. This is converted to HSV color format using the ‘cvtColor’ function provided by OpenCV. A range filter is applied to the resulting HSV frame to obtain a thresholded image; the HSV threshold values have to be configured for each object that is to be tracked. The thresholded image is then subjected to morphological operations such as dilate and erode to filter noise, isolate individual elements and join disparate elements. This yields a segmented image in which the target object stands out. Next, all the contours/shapes in the filtered image are determined using the ‘findContours’ OpenCV function, which returns a list of the contours present in the filtered image. We use ‘moments’ to determine the area and location of the object. This information is also sent to the Car Controller through the Communication Interface. If the area of the object is not within the specified threshold, we consider the object to be absent. This sequence of operations is carried out on every frame, thereby continuously tracking the target object. | ||
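
The thresholding and moment steps described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the project's application code: it uses the standard-library `colorsys` module in place of OpenCV's ‘cvtColor’, so hue, saturation and value are in the range 0 to 1 rather than OpenCV's 8-bit ranges. It also skips the morphological and contour steps and does not handle hue wrap-around. The function names `threshold_hsv`, `area_and_centroid` and `locate_object` are hypothetical.

```python
import colorsys


def threshold_hsv(rgb_image, lower, upper):
    """Return a binary mask: 1 where a pixel's (h, s, v) lies within [lower, upper]."""
    mask = []
    for row in rgb_image:
        mask_row = []
        for r, g, b in row:
            hsv = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            inside = all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


def area_and_centroid(mask):
    """Compute the zeroth and first image moments of a binary mask.

    m00 is the area in pixels; (m10 / m00, m01 / m00) is the centroid (x, y).
    """
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            m00 += value
            m10 += x * value
            m01 += y * value
    if m00 == 0:
        return 0, None
    return m00, (m10 / m00, m01 / m00)


def locate_object(mask, min_area, max_area):
    """Return (area, centroid), or None when the area is outside the accepted range."""
    area, centroid = area_and_centroid(mask)
    if not (min_area <= area <= max_area):
        return None  # object considered absent
    return area, centroid
```

For example, a 4x4 frame with a 2x2 red block in the middle, thresholded with `lower=(0.0, 0.5, 0.5)` and `upper=(0.1, 1.0, 1.0)`, gives an area of 4 pixels and a centroid of (1.5, 1.5).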

| + | |||

| + | The application was executed and tested in a desktop environment with a webcam before it was ported to the Compute Module. | ||

| + | |||

| + | ===== Communication Interface ===== | ||

| + | The communication interface of the Compute Module is a simple UART. The CM receives data regarding HSV thresholding values and light sensor readings from the LPC module. The position of and distance to the tracked object are sent by the CM back to the Car Controller. The application on the CM has two threads. One is the main image processing thread. The UART write is performed by this thread once per frame, as and when the position of the target object is computed. The second thread performs the UART read to adjust the HSV threshold values. This thread performs blocking read calls and remains asleep as long as there is no data available from the LPC module. | ||

| + | The data format which is transmitted/received in the CM is explained below. The transmitted data is a simple 4 byte structure: | ||

| + | |||

| + | /* Copy Tx structure here */ | ||

| + | |||

| + | The horizontal/lateral position of the target object in the frame is divided into multiple zones from extreme left to extreme right. This field is labelled ‘lZone’ in the structure. The depth or distance to the object which is based on the area of the object in the frame is also divided into multiple zones from ‘near’ to ‘far’. This zone information is encoded in the ‘dZone’ field of the data structure. | ||

| + | The possible values for ‘lZone’ and ‘dZone’, in the form of enumerations, are shown below: | ||

| + | |||

| + | /* Copy the Enum for zone and depth */ | ||
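
The project's actual enumerations are not reproduced on this page. Purely as an illustration of the zoning idea, the sketch below maps a centroid x-coordinate to a lateral zone and an object area to a depth zone. Everything in it is an assumption: the zone names, the number of zones, the equal-width lateral bands and the two area limits are hypothetical and are not taken from the project code.

```python
from enum import IntEnum


class LateralZone(IntEnum):
    """Hypothetical lateral zones, from extreme left to extreme right."""
    FAR_LEFT = 0
    LEFT = 1
    CENTER = 2
    RIGHT = 3
    FAR_RIGHT = 4


class DepthZone(IntEnum):
    """Hypothetical depth zones, from near to far."""
    NEAR = 0
    MID = 1
    FAR = 2


def lateral_zone(centroid_x, frame_width):
    """Split the frame into equal-width vertical bands and pick the band holding x."""
    bands = len(LateralZone)
    index = int(centroid_x * bands / frame_width)
    return LateralZone(min(max(index, 0), bands - 1))


def depth_zone(area, near_area, far_area):
    """A larger apparent area is treated as a closer object."""
    if area >= near_area:
        return DepthZone.NEAR
    if area <= far_area:
        return DepthZone.FAR
    return DepthZone.MID
```

With a 640-pixel-wide frame, a centroid at x = 319 falls in the centre band, while x = 0 and x = 639 fall in the extreme left and extreme right bands.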

| + | |||

| + | The data format received by the compute module is as below: | ||

| + | |||

| + | /* Copy Rx structure here */ | ||

| + | |||

| + | The values for the field ‘<command>’ are enumerated below. This value is sent from the Bluetooth module interfaced to the LPC controller. Each command increments or decrements its corresponding Hue, Saturation or Value threshold. The “StoreFile” command stores the current threshold values to a file. The “LoadFile” command restores the threshold values from the file. | ||

| + | |||

| + | /* Copy enum for commands */ | ||
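
The command enumeration itself is not reproduced on this page. As a rough sketch of how such commands could be applied, the example below keeps one threshold per channel and handles increment, decrement, store and load. The command names and values, the single-threshold-per-channel simplification, the JSON file format and the function name `apply_command` are all assumptions made for illustration. The clamping limits follow OpenCV's 8-bit HSV ranges (hue 0 to 179, saturation and value 0 to 255).

```python
import json
from enum import IntEnum


class Command(IntEnum):
    """Hypothetical command codes; the real values are not given in this document."""
    HUE_INC = 0
    HUE_DEC = 1
    SAT_INC = 2
    SAT_DEC = 3
    VAL_INC = 4
    VAL_DEC = 5
    STORE_FILE = 6
    LOAD_FILE = 7


# Upper limits of OpenCV's 8-bit HSV representation.
LIMITS = {"hue": 179, "sat": 255, "val": 255}

# Which channel each adjust command touches, and in which direction.
STEPS = {
    Command.HUE_INC: ("hue", +1), Command.HUE_DEC: ("hue", -1),
    Command.SAT_INC: ("sat", +1), Command.SAT_DEC: ("sat", -1),
    Command.VAL_INC: ("val", +1), Command.VAL_DEC: ("val", -1),
}


def apply_command(thresholds, command, path):
    """Return the threshold dict that results from applying one command."""
    command = Command(command)
    if command == Command.STORE_FILE:
        with open(path, "w") as handle:
            json.dump(thresholds, handle)
        return thresholds
    if command == Command.LOAD_FILE:
        with open(path) as handle:
            return json.load(handle)
    channel, step = STEPS[command]
    updated = dict(thresholds)
    updated[channel] = max(0, min(LIMITS[channel], updated[channel] + step))
    return updated
```

Returning a new dictionary instead of mutating the input keeps each update atomic from the caller's point of view, which is convenient when another thread reads the thresholds once per frame.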

| + | |||

| + | The flowchart for the UART communication control is shown below. | ||

| + | |||

| + | <UART threading flow chart here> | ||
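
The two-thread arrangement described in this section can be sketched as follows. This is a minimal illustration, not the project's code: an `os.pipe()` stands in for the UART so that the example runs without hardware, and the one-byte `+` / `-` protocol, the `Thresholds` class and the function names are hypothetical. The point it shows is that the reader thread sleeps inside a blocking read until data arrives, while the shared threshold is protected by a lock so that the image processing thread can read it safely once per frame.

```python
import os
import threading


class Thresholds:
    """Hue threshold shared between the reader thread and the processing thread."""

    def __init__(self, hue=90):
        self._lock = threading.Lock()
        self._hue = hue

    def adjust_hue(self, step):
        with self._lock:
            self._hue = max(0, min(179, self._hue + step))

    def hue(self):
        with self._lock:
            return self._hue


def reader_thread(fd, thresholds):
    """Block on the descriptor and apply each received byte as a command."""
    while True:
        byte = os.read(fd, 1)  # sleeps here until a byte is available
        if not byte:           # empty read: the write end was closed
            return
        if byte == b"+":
            thresholds.adjust_hue(+1)
        elif byte == b"-":
            thresholds.adjust_hue(-1)


def run_demo():
    """Feed three command bytes through a pipe and return the resulting hue."""
    read_fd, write_fd = os.pipe()
    thresholds = Thresholds(hue=90)
    worker = threading.Thread(target=reader_thread, args=(read_fd, thresholds))
    worker.start()
    os.write(write_fd, b"++-")  # stands in for bytes arriving from the LPC module
    os.close(write_fd)
    worker.join()
    os.close(read_fd)
    return thresholds.hue()
```

In the real application the main thread would call `thresholds.hue()` at the start of each frame and perform its UART write after the object position has been computed.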

| + | |||

| + | ===== Flaws of Current Algorithm ===== | ||

| + | The current algorithm has its own set of disadvantages. The first disadvantage is that the algorithm relies on area to compute distance to the target object. Any small amount of noise can easily mislead the algorithm to track some source of noise in the image. A partial solution to this problem could be to utilize the light sensor readings and modify the threshold values to eliminate noise under varying lighting conditions. This might still be insufficient as the object itself may move from one lighting condition to another. | ||

| + | |||

| + | Stereo vision can add depth as an extra dimension for thresholding the image. Noise can easily be eliminated by using distance as a threshold value. But this requires good computation power which is not available in the current platform. | ||

== '''Integration & Testing''' == | == '''Integration & Testing''' == | ||

Revision as of 17:01, 24 May 2016

Contents

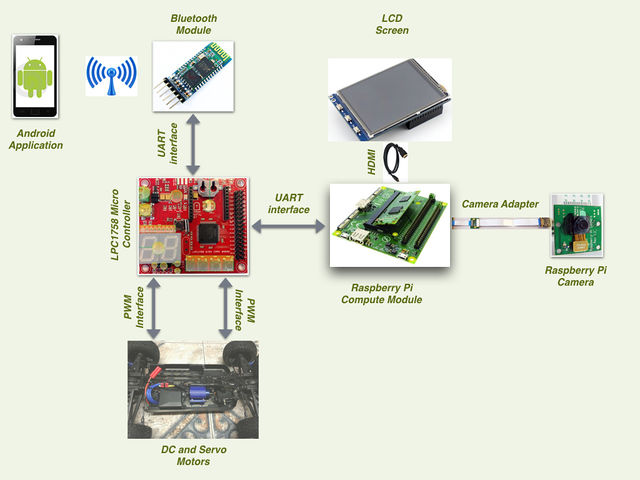

VisionCar

This project aims at tracking a known object from a vehicle and following the target at a pre-fixed distance. The RC car is mounted with a camera which is interfaced to a Raspberry Pi Compute Module. The Compute Module performs the required image processing using OpenCV and provides relevant data to the Car Controller for driving. An Android application was developed to allow a user to select an object by adjusting the HSV filter thresholds. These values are then used by the imaging application to track the desired object.

Objectives & Introduction


Team Members & Responsibilities

- Aditya Devaguptapu

- Ajai Krishna Velayutham

- Akshay Vijaykumar

- Hemanth Konanur Nagendra

- Vishwanath Balakuntla Ramesh

Schedule

| Week# | Date | Task | Actual | Status |

|---|---|---|---|---|

| 1 | 3/27/2016 | | | Completed |

| 2 | 4/03/2016 | | | Completed |

| 4 | 4/20/2016 | | | Completed |

| 5 | 4/27/2016 | | Completed | |

| 6 | 5/04/2016 | | Completed | |

| 7 | 5/11/2016 | | Completed | |

| 8 | 5/18/2016 | | | Completed |

Parts List & Cost

| Sl No | Item | Cost |

|---|---|---|

| 1 | RC Car | $188 |

| 2 | Remote and Charger | $48 |

| 3 | SJOne Board | $80 |

| 4 | Raspberry Pi Compute Module | $122 |

| 5 | Raspberry Pi Camera | $70 |

| 6 | Raspberry Pi Camera Adapter | $28 |

| 7 | LCD Display | $40 |

| 8 | General Purpose PCB | $10 |

| 9 | Accessories | |

| 10 | Total | |

Design & Implementation

Hardware Design

The hardware design for VisionCar involves using an SJOne board, a Raspberry Pi Compute Module and a Bluetooth transceiver, as described in detail in the following sections. Information about the pins used for interfacing the boards and about their power sources is provided.

System Architecture


Power Distribution Unit

Power distribution is one of the most important aspects in the development of such an embedded system. VisionCar has 6 individual modules that require power supplies of various voltages for their operation, as shown in the table below.

| Module | Voltage |

|---|---|

| SJOne Board | 3.3V |

| Raspberry Pi Compute Module | 5.0V |

| Servo Motor | 3.3V |

| DC Motor | 7.0V |

| Bluetooth Module | 3.6V - 6V |

| LCD Display | 5.0V |

As most of the voltage requirements lie in the 3.3V to 5V range, we made use of the SparkFun Breadboard Power Supply (PRT-00114). It is a simple breadboard power supply kit that takes power from a DC input and outputs a selectable 5V or 3.3V regulated voltage. In this project, the input to the PRT-00114 is provided by a 7V DC LiPo rechargeable battery.

<Image of Breadboard PowerSupply>

The schematic of the power supply design is as shown in the diagram below. It has a switch to configure the output voltage to either 3.3V or 5V.

<Breadboard Schematic>

For components requiring a 7V supply, a direct connection was provided from the battery. Additionally, suitable power banks were used to power these modules as and when required.

Connection Matrix

This is a general purpose PCB which is used to mount the external wiring used in our system. The prototype PCB houses the following:

- Power Supply (PRT-00114)

- Vcc and Ground signals.

- PWM control signals.

- UART interconnections.

- Bluetooth module’s interconnections.

It is an essential component of our design, as it keeps the VisionCar's wiring neat and makes connections a lot easier.

Car Control

Motor Interface

VisionCar uses a DC motor and a servo motor to move the car around. These motors were interfaced to the Car Controller using the pins described below and were controlled using PWM signals.

Servo Motor Interface

The VisionCar has an inbuilt configurable servo motor which is driven by PWM. The power required for the servo motor operation is provided by the rechargeable LiPo battery. The servo motor requires three connections: 'VCC', 'GND' and 'PWM'. The pulse width of the PWM signal sets the servo position within its allowed range of angles. The pin connections to the Car Controller are as shown in the table below.