S16: Robolamp

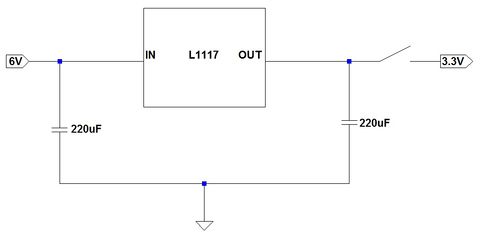

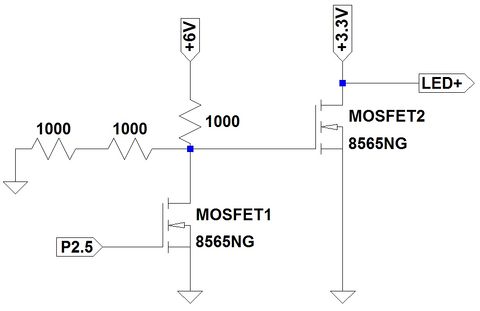

Latest revision as of 20:11, 26 May 2016


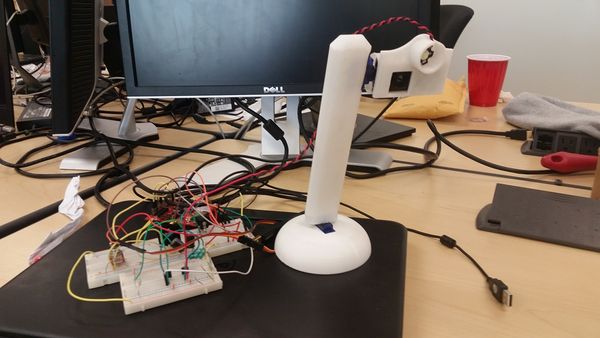

Robolamp: Autonomous Laptop Lamp

Abstract

Robolamp is an autonomous lamp that follows the movement of a user-defined object using OpenCV. For our project, we designated a specific mechanical pencil as our tracking object. Using two micro servo motors for the 'x' and 'y' axes of rotation, along with a uniquely designed lamp, Robolamp tracks the user's writing movement while casting a concentrated light on the surrounding area.

Objectives & Introduction

- Follow movement of the user-selected object (mechanical pencil)

- Drive two micro servo motors from pixel data supplied by an external program

- External program should support color tracking via OpenCV libraries

- The LED should have a voltage-regulated circuit driven by an external power supply

- The LED output will be determined by the ambient light level

- The lamp shall be user-created and 3D printed

Team Members & Responsibilities

- Brandon Zhen
  - Transfer designs for lamp parts into 3D-printable models
  - Write code for the motor movement based on CV data
  - Write code to control the LED based on light sensor input
- Dustin Phou
  - Order parts
  - Design circuit to externally power motor
  - Design circuit to control LED brightness
- James Ogden
  - Create an OpenCV program that will track pencil movement
  - Design the components used to create the physical lamp

Schedule

| Week# | Date | Task | Actual |

|---|---|---|---|

| 1 | 4/4 | Obtain approval for the project, research and order necessary parts, make rough design of system | Approval obtained, research and design still in progress |

| 2 | 4/11 | Research software for designing the tracking system (OpenCV), write a simple program to track an object to test functionality and how data is generated | Built OpenCV and used a sample program to track a marker using the color of its cap |

| 3 | 4/18 | Write an OpenCV program to track the movement of a pencil across a desk | The program is able to recognize a pencil and track it, but needs to be improved |

| 4 | 4/25 | Test servo motors by connecting their pins to the SJOne board to determine how they can be controlled | Through testing found that the PWM duty cycles that correspond to -90° and +90° are 2.5% and 12.5%, respectively. Used this information to write a program to control the rotation of a motor based on coordinate data received from OpenCV. |

| 5 | 5/2 | Construct the lamp using a combination of motors and 3-D printed lamp components (base, stand, arm) | Design of base completed |

| 6 | 5/9 | Functionally verify lamp's movements and bugfix tracking algorithm | Design of arm completed, verified connectivity of components |

| 7 | 5/16 | Finalize project and demo | Thorough testing and debugging of servo smoothing and tracking. Project report and wiki-page completed. |

Parts List & Cost

| Item # | Part # | Description | Amount | Total Cost |

|---|---|---|---|---|

| 1 | SG92R | Micro Servo Motor | 2 | $11.90 |

| 2 | LD1117 | 3.3V 800mA Linear Voltage Regulator | 1 | $1.25 |

| 3 | Adafruit 977 | TO-220 Clip-On Heatsink | 1 | $0.75 |

| 4 | Adafruit 518 | 1 Watt Heatsink Mounted LED | 1 | $3.95 |

| 5 | 3-050504M | Aluminum SMT Heat Sink 0.5" x 0.5" | 1 | $2.75 |

| 6 | 3M 8810 | Heat Sink Thermal Tape 25mm x 25mm | 1 | $0.95 |

| 7 | n/a | SJOne Board | 1 | $80.00 |

| 8 | n/a | 6V Wall Mount A/C Adapter | 1 | $8.95 |

| 9 | n/a | 3D Printed Base | 1 | Free |

| 10 | n/a | 3D Printed Arm | 1 | Free |

| 11 | n/a | 3D Printed Head | 1 | Free |

| 12 | n/a | Webcam | 1 | Free |

Design & Implementation

Hardware Design

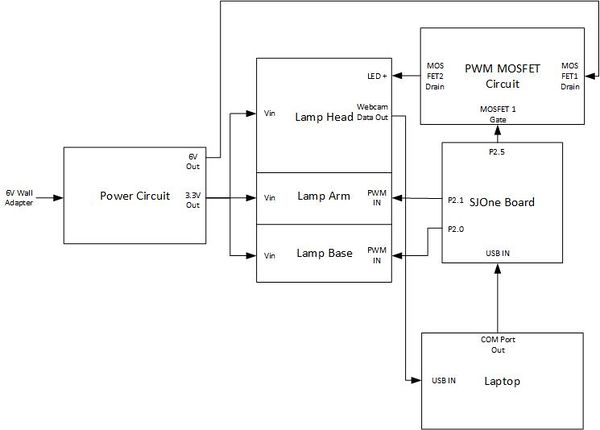

This project consists of a main lamp system that interfaces with the SJOne board and several other small subsystems.

The lamp is composed of a custom-made arm, base and head. Two servo motors connect the parts together: one connects the arm to the base and one connects the head to the arm. The arm is capable of rotating a full 180°, while the head was limited to rotating only 90°. The head houses a generic webcam inside of it that collects data for OpenCV to process. The head also has a 1 Watt LED mounted onto it to serve as the lamp's light source.

Because the motors draw a variable current that might exceed the current rating on the SJOne's pins, they were powered externally. The LED also shares this power circuit due to its large current draw. The basic voltage regulator circuit used to power them is shown below.

Figure 2. Voltage Regulator Circuit

The LED on the head was also given a dedicated circuit in order to control its brightness based on the lighting conditions in the room. The circuit can be seen below.

Figure 3. MOSFET Circuit to control PWM

Hardware Interface

The lamp's two servo motors are controlled by PWM pins 2.0 and 2.1 on the SJOne board. A camera mounted on the lamp feeds raw image data over USB to the laptop running OpenCV. The OpenCV tracking algorithm uses the image data to generate frame data, which the laptop sends to the SJOne board over a separate USB connection that appears as a COM port. The data is streamed to the board and processed as it arrives, since the volume of data being sent is too large to store on the board. The board runs the frame data through the algorithms detailed in the Software Design section to generate PWM signals corresponding to the next angle that the lamp should aim at.

The LED is also controlled by a PWM output from pin 2.5 on the SJOne board that changes duty cycles based on light sensor data. At the brightness level of a typical lit room, the PWM should run at a 0% duty cycle, turning off the LED. When the room is sufficiently dark, the PWM will run at a full 100% duty cycle to turn on the LED. Light values in-between ambient room brightness and full darkness will also make the LED light up with a varying brightness depending on how low the light level in the room is. The MOSFET circuit shown above is used to vary the voltage (and thus current) to the LED, allowing for its brightness to vary.

Software Design

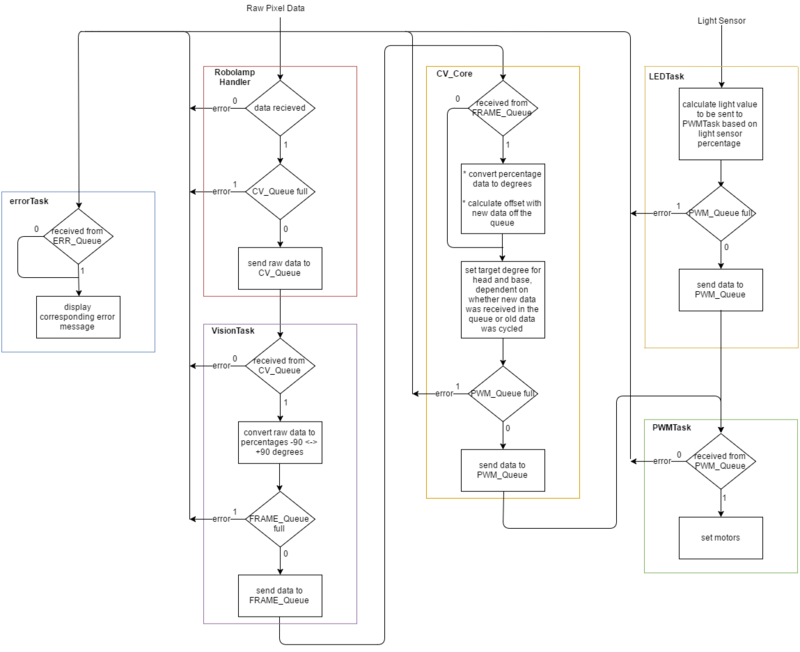

The bulk of the software design involves five tasks, four queues, and an added handler for accepting raw data from the separate OpenCV-driven program.

Implementation

Each task developed for this project is essential to successful execution. A brief description of each task's key operations is given below.

visionTask

The first task, visionTask, receives data from the handler. The 'x' and 'y' pixel values it receives lie within our camera resolution, 640x480. After visionTask successfully pulls data from the queue, the raw values are converted to a percentage from -100 to +100, with 0 at the center of the frame, using the calculation below. The results are placed on a queue for the next task, CV_core.

    frame.coordx = ((((float)raw.coordx/(float)raw.framex)*(200))-(100));
    frame.coordy = ((((float)raw.coordy/(float)raw.framey)*(200))-(100));

CV_core

CV_core contains all of the logic necessary for calculating and interpreting each servo motor's next movement. The incoming data is first converted from percentages to degrees. Using the camera's horizontal and vertical viewing angles (48 degrees in total for 'x', 36 degrees for 'y'), we can determine the total angular range covered by a single frame at our resolution. Next, the target angle for each motor is calculated based on whether the pixel coordinates have been updated or new data has been received on the queue. Once computed, this data is passed into another queue used by PWMTask. A small excerpt of the task showing the conversions is given below.

    float conversionRatio_DegreeX = ((CAM_ViewAngleHorizontal/100)/2);
    float conversionRatio_DegreeY = ((CAM_ViewAngleVertical/100)/2);

    float FRAME_DegreeX = conversionRatio_DegreeX * frame.coordx; // Range: -24 degrees to +24 degrees
    float FRAME_DegreeY = conversionRatio_DegreeY * frame.coordy; // Range: -18 degrees to +18 degrees
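
As a worked check of the two conversions, the following sketch chains the pixel-to-percentage step from visionTask with the percentage-to-degree step from CV_core. The function names and the constexpr form are illustrative assumptions, not the project's code; the 640x480 resolution and the 48°/36° viewing angles come from the text. The degree conversion multiplies before dividing, which is algebraically the same as scaling by (viewing angle / 100) / 2.

```cpp
// Illustrative sketch only: function names are hypothetical, not from the project source.

// Map a pixel coordinate in [0, frameSize] to a percentage in [-100, +100],
// where 0 is the centre of the frame (same formula as visionTask).
constexpr float pixelToPercent(float pixel, float frameSize)
{
    return ((pixel / frameSize) * 200.0f) - 100.0f;
}

// Map a percentage in [-100, +100] to an angular offset from the camera axis.
// viewAngleDeg is the camera's full viewing angle along that axis, so the
// result spans -viewAngleDeg/2 to +viewAngleDeg/2 (same scaling as CV_core).
constexpr float percentToDegree(float percent, float viewAngleDeg)
{
    return (percent * viewAngleDeg) / 200.0f;
}

// Convenience wrapper: pixel coordinate straight to an angular offset.
constexpr float pixelToDegree(float pixel, float frameSize, float viewAngleDeg)
{
    return percentToDegree(pixelToPercent(pixel, frameSize), viewAngleDeg);
}
```

For example, an object at x = 480 in a 640-pixel-wide frame sits at +50%, which corresponds to an offset of 12° from the camera axis with a 48° horizontal viewing angle.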

PWMTask

PWMTask receives data from CV_core and from another task discussed below, LEDTask. PWMTask can set the motor and LED values from either a percentage or an angle in degrees; in our case the pixel data is interpreted in degrees and the LED value as a percentage. The minimum and maximum servo duty cycles (2.5% and 12.5%), together with the maximum rotation (180°), are used to calculate the next PWM value to be set. The code below shows the small portion of PWMTask that converts the angle sent from CV_core into a PWM value.

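    // Map an angle in [-rot/2, +rot/2] to a duty cycle in [min, max].
    // Out-of-range angles are clamped to the nearest limit and return false.
    bool setDegree(PWM &pwm, float degree, float min, float max, float rot)
    {
        if (degree < -rot/2) {
            pwm.set(min);
            return false;
        }
        else if (degree > rot/2) {
            pwm.set(max);
            return false;
        }
        else {
            return pwm.set( ((degree/(rot/2))*((max-min)/2)) + ((max+min)/2) );
        }
    }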
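
To make the mapping in setDegree easier to check by hand, here is a minimal sketch of the same arithmetic as a side-effect-free function, so it can be evaluated without PWM hardware. The name degreeToDuty is hypothetical and not part of the project source; the 2.5% and 12.5% limits and the 180° rotation are the values given in the text.

```cpp
// Illustrative sketch only: degreeToDuty is a hypothetical name, not project code.
// Returns the duty cycle (in percent) that setDegree would pass to the PWM,
// clamping angles outside [-rot/2, +rot/2] to the nearest limit.
constexpr float degreeToDuty(float degree, float min, float max, float rot)
{
    return (degree < -rot / 2.0f) ? min   // below range: clamp to minimum duty
         : (degree >  rot / 2.0f) ? max   // above range: clamp to maximum duty
         : ((degree / (rot / 2.0f)) * ((max - min) / 2.0f)) + ((max + min) / 2.0f);
}
```

With min = 2.5, max = 12.5 and rot = 180, an angle of 0° gives a 7.5% duty cycle, +45° gives 10%, and ±90° give the 12.5% and 2.5% limits.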

LEDTask

LEDTask continuously polls the SJOne's on-board light sensor and sends a corresponding percentage to PWMTask. The LED's brightness is inversely related to the ambient light level: the darker the environment, the brighter the LED. A series of logic checks and calculations determines the LED percentage that is sent to PWMTask.
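
The text does not give the thresholds or the exact calculation LEDTask uses. A minimal sketch of one plausible mapping, assuming the light reading is available as a percentage and the response is linear between two cut-offs, could look like this. The function name and both thresholds are assumptions made for illustration.

```cpp
// Illustrative sketch only: the name and thresholds are hypothetical.
// lightPercent:  ambient light reading as a percentage (0 = dark, 100 = bright).
// darkPercent:   at or below this reading the LED runs at 100% duty.
// brightPercent: at or above this reading the LED is off (0% duty).
// Requires darkPercent < brightPercent.
constexpr float ledDutyFromLight(float lightPercent, float darkPercent, float brightPercent)
{
    return (lightPercent >= brightPercent) ? 0.0f
         : (lightPercent <= darkPercent)   ? 100.0f
         : ((brightPercent - lightPercent) / (brightPercent - darkPercent)) * 100.0f;
}
```

With cut-offs of 10% and 50%, a reading of 30% yields a 50% duty cycle, while readings of 60% and 5% yield 0% and 100% respectively.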

errorTask

The final task, errorTask, handles the errors reported by the error-checking logic in each task in the system. Depending on the error ID, the task displays a corresponding code on the SJOne's LED display. A portion of the error list is shown below.

    ERR_id error;
    if (pdTRUE == xQueueReceive(ERR_QueueHandle, &error, ERR_ReadTimeout)) {
        switch (error) {
            case roboLampHandler_cmdParams_scanf:
                LED_Display("HI");
                break;
            case roboLampHandler_xQueueSend_To_visionTask:
                LED_Display("HO");
                break;
            case visionTask_xQueueReceive_From_roboLampHandler:
                LED_Display("VI");
                break;
            case visionTask_xQueueSend_To_CV_Core:
                LED_Display("VO");
                break;
            ...
            ...
            ...
        }
    }

Finally, the separate OpenCV application continuously sends the 'x' and 'y' pixel coordinates, along with the camera's resolution, to the SJOne board over a serial connection. The OpenCV-based program detects objects by their HSV values. Each frame is thresholded against the HSV range set by the user, producing a binary image of white and black pixels. The resulting area is tracked as our detected object, and its coordinates are calculated. For an in-depth look at the calculations used for motor movement/smoothing and the development of a percentage/degree standard for specific tasks, see the GitHub repository listed towards the end of the documentation.
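
The text does not say how the coordinates of the thresholded area are calculated. One common approach is to take the centroid of the pixels that passed the threshold; in OpenCV this is typically done with cv::moments on the output of cv::inRange, though whether the project does exactly that is not stated. The sketch below shows the centroid idea in plain C++ (C++14 or later) with no OpenCV dependency. Centroid and maskCentroid are hypothetical names.

```cpp
#include <array>
#include <cstddef>

// Illustrative sketch only: a plain C++ stand-in for the coordinate step,
// not the project's OpenCV code.
struct Centroid {
    bool found;  // false when no pixel passed the threshold
    float x;     // mean column index of the white pixels
    float y;     // mean row index of the white pixels
};

// mask[row][col] is true where the pixel fell inside the chosen HSV range.
template <std::size_t W, std::size_t H>
constexpr Centroid maskCentroid(const std::array<std::array<bool, W>, H>& mask)
{
    std::size_t count = 0, sumX = 0, sumY = 0;
    for (std::size_t row = 0; row < H; ++row) {
        for (std::size_t col = 0; col < W; ++col) {
            if (mask[row][col]) {
                ++count;
                sumX += col;
                sumY += row;
            }
        }
    }
    if (count == 0) {
        return Centroid{false, 0.0f, 0.0f};
    }
    return Centroid{true,
                    static_cast<float>(sumX) / static_cast<float>(count),
                    static_cast<float>(sumY) / static_cast<float>(count)};
}
```

The resulting x and y values play the role of the pixel coordinates that are sent to the board together with the frame size.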

Testing & Technical Challenges


Issue #1

One of the primary issues our group ran into was finalizing an OpenCV application. We debated between color detection, cascade classifiers, and motion detection. Motion detection proved too inaccurate for our design, and cascade classifiers required more time for training and sampling, so color detection was chosen for its time efficiency and detection accuracy. Given more time, however, developing a cascade classifier for back-of-hand features would have given the project more depth.

Issue #2

The LED circuit was capable of lighting up the LED at a variable brightness, but the lack of a filter system would cause the light to flicker when the duty cycle was at values between 0% and 100%. The flickering would become bothersome to anyone looking at the light for extended periods of time, so for our demo we made the light more "binary", only switching between on and off. Once a filtering system is implemented, the light should be fully capable of brightness values between off and full brightness.

Conclusion

Overall, this project was successful. Our original idea involved modifying an existing lamp, but we found that designing our own would be much more beneficial for the project. The design process familiarized our group with 3D design and OpenCV and furthered our understanding of FreeRTOS. Once the initial prototype was built, we spent the remainder of our time testing and refining several aspects of the lamp, including motor smoothing and LED output. Due to time constraints, we were not able to develop a cascade classifier for object tracking to replace our current detection method. In the end, understanding the functionality of each aspect of the design and continuously testing our implementation were critical to a successful outcome.

Project Video

Demonstration: https://www.youtube.com/watch?v=BLMEl06vN8I

Project Source Code

References


References Used

https://www.youtube.com/user/khounslow
