S15: Swarm Robots

Latest revision as of 14:56, 27 May 2015


Swarm Robots

Abstract

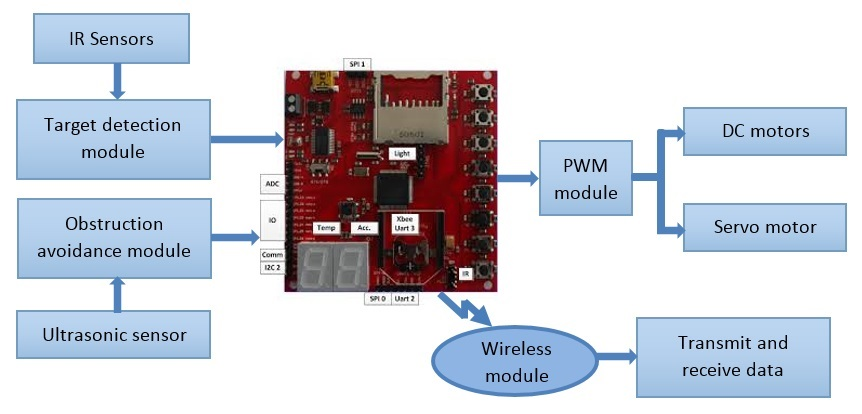

Swarm robotics deals with the coordination of multiple robots. The collective behavior of the swarm emerges from the robots' interactions with each other and with their surroundings. All the robots can communicate with each other, and the position of each robot is determined and monitored by an overhead camera. A large number of simple robots can perform complex tasks more efficiently than a single robot, which gives the group robustness and flexibility.

Objectives & Introduction

- Initially, all robots search for the target. When the target is located, the robot that found it sends a message with its present location to all the other robots.

- An overhead camera determines the location of each robot.

- Once the other robots receive the target position, each of them moves towards the target.

Team Members & Responsibilities

- Team Member 1

  - Abhishek Gurudutt - IR sensor circuit and interface, OpenCV (image detection and co-ordinate mapping).

- Team Member 2

  - Bhargava Sreekantappa Gayithri - Robot assembly, motor driver circuit, interfacing the ultrasonic sensors and motors.

- Team Member 3

  - Chinmayi Divakara - Wireless communication and synchronization.

- Team Member 4

  - Praveen Prabhakaran - OpenCV co-ordinate mapping, OpenCV to SJOne board communication, and the algorithm that moves all the robots towards the target once it is detected.

- Team Member 5

  - Tejeshwar Chandra Kamaal - Robot assembly, motor driver circuit, interfacing the ultrasonic sensors and motors.

Schedule

| Week# | Start Date | Task | Actual | Status |
|---|---|---|---|---|
| 1 | 4/7/2015 | 1) Hardware - Design of motor controller, robot assembly<br>2) Software - OpenCV environment setup, basic video processing | 1) Hardware and OpenCV setup completed. Problem encountered: a hardware bug was discovered in version 0.1 of the motor controller circuit after testing.<br>2) Software - Problems filtering the video for certain colors. | Completed |
| 2 | 4/14/2015 | 1) Communication between OpenCV and the SJOne board using a DB9 connector and UART3<br>2) PWM and ultrasonic sensor drivers<br>3) Object detection and mapping<br>4) Wireless communication between two nodes | 1) Modified the RS232 header file to support the Windows 7 file API. Communication between OpenCV and the SJOne board did not work over UART0.<br>2) Could not receive the echo signal; the ultrasonic sensor could not be used without an interrupt.<br>3) The HSV values of each color had to be found in order to recognize it, which was difficult. This was solved by writing a program that prints the HSV value of the color pointed at in the video.<br>4) Packet sending and receiving failures; the system crashed while transmitting and receiving packets. | Completed |
| 3 | 4/21/2015 | 1) Co-ordinate communication between nodes and boundary mapping<br>2) Inter-board communication and communicating the co-ordinates<br>3) IR sensor circuit and software | 1) Object detection completed; co-ordinate mapping in progress.<br>2) Inter-board communication completed.<br>3) IR sensor circuit and software to read the values completed. | Completed |
| 4 | 5/5/2015 | 1) Integration, testing and bug fixing | Integration and testing successful | Completed |

Parts List & Cost

| Serial No. | Part Description | Cost |
|---|---|---|
| 1 | Robot chassis and motors | $66.70 |
| 2 | Servo motors | $27.38 |
| 3 | Wireless camera | $26.21 |
| 4 | Ultrasonic sensor | $17.98 |
| 5 | LiPo batteries and charger | $43.28 |
| 6 | H-bridge IC (L293D) | $10.91 |
| 7 | Connectors, cables, etc. | $45.00 |
| 8 | Antennas for wireless module | $15.00 |
| 9 | Foam board for arena | $65.00 |

Design & Implementation

Hardware Design

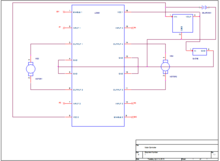

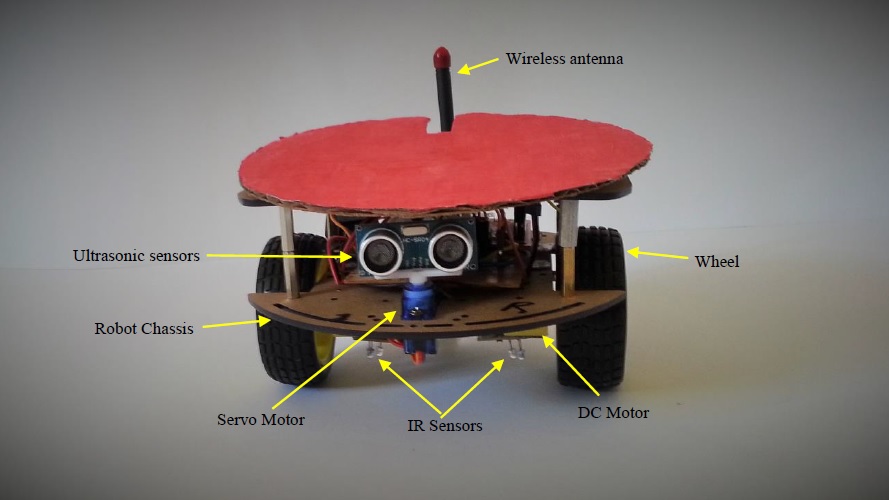

- All parts of the robot were assembled by the team. Assembly started with mounting the DC motors on the chassis and then attaching the wheels. The motors are driven by the L293D IC. An ultrasonic sensor is mounted on a servo motor to detect obstacles in the robot's path; the servo rotates the sensor through 180 degrees from its initial position.

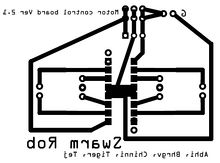

- The team designed the motor driver schematic and fabricated the PCB themselves, following the standard process for transferring a circuit layout onto a PCB. The H-bridge figure shows the layout of the motor driver. The H-bridge drives the robot's two DC motors, which are powered by a rechargeable LiPo battery.

- The robot chassis is a circular cardboard platform, strong enough to support all the components mounted on the robot. The chassis kits were bought online from https://www.elabpeers.com/smart-car-robot-with-chassis-and-kit-round-black.html

- The schematic of the L293D circuit, together with the LM7805 regulator, was drawn in OrCAD. Its purpose was to get the connections between the motors, the L293D IC and the 7805 right. The L293D drives the robot's two DC motors, and their speed is controlled by applying a PWM signal to the L293D enable pins. The LM7805 voltage regulator supplies 5 V to the SJ-One board and its peripherals.
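The direction and speed logic for one L293D channel can be sketched in C as follows. This is a minimal illustration that assumes the standard L293D truth table: EN high with inputs 1A/2A at opposite levels drives the motor, and EN low lets it coast. `l293d_cmd_t` and `l293d_from_speed` are hypothetical names. Writing the resulting levels to the GPIO and PWM pins is left to the board's own drivers.

```c
#include <stdbool.h>
#include <stdint.h>

/* One L293D channel: direction inputs 1A/2A plus the PWM duty on EN.
 * Type and function names are hypothetical, for illustration only. */
typedef struct {
    bool in1;          /* level for L293D input 1A */
    bool in2;          /* level for L293D input 2A */
    uint8_t duty_pct;  /* PWM duty cycle on the EN pin, 0..100 % */
} l293d_cmd_t;

/* Map a signed speed request (-100..100) to L293D pin levels.
 * EN high with 1A high / 2A low spins the motor one way, 1A low / 2A high
 * spins it the other way, and EN low (duty 0) lets it coast.
 * Which direction counts as "forward" depends on the motor wiring. */
l293d_cmd_t l293d_from_speed(int speed)
{
    l293d_cmd_t cmd = { false, false, 0 };   /* default: coast */

    if (speed > 100)  speed = 100;           /* clamp out-of-range requests */
    if (speed < -100) speed = -100;

    if (speed > 0) {
        cmd.in1 = true;                      /* 1A high, 2A low */
        cmd.duty_pct = (uint8_t)speed;
    } else if (speed < 0) {
        cmd.in2 = true;                      /* 1A low, 2A high */
        cmd.duty_pct = (uint8_t)(-speed);
    }
    return cmd;
}
```

For example, `l293d_from_speed(-40)` sets 2A high and requests a 40 % duty cycle on the enable pin.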

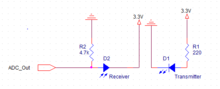

- The IR sensor circuit is used to detect the target on the surface beneath the robot. Its schematic was also drawn in OrCAD. The sensor is powered from a 3.3 V supply. The transmitter LED sends a light pulse and the receiver LED picks up the reflected light; the voltage across the receiver's resistor changes with the amount of light received. If no light is received, the voltage across the resistor is very low.

- Servo motors provide precise rotary positioning of the ultrasonic sensors. The servo angle is set by the width of the PWM pulses applied to its control pin.

- Ultrasonic sensors are used to detect obstacles. When the sensor is triggered through its trigger pin, it transmits a burst of sound waves that hits the object in front and is reflected back. When the echo is received, the echo pin shows a falling edge. The time taken for the sound to return is used to calculate the distance of the object from the sensor.
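The distance calculation follows from the echo timing. The sound travels to the object and back, so distance = speed of sound × echo time / 2. The sketch below makes two assumptions: the HC-SR04 holds its echo line high for the round-trip time, and the speed of sound is 343 m/s (about 20 °C). The function names are hypothetical. Measuring the pulse width itself is not shown; one option is timestamping the edges in the external interrupt handler.

```c
#include <stdbool.h>
#include <stdint.h>

/* Speed of sound in air at about 20 degrees C.
 * 343 m/s is the same as 0.343 mm per microsecond. */
#define SOUND_SPEED_M_PER_S 343u

/* Convert the echo pulse width (round-trip time, microseconds) to the
 * obstacle distance in millimetres:
 *   d = t_us * 0.343 mm/us / 2 = t_us * 343 / 2000
 * The division by 2 accounts for the sound travelling there and back. */
uint32_t echo_us_to_mm(uint32_t echo_us)
{
    return (uint32_t)(((uint64_t)echo_us * SOUND_SPEED_M_PER_S) / 2000u);
}

/* True when the obstacle is closer than the avoidance threshold. */
bool obstacle_too_close(uint32_t echo_us, uint32_t threshold_mm)
{
    return echo_us_to_mm(echo_us) < threshold_mm;
}
```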

Hardware Interface

Design considerations

1. The PWM pins of the SJOne board supply the pulses to the motor driver's enable pins and to the servo motor.

2. UART3 is used for communication between the laptop and the SJOne board.

3. ADC pins are used to read the values from the IR sensors.
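For the IR sensors, the ADC count can be converted back into the voltage across the receiver's resistor. The sketch assumes the LPC1758's 12-bit ADC (counts 0 to 4095) with a 3.3 V reference. `adc_to_mv` is a hypothetical helper, not part of Preet's ADC API.

```c
#include <stdint.h>

#define ADC_MAX_COUNT 4095u   /* 12-bit converter: counts 0..4095 */
#define ADC_VREF_MV   3300u   /* assumed 3.3 V reference */

/* Convert a raw ADC count into millivolts, rounded to the nearest mV. */
uint32_t adc_to_mv(uint16_t raw)
{
    if (raw > ADC_MAX_COUNT)
        raw = ADC_MAX_COUNT;   /* guard against out-of-range input */
    return ((uint32_t)raw * ADC_VREF_MV + ADC_MAX_COUNT / 2u) / ADC_MAX_COUNT;
}
```

A black target reflects little light, so it shows up as a small count and a correspondingly low voltage.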

Pin connections

| Serial No. | Port and Pin number | Pin Type | Purpose |
|---|---|---|---|
| 1 | P0.1, P0.0, P0.29, P0.30 | GPIO output | DC motors |
| 2 | P2.0, P2.1 | PWM | DC motors |
| 3 | P2.2 | PWM | Servo motor |
| 4 | P1.19, P2.7 | GPIO (trigger output, echo input) | Ultrasonic sensor |
| 5 | P0.26, P1.30 | ADC | IR sensors |
| 6 | P4.28, P4.29 | UART3 | Communication between OpenCV and the SJOne board |

Software Design

- Robot control and wireless communication are implemented on FreeRTOS.

- The following FreeRTOS features are used:

  1. FreeRTOS tasks and scheduler

  2. FreeRTOS queues

  3. FreeRTOS binary semaphore

  4. FreeRTOS mutex

- The FreeRTOS scheduler runs all the tasks on the robot.

- FreeRTOS queues are used to communicate between tasks.

- A FreeRTOS binary semaphore is used to signal a task to run.

- A FreeRTOS mutex protects shared resources.

- Robot-to-robot communication uses a wireless mesh network.

- The PWM API developed by Preet is used to control the DC motors and the servo that rotates the ultrasonic sensor.

- The ADC API developed by Preet is used to read the analog values from the IR sensors.

- Camera access and robot detection are implemented on a computer using the OpenCV library.

Implementation

Motor Control

DC Motor: DC motors move the robot. A PWM signal applied to the enable pin drives each DC motor; the PWM is generated with the API provided by Preet. Depending on the situation, the robot either turns or goes straight. To turn, the wheels are rotated in opposite directions.
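The straight and turn-in-place motions described above map to signed wheel speeds as in this sketch. The enum and function names are hypothetical, and speeds use a -100..100 scale. Each resulting value would then drive one L293D channel. Per-robot tuning of the two wheels is not modeled.

```c
/* Hypothetical motion commands for a two-wheel differential-drive robot. */
typedef enum { MOVE_STOP, MOVE_FORWARD, MOVE_TURN_LEFT, MOVE_TURN_RIGHT } move_t;

/* Signed speed per wheel, -100..100 (negative = reverse). */
typedef struct { int left; int right; } wheel_speeds_t;

/* Going straight runs both wheels the same way; turning spins the wheels
 * in opposite directions so the robot rotates in place. */
wheel_speeds_t wheels_for(move_t move, int speed)
{
    wheel_speeds_t w = { 0, 0 };
    switch (move) {
    case MOVE_FORWARD:    w.left =  speed; w.right =  speed; break;
    case MOVE_TURN_LEFT:  w.left = -speed; w.right =  speed; break;
    case MOVE_TURN_RIGHT: w.left =  speed; w.right = -speed; break;
    case MOVE_STOP:
    default:              break;   /* both wheels stopped */
    }
    return w;
}
```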

Servo Motor: The ultrasonic sensor on each robot is turned left or right by a servo motor. When an obstacle is detected, the sensor is turned to the left and to the right to check for free space. The servo shaft is positioned by the PWM signal applied to it.
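Servo positioning can be expressed as an angle-to-pulse-width conversion. This sketch assumes typical hobby servo timing: a 20 ms (50 Hz) frame, with 1.0 ms for 0 degrees and 2.0 ms for 180 degrees. Many servos use a wider range (for example 0.5 to 2.5 ms), so check the limits against the servo's datasheet. The names are hypothetical.

```c
#include <stdint.h>

/* Assumed servo timing; verify against the servo datasheet. */
#define SERVO_PERIOD_US    20000u   /* 50 Hz frame */
#define SERVO_MIN_PULSE_US  1000u   /* pulse width for 0 degrees */
#define SERVO_MAX_PULSE_US  2000u   /* pulse width for 180 degrees */

/* Convert an angle (clamped to 0..180 degrees) to a pulse width in microseconds,
 * interpolating linearly between the minimum and maximum pulse widths. */
uint32_t servo_pulse_us(int angle_deg)
{
    if (angle_deg < 0)   angle_deg = 0;
    if (angle_deg > 180) angle_deg = 180;
    return SERVO_MIN_PULSE_US +
           (uint32_t)angle_deg * (SERVO_MAX_PULSE_US - SERVO_MIN_PULSE_US) / 180u;
}

/* The same pulse width expressed as a duty cycle (percent of the 20 ms frame). */
float servo_duty_pct(int angle_deg)
{
    return 100.0f * (float)servo_pulse_us(angle_deg) / (float)SERVO_PERIOD_US;
}
```

Under these assumptions, centering the sensor (90 degrees) needs a 1.5 ms pulse, which is a 7.5 % duty cycle.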

Obstacle detection

Obstacles are detected with an ultrasonic sensor. The trigger pin of the sensor is pulsed, and the echo pin is monitored for a falling edge. The time between triggering the sensor and receiving the falling edge is used to calculate the distance of the obstacle from the robot. When that distance falls below a threshold, the software is interrupted and the robot changes direction to avoid a collision, turning towards a direction with no obstacle.
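The avoidance decision can be sketched as a small pure function. Its inputs are the front reading plus the left and right readings taken by sweeping the servo. The sketch assumes all distances are in millimetres and picks the side with more clearance. The `TURN_AROUND` case, for when every scanned direction is blocked, is a hypothetical addition. The threshold is a tuning parameter the text does not specify.

```c
#include <stdint.h>

/* Hypothetical reactions to the latest ultrasonic readings. */
typedef enum { DRIVE_AHEAD, TURN_LEFT, TURN_RIGHT, TURN_AROUND } avoid_t;

/* Decide how to move given the front, left and right distances (mm).
 * threshold_mm is the minimum clearance treated as free space. */
avoid_t choose_direction(uint32_t front_mm, uint32_t left_mm,
                         uint32_t right_mm, uint32_t threshold_mm)
{
    if (front_mm >= threshold_mm)
        return DRIVE_AHEAD;                       /* path ahead is clear */
    if (left_mm < threshold_mm && right_mm < threshold_mm)
        return TURN_AROUND;                       /* every scanned side is blocked */
    return (left_mm >= right_mm) ? TURN_LEFT : TURN_RIGHT;   /* more room wins */
}
```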

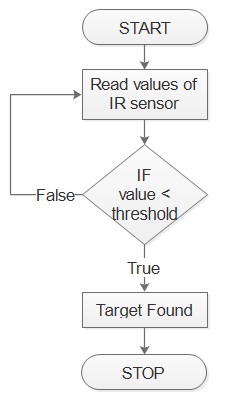

Target detection

Infrared sensors are used to detect the target. In this project the target is a black spot, and it is detected when the IR sensor reading falls below a threshold. The analog output of each IR sensor is converted to a digital value and read at regular intervals. An IR sensor is placed near each wheel, and either sensor can indicate that the target has been detected. The robot is then turned towards the target according to which IR sensor detected it.
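Here is a minimal sketch of the two-sensor target check. It assumes raw 12-bit ADC counts and a threshold calibrated with the white-sheet/black-sheet test. The enum, the function name and any threshold value are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical result of the two-sensor target check. */
typedef enum { TARGET_NONE, TARGET_LEFT, TARGET_RIGHT, TARGET_BOTH } target_t;

/* A reading below the threshold means little reflected light, i.e. the
 * sensor is over the black target spot. The result tells the robot which
 * way to turn (or that it is on the target). */
target_t detect_target(uint16_t left_adc, uint16_t right_adc, uint16_t threshold)
{
    bool left  = left_adc  < threshold;
    bool right = right_adc < threshold;

    if (left && right) return TARGET_BOTH;
    if (left)          return TARGET_LEFT;
    if (right)         return TARGET_RIGHT;
    return TARGET_NONE;
}
```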

Detecting and locating robot

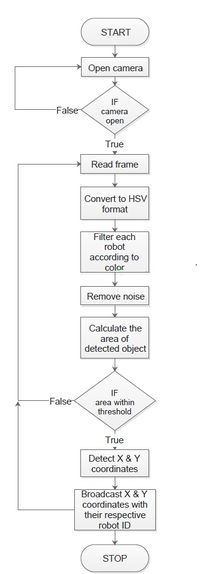

This is achieved with an overhead wireless camera, and each robot is updated with its present location. Because the robots run in a closed, indoor environment, GPS cannot provide accurate locations, so the camera is used as a replacement for GPS.

Detection of robot: Each robot has a different color, which is how the robots are told apart. A frame is captured from the camera every 30 milliseconds. Each frame is in RGB format and is converted to HSV format before filtering. Because hue (H), saturation (S) and value (V) differ between colors, each robot can be isolated by defining an HSV range for its color and filtering the image against that range. The filtered image is then processed to remove noise.
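On the PC this step is done by OpenCV (`cv::cvtColor` followed by `cv::inRange`). The plain-C sketch below only illustrates what that conversion and range check compute for a single pixel. It uses OpenCV's 8-bit HSV convention: H from 0 to 179, S and V from 0 to 255. The type and function names are hypothetical, and the rounding may differ slightly from OpenCV's own implementation.

```c
#include <stdbool.h>
#include <stdint.h>

/* HSV pixel in OpenCV's 8-bit ranges: H 0..179 (degrees / 2), S and V 0..255. */
typedef struct { uint8_t h, s, v; } hsv8_t;

/* Convert one RGB pixel to 8-bit HSV. OpenCV stores frames in BGR order,
 * so the caller must pass the channels as R, G, B. */
hsv8_t rgb_to_hsv8(uint8_t r, uint8_t g, uint8_t b)
{
    uint8_t maxc = r > g ? (r > b ? r : b) : (g > b ? g : b);
    uint8_t minc = r < g ? (r < b ? r : b) : (g < b ? g : b);
    float delta = (float)(maxc - minc);
    float h_deg = 0.0f;                      /* hue in degrees, 0..360 */
    int h;
    hsv8_t out;

    if (delta > 0.0f) {
        if (maxc == r)      h_deg = 60.0f * (float)(g - b) / delta;
        else if (maxc == g) h_deg = 120.0f + 60.0f * (float)(b - r) / delta;
        else                h_deg = 240.0f + 60.0f * (float)(r - g) / delta;
        if (h_deg < 0.0f)   h_deg += 360.0f;
    }
    h = (int)(h_deg / 2.0f + 0.5f);          /* halve to fit 8 bits, round */
    if (h >= 180) h -= 180;                  /* hue is circular: 180 == 0 */

    out.h = (uint8_t)h;
    out.s = maxc ? (uint8_t)(255.0f * delta / (float)maxc + 0.5f) : 0;
    out.v = maxc;
    return out;
}

/* Per-pixel equivalent of cv::inRange: every channel within [lo, hi]. */
bool hsv_in_range(hsv8_t p, hsv8_t lo, hsv8_t hi)
{
    return p.h >= lo.h && p.h <= hi.h &&
           p.s >= lo.s && p.s <= hi.s &&
           p.v >= lo.v && p.v <= hi.v;
}
```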

Location of robot: Each filtered image contains only one object. The outline (contour) of the object is found, and its area is compared with a threshold; if the area is less than the threshold, the object is discarded as noise. The spatial coordinates of the outlined object give the location of the robot.
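The area check and position estimate can be sketched without OpenCV by treating the filtered image as a binary mask. The project traced the contour, which is what `cv::findContours` and `cv::contourArea` do. This simplified version instead uses the pixel count as the area and the mean pixel position as the robot's location, which corresponds to computing image moments. The function name and the `min_area` parameter are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { int x, y; } point_t;

/* Locate the single colored blob in a binary mask (nonzero = pixel inside
 * the robot's HSV range). The pixel count plays the role of the blob area
 * and the mean pixel position gives its center (image moments m00, m10/m00,
 * m01/m00). Returns false when the area is below min_area, i.e. noise. */
bool locate_blob(const uint8_t *mask, int width, int height,
                 long min_area, point_t *center)
{
    long area = 0, sum_x = 0, sum_y = 0;

    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            if (mask[(size_t)y * (size_t)width + (size_t)x]) {
                area++;
                sum_x += x;
                sum_y += y;
            }
        }
    }

    if (area < min_area)
        return false;               /* too small: treat as noise */

    center->x = (int)(sum_x / area);
    center->y = (int)(sum_y / area);
    return true;
}
```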

Communication between robots

Each robot has a unique ID, which is used to communicate with a particular robot. Data between robots is exchanged using the API written by Preet for the built-in wireless module. Queues and a mutex are used at this stage: queues pass the co-ordinates of the robots between tasks, and a mutex ensures that only one task at a time accesses the robot co-ordinates.
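On the robots, the queues come from FreeRTOS (`xQueueSend` / `xQueueReceive`) and the mutex from `xSemaphoreCreateMutex`. The sketch below illustrates only the queue semantics: a fixed-size FIFO that copies each coordinate message by value. It is single-threaded and leaves out the blocking and locking that FreeRTOS provides. The message layout, the capacity and all names are assumptions.

```c
#include <stdbool.h>
#include <stdint.h>

/* One coordinate update as it might be passed between tasks. */
typedef struct {
    uint8_t  robot_id;
    uint16_t x, y;
} coord_msg_t;

#define COORD_QUEUE_LEN 8u   /* hypothetical capacity */

/* Fixed-size FIFO that copies messages by value, like a FreeRTOS queue.
 * This single-threaded sketch has no locking; on the robot the RTOS queue
 * (or a mutex) makes the send/receive operations safe between tasks. */
typedef struct {
    coord_msg_t items[COORD_QUEUE_LEN];
    unsigned head;   /* index of the oldest message */
    unsigned count;  /* number of messages stored */
} coord_queue_t;

void coord_queue_init(coord_queue_t *q)
{
    q->head = 0;
    q->count = 0;
}

/* Append a copy of *msg; returns false if the queue is full. */
bool coord_queue_send(coord_queue_t *q, const coord_msg_t *msg)
{
    if (q->count == COORD_QUEUE_LEN)
        return false;
    q->items[(q->head + q->count) % COORD_QUEUE_LEN] = *msg;
    q->count++;
    return true;
}

/* Remove the oldest message into *out; returns false if the queue is empty. */
bool coord_queue_receive(coord_queue_t *q, coord_msg_t *out)
{
    if (q->count == 0)
        return false;
    *out = q->items[q->head];
    q->head = (q->head + 1) % COORD_QUEUE_LEN;
    q->count--;
    return true;
}
```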

Communicating locations to robots

The co-ordinates of each robot detected through OpenCV are transmitted to an SJOne board through a DB9 connector and UART3 on the SJOne board. Each co-ordinate is sent with a header and the robot ID. This board sends the information out to all the other robots over the wireless network, so each robot keeps track of its location and determines the direction of its movement. Once the target is found, the robot that found it broadcasts its present location to the other robots and indicates that the target has been found. On receiving this message, the other robots start moving towards the target location.
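The "header and robot ID" framing can be sketched as follows. The exact frame format is not documented here, so the 7-byte layout, the 0xA5 header value and the message-type codes are all hypothetical. A fixed header byte lets the receiving board find the start of a frame in the UART byte stream.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical frame layout (7 bytes, big-endian coordinates):
 *   [0] header 0xA5  [1] robot ID  [2] message type
 *   [3..4] x         [5..6] y                                  */
#define FRAME_HEADER 0xA5u
#define FRAME_LEN    7u

enum { MSG_POSITION = 1, MSG_TARGET_FOUND = 2 };

typedef struct {
    uint8_t  robot_id;
    uint8_t  type;     /* MSG_POSITION or MSG_TARGET_FOUND */
    uint16_t x, y;     /* camera (pixel) coordinates */
} robot_msg_t;

/* Serialize a message into buf, which must hold FRAME_LEN bytes. */
void frame_encode(const robot_msg_t *m, uint8_t buf[FRAME_LEN])
{
    buf[0] = FRAME_HEADER;
    buf[1] = m->robot_id;
    buf[2] = m->type;
    buf[3] = (uint8_t)(m->x >> 8);
    buf[4] = (uint8_t)(m->x & 0xFFu);
    buf[5] = (uint8_t)(m->y >> 8);
    buf[6] = (uint8_t)(m->y & 0xFFu);
}

/* Parse a frame; returns false if it is too short or the header is wrong,
 * so the receiver can skip bytes until the next header. */
bool frame_decode(const uint8_t *buf, size_t len, robot_msg_t *m)
{
    if (len < FRAME_LEN || buf[0] != FRAME_HEADER)
        return false;
    m->robot_id = buf[1];
    m->type     = buf[2];
    m->x = (uint16_t)((buf[3] << 8) | buf[4]);
    m->y = (uint16_t)((buf[5] << 8) | buf[6]);
    return true;
}
```

Under this layout, a robot that reaches the target would broadcast a `MSG_TARGET_FOUND` frame carrying its own co-ordinates.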

Testing & Technical Challenges

Unit test and system test conditions

| Serial No. | Test Case | Test Description | Result |
|---|---|---|---|
| 1 | Ultrasonic sensor | Check the distance to an object; test the minimum distance and distances up to 40 cm. | All sensors PASSED |
| 2 | DC motors | Check enabling and disabling of each individual motor; test maximum speed and the full-load condition. | All motors PASSED |
| 3 | Servo motor | Check rotation of the motor; test the minimum angle of rotation and full coverage (0° to 180°). | All motors PASSED |
| 4 | IR sensors | Check the values from the sensors: place a white sheet and observe larger values, then place a black sheet and observe smaller values. | All sensors PASSED |
| 5 | Wireless communication | Check transmission of data with a unique ID and whether the respective board receives the data. | PASSED for all boards |
| 6 | OpenCV | Check color detection and co-ordinate mapping for each color; check detection of multiple colors. | PASSED for multiple colors |

Challenges

Integrating all the modules into a complete project is always a challenging task. Integrating one module at a time, testing it and solving the issues was tedious.

Issue 1 : Interfacing Ultrasonic sensor

- Details - Initially we tried to use polling to detect the falling edge of the echo signal on the micro-controller pin, but capturing the edge this way proved difficult. With further testing and debugging, we found that the trigger pin of the ultrasonic sensor was damaged, so the sensor was not operating as expected.

- Solution - The ultrasonic sensor was replaced, and the approach was changed from polling to an external interrupt on the micro-controller pin. This gave the expected results.

- Advice - First test with more than one unit of the hardware to confirm that it works as expected. If the problem persists, then check the software logic.
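The sketch below shows the interrupt-driven approach. It assumes the interrupt handler is told the edge direction and can read a free-running microsecond timer. Both are hypothetical, since the SJOne interrupt API is not shown here. The sketch measures the echo high time, from the rising to the falling edge, which is what the HC-SR04 datasheet relates to distance. Timing from the trigger instead, as described under obstacle detection, only changes where the start timestamp is taken.

```c
#include <stdbool.h>
#include <stdint.h>

/* State shared between the (simulated) GPIO interrupt handler and the task
 * that reads the result. volatile because it is written from an ISR. */
typedef struct {
    volatile uint32_t rise_us;    /* timestamp of the echo rising edge */
    volatile uint32_t width_us;   /* last measured echo pulse width */
    volatile bool     ready;      /* set when a new width is available */
} echo_capture_t;

/* Called from the external-interrupt handler on every edge of the echo pin,
 * with the current microsecond timer value. Unlike polling, each edge is
 * timestamped within the interrupt latency instead of whenever a polling
 * loop happens to check the pin. */
void echo_edge_isr(echo_capture_t *c, bool rising, uint32_t now_us)
{
    if (rising) {
        c->rise_us = now_us;
    } else {
        c->width_us = now_us - c->rise_us;   /* unsigned math handles timer wrap */
        c->ready = true;
    }
}

/* Task side: fetch a completed measurement, if any. */
bool echo_read(echo_capture_t *c, uint32_t *width_us)
{
    if (!c->ready)
        return false;
    *width_us = c->width_us;
    c->ready = false;
    return true;
}
```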

Issue 2 : PCB for Motor Driver

- Details - The PCB layout for the DC motor driver was designed by the team. In the initial phase, the board was etched using ferric chloride. After the process was completed, the etched PCB did not match the schematic because the tracks were discontinuous in a few places, so the PCB had to be discarded.

- Solution - Monitor the etching process carefully and stir the solution continuously so that the process is faster. Also scrub the areas where the copper has to be etched.

- Advice - Use a larger quantity of fresh ferric chloride solution so that the process goes smoothly.

Issue 3 : PWM tuning

- Issue - Tuning the DC motors was an important task, since the movement of the robot determines the overall functionality of the project and is interlinked with its other parts. All the robots had to be tuned individually, which was time-consuming.

- Advice - Use high-precision motors to reduce the tuning time.
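One common way to make per-robot tuning repeatable is to store a trim factor for each wheel and apply it to every speed command. The sketch below is a hypothetical illustration of that idea, not the tuning method the team used. The trim values would be found by driving each robot straight and adjusting until it stops drifting.

```c
#include <stdint.h>

/* Hypothetical per-robot calibration: because no two DC motors run at
 * exactly the same speed for the same duty cycle, each wheel gets a trim
 * factor (in percent) found by driving the robot straight and adjusting
 * until it stops drifting. */
typedef struct {
    uint8_t left_trim_pct;    /* e.g. 100 = no correction */
    uint8_t right_trim_pct;
} motor_trim_t;

/* Scale a requested speed (0..100 % duty) by a wheel's trim and clamp
 * the result to the valid duty-cycle range. */
static uint8_t apply_trim(uint8_t speed_pct, uint8_t trim_pct)
{
    unsigned duty = (unsigned)speed_pct * trim_pct / 100u;
    return (uint8_t)(duty > 100u ? 100u : duty);
}

/* Compute the left and right duty cycles for driving straight ahead. */
void straight_duty(const motor_trim_t *t, uint8_t speed_pct,
                   uint8_t *left_duty, uint8_t *right_duty)
{
    *left_duty  = apply_trim(speed_pct, t->left_trim_pct);
    *right_duty = apply_trim(speed_pct, t->right_trim_pct);
}
```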

Issue 4 : Communication between OpenCV and SJOne board

- Issue - While transmitting data from OpenCV to the SJOne board, the data received never matched the data sent. The data was transmitted through a USB to DB9 converter whose Tx, Rx and ground pins were connected to the SJOne board.

- Analysis and Solution - After analyzing the received data, we found that the signal levels did not match: a USB to DB9 converter produces RS-232 signal levels, whereas the SJOne board's UART pins expect TTL logic levels. Using a USB to UART (TTL) bridge solved this problem.

Conclusion

This team project was a good learning experience for the team. It not only helped us learn and improve our technical skills but also improved our teamwork and work-management skills. On the hardware side, we learned to design schematics and draw PCB layouts, and we also carried out the PCB etching process ourselves. We handled the hardware debugging issues carefully and rectified them. On the software side, we learned to write drivers for the sensors and DC motors, implement wireless communication between the robots, and use OpenCV to find the robots' locations. Overall, we consider this project a success, as it helped us improve both our technical and non-technical skills, which will help us in a real work environment.

Project Video

Project Source Code

References

Acknowledgement

The hardware components for this project were procured from HobbyKing, eBay, Amazon, GetFPV, Home Depot and various other sources. Thanks to Preetpal Kang for teaching this class and for inspiring, guiding and supporting us with our coursework and project. Thanks to the entire team for helping and supporting each other during all stages of the project and for spending weekends and extra hours to get all parts of the project working.

References Used

- Preetpal Kang, lecture notes for CMPE 244, Computer Engineering, Charles W. Davidson College of Engineering, San Jose State University, Jan-May 2015.

- http://www.socialledge.com/sjsu/index.php?title=Main_Page

- L293D datasheet

- LM7805 datasheet

- HC-SR04 ultrasonic sensor datasheet

- Servo motor datasheet

- LPC1758 datasheet

- OpenCV API library

- Preet's example for wireless communication

- LiPo battery

- FreeRTOS tutorial, http://www.freertos.org/