Difference between revisions of "F17: Vindicators100"

Proj user14 (talk | contribs) (→Hardware Design) |

(→Grading Criteria) |

||

| (321 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | = | + | |

| + | <center><font size=20px>Vindicators100 Autonomous RC Car</font></center> | ||

== Abstract == | == Abstract == | ||

| − | + | <center>In this project we designed and developed an autonomous RC car. The purpose was to go through the development process, from beginning to end, to build a car that can avoid obstacles on its own to reach a specified destination using a variety of skills and tools including CAN communication between five SJOne boards.</center> | |

| + | |||

| + | [[File:CmpE243 S17 Vindicators100 CarDriving.gif|border|center]] | ||

== Objectives & Introduction == | == Objectives & Introduction == | ||

| Line 48: | Line 40: | ||

== Schedule == | == Schedule == | ||

| − | |||

{| class="wikitable" | {| class="wikitable" | ||

|- | |- | ||

| − | ! scope="col"| Sprint | + | ! scope="col"| Sprint |

! scope="col"| End Date | ! scope="col"| End Date | ||

! scope="col"| Plan | ! scope="col"| Plan | ||

| Line 89: | Line 80: | ||

Drive(Prototype purchased components: move motor at various velocities); | Drive(Prototype purchased components: move motor at various velocities); | ||

| − | | App(); | + | | App(Decided to use ESP8266 web server implementation); |

Master(Unit tests passed); | Master(Unit tests passed); | ||

| Line 135: | Line 126: | ||

Master(Field-test done without Lidar. Master is sending appropriate data. Drive is having issues steering.); | Master(Field-test done without Lidar. Master is sending appropriate data. Drive is having issues steering.); | ||

| − | GPS( | + | GPS(Found compass and prototyping. Calculated projected heading.); |

| − | Sensors( | + | Sensors(Trying to interface ADC MUX with the ultrasonics. Integrating LIDAR with servo); |

Drive(Done.); | Drive(Done.); | ||

| Line 152: | Line 143: | ||

Drive(Implement a constant-velocity PID, Implement a PID Ramp-up functionality to limit in-rush current); | Drive(Implement a constant-velocity PID, Implement a PID Ramp-up functionality to limit in-rush current); | ||

| − | | App(); | + | | App(Google map data point acquisition, and waypoint plotting); |

| − | Master(); | + | Master(State machine set up, waiting on app); |

| − | GPS(); | + | GPS(Compass prototyping and testing using raw values); |

| − | Sensors(); | + | Sensors(Working on interfacing Ultrasonic sensors with ADC mux. Still integrating LIDAR with the servo properly); |

| − | Drive(); | + | Drive(Completed PID ramp-up, constant-velocity PID incomplete, but drives.); |

|- | |- | ||

! scope="row"| 6 | ! scope="row"| 6 | ||

| Line 173: | Line 164: | ||

Drive(Interface with buttons and headlight); | Drive(Interface with buttons and headlight); | ||

| − | | App(); | + | | App(SD Card Implementation for map data point storage; SD card data point parsing); |

| − | Master(); | + | Master(waiting on app and nav); |

| − | GPS(); | + | GPS(live gps module testing, and risk area assessment, GPS/compass integration); |

| − | Sensors(); | + | Sensors(Cleaning up Ultrasonic readings coming through the ADC mux. Troubleshooting LIDAR inaccuracy); |

| − | Drive(); | + | Drive(Drive Buttons Interfaced. Code refactoring complete.); |

|- | |- | ||

! scope="row"| 7 | ! scope="row"| 7 | ||

| Line 194: | Line 185: | ||

Drive(Full System Test w/ PCB); | Drive(Full System Test w/ PCB); | ||

| − | | App(); | + | | App(Full system integration testing with PCB); |

| − | Master(); | + | Master(Done); |

| − | GPS(); | + | GPS(Full system integration testing with PCB); |

| − | Sensors(); | + | Sensors(LIDAR properly calibrated with accurate readings); |

| − | Drive(); | + | Drive(Code Refactoring complete. No LCD or Headlights mounted on PCB.); |

|- | |- | ||

! scope="row"| 8 | ! scope="row"| 8 | ||

| Line 215: | Line 206: | ||

Drive(Full System Test w/ PCB); | Drive(Full System Test w/ PCB); | ||

| − | | App(); | + | | App(Fine-tuning and full system integration testing with ESP8266 webserver); |

| − | Master(); | + | Master(Done); |

| − | GPS(); | + | GPS(Final Pathfinding Algorithm Field-Testing); |

| − | Sensors(); | + | Sensors(Got Ultrasonic sensors working with ADC mux); |

| − | Drive(); | + | Drive(Missing LCD and Headlights on PCB.); |

|} | |} | ||

| Line 233: | Line 224: | ||

|- | |- | ||

| 1 || SJOne Board || Preet | | 1 || SJOne Board || Preet | ||

| − | || | + | || 5 || $80/board |

|- | |- | ||

| 2 || 1621 RPM HD Premium Gear Motor || [https://www.servocity.com/1621-rpm-hd-premium-planetary-gear-motor-w-encoder Servocity] | | 2 || 1621 RPM HD Premium Gear Motor || [https://www.servocity.com/1621-rpm-hd-premium-planetary-gear-motor-w-encoder Servocity] | ||

| Line 247: | Line 238: | ||

|| 1 || $4.95 | || 1 || $4.95 | ||

|- | |- | ||

| − | | 6 || Power Management IC Development Tools || [https://www.mouser.com/ | + | | 6 || EV-VN7010AJ Power Management IC Development Tools || [https://www.mouser.com/ProductDetail/STMicroelectronics/EV-VN7010AJ/?qs=%2fha2pyFadui%2f8JKnxVd20oimZCtSISg0sxVFYfGGLeUfH4w%252bf16HaA%3d%3d Mouser] |

|| 1 || $8.63 | || 1 || $8.63 | ||

|- | |- | ||

| 7 || eBoot Mini MP1584EN DC-DC Buck Converter || [https://www.amazon.com/eBoot-MP1584EN-Converter-Adjustable-Module/dp/B01MQGMOKI/ref=sr_1_5?ie=UTF8&qid=1511925590&sr=8-5&keywords=6+pack+buck+converter Amazon] | | 7 || eBoot Mini MP1584EN DC-DC Buck Converter || [https://www.amazon.com/eBoot-MP1584EN-Converter-Adjustable-Module/dp/B01MQGMOKI/ref=sr_1_5?ie=UTF8&qid=1511925590&sr=8-5&keywords=6+pack+buck+converter Amazon] | ||

| − | || 1 || $9. | + | || 2 || $9.69 |

| + | |- | ||

| + | | 8 || Garmin Lidar Lite v3 || [https://www.sparkfun.com/products/14032?gclid=Cj0KCQiAyNjRBRCpARIsAPDBnn0GnaqJe6gJ5Gs-IPcdaK7bMNm84HijAJq2pN4yFJr7TMiIbl3-HbkaApdcEALw_wcB SparkFun] | ||

| + | || 1 || $150 | ||

| + | |- | ||

| + | | 9 || ESP8266 || [https://www.amazon.com/HiLetgo-Internet-Development-Wireless-Micropython/dp/B010O1G1ES/ref=pd_lpo_vtph_23_bs_t_1?_encoding=TF8&psc=1&refRID=23NMKFA03J63GW27PXEX Amazon] | ||

| + | || 1 || $8.79 | ||

| + | |- | ||

| + | | 10 || Savox SA1230SG Monster Torque Coreless Steel Gear Digital Servo || [https://www.amazon.com/dp/B008CQI2EA/ref=cm_sw_r_cp_apa_H1foAb2F0ZVZZ Amazon] | ||

| + | || 1 || $77 | ||

| + | |- | ||

| + | | 11 || Lumenier LU-1800-4-35 1800mAh 35c Lipo Battery || [https://www.amazon.com/dp/B00OYQLKEG/ref=cm_sw_r_cp_apa_l4foAb2K21B87 Amazon] | ||

| + | || 1 || $34 | ||

| + | |- | ||

| + | | 12 || Acrylic Board || Tap Plastics | ||

| + | || 2 || $1 | ||

| + | |- | ||

| + | | 13 || Pololu G2 High-Power Motor Driver 24v21 || [https://www.pololu.com/product/2995 Pololu] | ||

| + | || 1 || $40 | ||

| + | |- | ||

| + | | 14 || Hardware Components (standoffs, threaded rods, etc.) || Excess Solutions, Ace | ||

| + | || - || $20 | ||

| + | |- | ||

| + | | 15 || LM7805 Power Regulator || [https://www.mouser.com/productdetail/on-semiconductor-fairchild/lm7805act?qs=sGAEpiMZZMtzPgOfznR9QQTeY9%252bkcWD8 Mouser] | ||

| + | || 1 || $0.90 | ||

| + | |- | ||

| + | | 16 || Triple-axis Accelerometer+Magnetometer (Compass) Board - LSM303 || [https://www.adafruit.com/product/1120 Adafruit] | ||

| + | || 1 || $14.95 | ||

|- | |- | ||

|} | |} | ||

== Design & Implementation == | == Design & Implementation == | ||

| − | |||

=== Hardware Design === | === Hardware Design === | ||

| − | In this section, we provide details on hardware design for each component - power control systems, drive, sensors, and | + | In this section, we provide details on hardware design for each component - power management, drive, sensors, app, and GPS. |

| + | |||

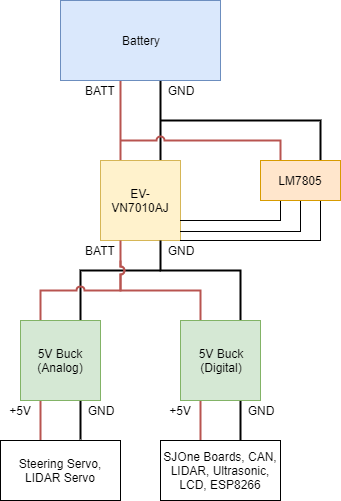

| + | ==== Power Management ==== | ||

| + | [[File:CmpE243 F17 Vindicators100 PowerManagementDiagram.png|center|frame|Block Diagram for whole power management system.]] | ||

| + | We used an EV-VN7010AJ Power Management Board to monitor real-time battery voltage. The power board has a pin which outputs a current proportional to the battery voltage. Connecting a load resistor between this pin and ground gives a smaller voltage proportional to the battery voltage. We read this smaller voltage using the ADC pin on the SJOne Board. | ||

| + | |||

| + | The battery voltage is split into two 5V rails: an analog 5V rail (for the servos) and a digital 5V rail (for the boards, transceivers, LIDAR, ultrasonic sensors, etc.). Two buck converters step the battery voltage down to 5V. A separate rail carries the unregulated battery voltage to the drive system, which applies PWM to obtain its desired voltage level. | ||

| + | |||

| + | There is also an LM7805 regulator which is used just to power some of the power management chip's control signals. | ||

| + | |||

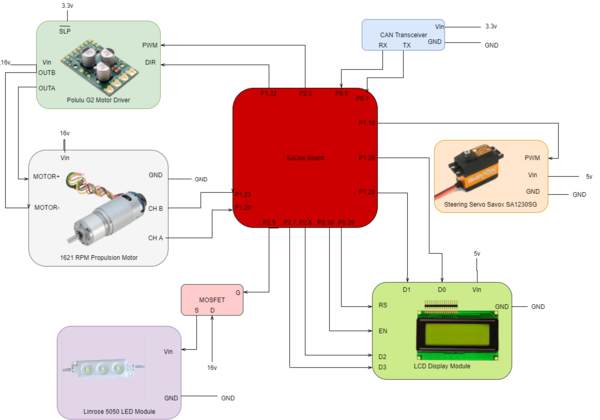

| + | ==== Drive ==== | ||

| + | |||

| + | {| | ||

| + | |Our drive system contains the following hardware components: | ||

| + | * Motor driver | ||

| + | * Propulsion motor | ||

| + | * 5050 LED modules | ||

| + | * Steering servo | ||

| + | * LCD Display | ||

| + | * CAN transceiver | ||

| + | |||

| + | The motor driver is powered by our battery (about 16v). It takes in a PWM signal from the SJOne board. Its control signal, SLP, is active low, so it is tied high to the 3.3v power rail. | ||

| + | The motor driver's two outputs, OUTA and OUTB, drive the propulsion motor, which in turn sends its encoder signals, CH A and CH B, back to the SJOne board. It is also powered by our 16v battery. | ||

| + | |||

| + | The steering servo is controlled by the SJOne board via PWM and requires a 5v input. | ||

| + | |||

| + | The headlights are switched by a MOSFET whose drain is connected to the 16v battery. The gate is driven by a PWM signal from the SJOne board, and the source feeds the LED module's Vin. | ||

| + | |||

| + | The LCD display requires a 5v input and has two control signals, EN and RS. It receives inputs from the SJOne board via GPIO for D0-D3 pins to display items on the LCD screen. | ||

| + | |||

| + | Lastly, as with all other systems, drive communicates with the other boards via CAN. Its CAN transceiver is powered with 3.3v and connected to the SJOne's RX and TX lines on pins <code>P0.0</code> and <code>P0.1</code>. | ||

| + | |||

| + | |[[File:CmpE243 F17 Vindicators100 DriveDiagram.png|thumb|right|600px|<center>Diagram of Drive System</center>]] | ||

| + | |} | ||

| + | |||

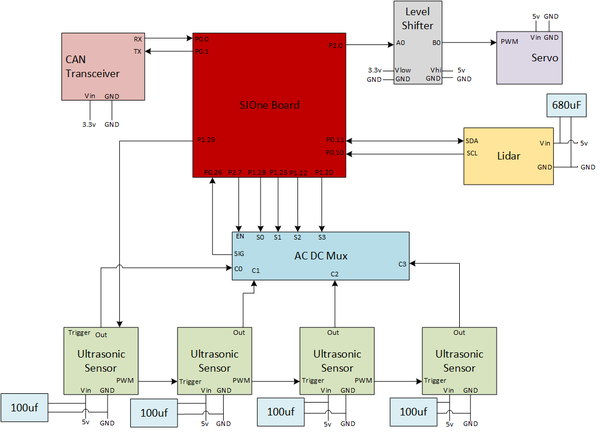

| + | ==== Sensors ==== | ||

| + | |||

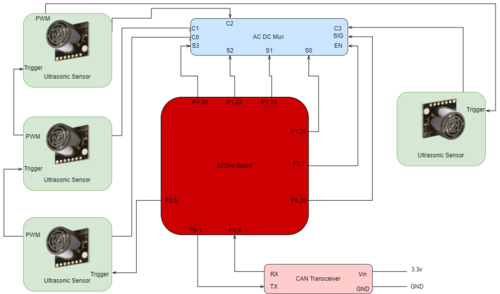

| + | We are using two sets of sensors - a LIDAR and 4 ultrasonic sensors. The ''Sensors System Hardware Design'' provides an overview of the entire sensors system and its connections. | ||

| + | [[File:CmpE243 F17 Vindicators100 SensorSystemDiagram.png|thumb|600px|center|<center>Sensors System Hardware Design</center>]] | ||

| + | |||

| + | ===== LIDAR ===== | ||

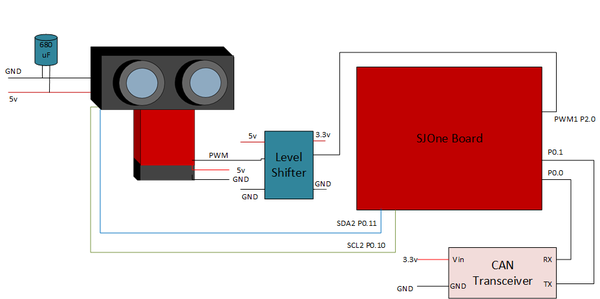

| + | [[File:CmpE243 F17 Vindicators100 LidarSystem.png|thumb|center|600px|<center>LIDAR System Diagram</center>]] | ||

| + | {| | ||

| + | |Our LIDAR system has two main separate hardware components - the LIDAR and the servo. <br>The LIDAR is mounted on the servo to provide a "big-picture" view of the car's surroundings. The ''LIDAR System Diagram'' shows the pin connections between the LIDAR and servo to the SJOne board. <br> The bottom portion in red is our servo and mounted on top is the LIDAR. Both the LIDAR and servo require a 5v input. However, to minimize noise, the servo is powered via the 5v analog power rail and the lidar is powered via the 5v digital power rail. | ||

| + | |||

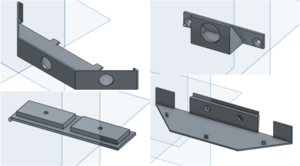

| + | The LIDAR is connected to the SJOne board using the i2c2 pins (P0.10 & P0.11). <br> As per the datasheet's instructions, a 680 uF capacitor was added across the Vcc and GND lines. <br> The servo is driven using the PWM1 pin which is <code>P2.0</code> on the SJOne board. <br><br> A level shifter between the servo and the SJOne board was necessary since the SJOne board outputs a 3.3v signal, but the servo works with a 5v signal. <br> | ||

| + | |||

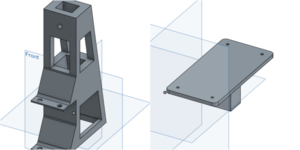

| + | To mount the LIDAR onto the servo, a case that holds the LIDAR and a separate mounting piece were designed and printed using a 3D printer. The ''LIDAR Mount Diagrams'' show the three components for mounting the LIDAR to the servo and then to the car. | ||

| + | |||

| + | |[[File:CmpE243 F17 Vindicators100 LIDARMountComponents.png|thumb|right|400px|<center>LIDAR Mount Diagrams<br>Left: Case for the LIDAR <br>Middle: Connector for the LIDAR and servo <br>Right: Servo to car interface to keep the LIDAR steady</center>]] | ||

| + | |} | ||

| + | |||

| + | ===== Ultrasonic Sensors ===== | ||

| + | |||

| + | {| | ||

| + | |[[File:CmpE243 F17 Vindicators100 UltrasonicSystem.png|thumb|500px|center|<center>Ultrasonic Sensor System</center>]] | ||

| + | |||

| + | |[[File:CmpE243 F17 Vindicators100 UltrasonicMounts.png|thumb|right|<center>CAD Design for Ultrasonic Sensor Mount</center>]] | ||

| + | The car carries four ultrasonic sensors: three on the front and one on the back. The front sensors are laid out with one sensor down the middle and one on each side, angled at either 26.4 or 45 degrees. | ||

| + | |||

| + | The sensors are powered using the 5v digital power rail. In addition to the sensors, an Analog/Digital mux was required to allow us to obtain values for all 4 sensors with the use of one ADC pin from the SJOne board. | ||

| + | |||

| + | All sensors are daisy chained together, with the first sensor triggered by a GPIO pin, <code>P2.5</code>, on the SJOne board. The first sensor outputs a PWM signal shortly after the trigger, and this signal is connected to the trigger of the next sensor, and so on down the chain. Chaining the sensors was necessary to ensure that there is no cross-talk between them. With this configuration, the first sensor takes its measurement about 10ms after the trigger. Once that measurement is complete, the next sensor takes its measurement, and so forth, so only one sensor is active at any given time. | ||

| + | |||

| + | A mount for all the ultrasonic sensors was designed and 3D printed. In the ''CAD Design for Ultrasonic Sensor Mount'' the top right component is used for the rear sensor while the remaining three are combined to create the front holding mount that is attached to the front of our car. | ||

| + | |||

| + | |} | ||

| + | <br> | ||

| + | |||

| + | ==== App ==== | ||

| + | {| | ||

| + | |[[File:CmpE243 F17 Vindicators100 APP.png|thumb|right|500px|<center>ESP8266 Interface with SJOne Board</center>]] | ||

| + | |||

| + | Our application uses an ESP8266 as our bridge for wifi connectivity. The ESP8266 takes an input voltage of 5v which is connected to the 5v digital power rail. The ESP8266's RX is connected to the TXD2 pin on the SJOne board and the TX is connected to the RXD2 on the SJOne board for UART communication. | ||

| + | |||

| + | As with all other components, we are using CAN for communication between the boards, so the app board uses <code>P0.0</code> and <code>P0.1</code>, which are connected to the CAN transceiver's RX and TX pins respectively. | ||

| + | |} | ||

| + | |||

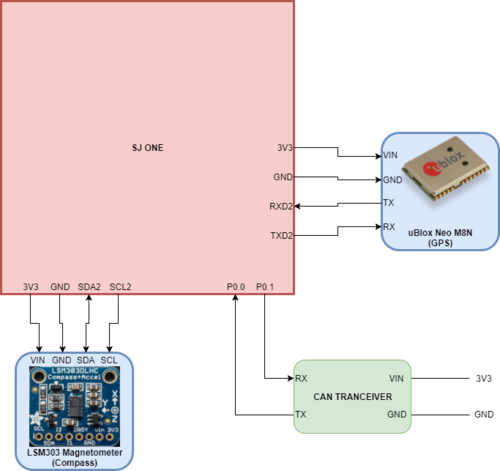

| + | ==== GPS ==== | ||

| + | {| | ||

| + | |[[File:CmpE243 F17 Vindicators100 Navigation.png|center|thumb|500px|<center>Compass and GPS Interface with SJOne Board</center>]] | ||

| + | |||

| + | |Our GPS system uses the following: | ||

| + | * uBlox Neo M8N for GPS | ||

| + | * LSM303 Magnetometer for our compass | ||

| + | |||

| + | The uBlox Neo uses UART for communication, so its TX is connected to the SJOne's RXD2 pin and its RX is connected to the TXD2 pin. The module is powered directly from the SJOne board using the onboard 3.3v output pin, which has been connected to a 3.3v power rail to supply power to other modules. | ||

| + | |||

| + | The LSM303 magnetometer module uses i2c for communication. The SDA is connected to the SJOne's SDA2 pin and the SCL is connected to the SJOne's SCL2 pin. The LSM303 also needs 3.3v to be powered and it is also connected to the 3.3v power rail. | ||

| + | [[File:CmpE243 F17 Vindicators100 TowerAndPlatformCAD.png|thumb|300px|<center>Left: CAD of our tower that holds the platform <br> Right: Platform to place our nav components on</center>]] | ||

| + | |} | ||

| + | <br> | ||

| − | === | + | ==== PCB Design ==== |

| + | {| | ||

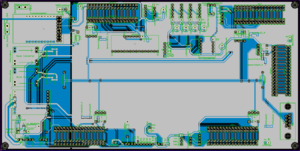

| + | |Once we finalized all the separate systems, we were able to design our PCB. | ||

| + | During this process, we collected all of the circuit diagrams and the necessary pinouts from each group to decide where to place each of the components. | ||

| − | + | In addition, we added extra pins where necessary in order to test each component easily. | |

| + | For example, we provided two sets of SJOne pin connections - one for the ribbon cable that connects to the SJOne board and one extra set of pins that gives us access to the same SJOne pins. This lets us reach the pins without having to disconnect the SJOne board from the PCB, so we can attach different debugging tools to them. It also provides a more accurate testing environment since we can test directly on the PCB. | ||

| − | + | |[[File:CmpE243 F17 Vindicators100 PCBTraceImage.PNG|thumb|300px|<center>Image of our PCB trace</center>]] | |

| + | |} | ||

=== Hardware Interface === | === Hardware Interface === | ||

| − | In this section, | + | In this section we provide details on the hardware interfaces used for each system. |

| + | |||

| + | ==== Power Management ==== | ||

| + | |||

| + | The EV-VN7010AJ has a very basic control mechanism. It has an output enable pin, a MultiSense enable pin, and MultiSense multiplexer select lines. Each of the necessary pins is either permanently tied to +5V or GND, or connected to a GPIO pin on the SJOne Board. The MultiSense pin outputs information about the current battery voltage and is simply connected to an ADC pin on the SJOne board. | ||
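The conversion from an ADC reading on the MultiSense pin to a battery voltage could look roughly like the sketch below. It assumes a 12-bit ADC with a 3.3 V reference, and the name <code>battery_volts</code> and the <code>sense_scale</code> calibration factor are hypothetical; the real scale depends on the load resistor and the chip's sense ratio, neither of which is given here.

```cpp
#include <cstdint>

// Assumptions (not from the original write-up): 12-bit ADC, 3.3 V reference.
constexpr double kAdcMaxCounts = 4095.0;
constexpr double kAdcReferenceVolts = 3.3;

// Convert raw ADC counts into an estimated battery voltage.
// sense_scale is a hypothetical calibration factor: battery volts per volt
// measured across the MultiSense load resistor.
double battery_volts(uint16_t adc_counts, double sense_scale) {
    const double sense_volts = (adc_counts / kAdcMaxCounts) * kAdcReferenceVolts;
    return sense_volts * sense_scale;
}
```

In practice the scale factor would be found by comparing ADC readings against a multimeter measurement of the battery.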

| + | |||

| + | ==== Drive ==== | ||

| + | The drive system comprises two main parts: the motor driver with the propulsion motor, and the steering servo. We provide information about each component's hardware interface. | ||

| + | |||

| + | ===== Motor Driver & Propulsion Motor ===== | ||

| + | The motor driver is used to control the propulsion motor via OUTA and OUTB. It is driven by the SJOne board using a PWM signal connected to pin <code>P2.0</code>. The PWM frequency is set to 20 kHz (20,000 Hz). | ||

| + | |||

| + | ===== Steering Servo ===== | ||

| + | Our steering servo is driven via a PWM signal as well. The PWM frequency is set to 50 Hz. However, since we are already using a PWM signal for the motor, we used a repetitive interrupt timer (RIT) to manually generate a PWM signal using a GPIO pin for the steering servo. | ||

| + | The RIT had to be modified to let us count in microseconds (us), since the original only counts in ms. | ||

| + | To do this, we added an additional parameter <code>uint32_t time</code>. | ||

| + | The prefix and time values are used to calculate our compare value, which determines how often the callback function is triggered. | ||

| + | Since we wanted our function to trigger every 50 us, we need to calculate how many CPU clock cycles must elapse before the callback is triggered. | ||

| + | |||

| + | For example, to trigger our callback every 50 us, our prefix is 1000000 (the number of us in 1 second) and our time is 50 (us). | ||

| + | Dividing the prefix by the time gives the number of 50 us intervals in 1 second, which is 20000. | ||

| + | Dividing our system clock (48 MHz) by this number gives the number of CPU cycles to wait before the callback is triggered: 48000000 / 20000 = 2400. | ||

| + | |||

| + | <syntaxhighlight language="cpp"> | ||

| + | // function prototype | ||

| + | void rit_enable(void_func_t function, uint32_t time, uint32_t prefix); | ||

| + | // Enable RIT - function, time, prefix | ||

| + | rit_enable(RIT_PWM, RIT_INCREMENT_US, MICROSECONDS); | ||

| + | // modified calculation | ||

| + | LPC_RIT->RICOMPVAL = sys_get_cpu_clock() / (prefix / time);</syntaxhighlight> | ||
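As a quick check of the arithmetic, the compare-value calculation can be written as a standalone function. This is only a sketch: <code>rit_compare_value</code> is a hypothetical helper name, and the CPU clock is passed in as a parameter instead of being read from <code>sys_get_cpu_clock()</code>.

```cpp
#include <cstdint>

// Number of CPU cycles between RIT callbacks, mirroring the modified
// calculation: cpu_clock / (prefix / time).
// prefix = time units per second (1000000 for us, 1000 for ms).
uint32_t rit_compare_value(uint32_t cpu_clock_hz, uint32_t time, uint32_t prefix) {
    const uint32_t callbacks_per_second = prefix / time;
    return cpu_clock_hz / callbacks_per_second;
}
```

With a 48 MHz clock, a time of 50 and a prefix of 1000000, this yields 2400 cycles per callback.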

| + | |||

| + | We used pin <code>P1.19</code> as a GPIO output pin to generate our PWM signal. Our RIT callback drives this pin high or low. | ||

| + | The algorithm to generate the signal is as follows: | ||

| + | # Initialize a static counter to 0 | ||

| + | # Increment by 50 on each callback (we chose 50 us so as not to eat up too much CPU time) | ||

| + | # Check if counter is greater than or equal to our period (20,000 us for a 50 Hz signal) | ||

| + | ## Assert GPIO pin high | ||

| + | ## Reset count | ||

| + | # Check if counter is greater than or equal to our target | ||

| + | ## Bring GPIO pin low | ||
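The steps above can be sketched as a small state machine that is advanced once per RIT callback. This is an illustrative model only: the class name <code>SoftPwm</code> is hypothetical, and the pin level is stored in a boolean instead of being written to <code>P1.19</code>.

```cpp
#include <cstdint>

// Each RIT callback represents 50 us of elapsed time.
constexpr uint32_t kTickUs = 50;

// Hypothetical model of the software PWM generator described above.
class SoftPwm {
public:
    SoftPwm(uint32_t period_us, uint32_t target_us)
        : period_us_(period_us), target_us_(target_us),
          counter_us_(0), pin_high_(false) {}

    // Call once per RIT callback.
    void tick() {
        counter_us_ += kTickUs;
        if (counter_us_ >= period_us_) {  // period elapsed: start a new pulse
            pin_high_ = true;
            counter_us_ = 0;
        }
        if (counter_us_ >= target_us_) {  // pulse width reached: end the pulse
            pin_high_ = false;
        }
    }

    bool pin_high() const { return pin_high_; }

private:
    uint32_t period_us_;
    uint32_t target_us_;
    uint32_t counter_us_;
    bool pin_high_;
};
```

With a period of 20,000 us and a target of 1,500 us, the pin stays high for 30 callbacks out of every 400, which is a 1.5 ms pulse at 50 Hz.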

| + | '''NOTE''' Our target has to be in terms of us since all of our calculations are in us. We get the target for our RIT by obtaining a target angle from control. We use a mapping function to generate our target in us from the desired angle. The initial values for the mapping function were found by driving our steering servo with a function generator. | ||

| + | * We found the min and max pulse widths in ms and converted them to us. | ||

| + | * We then used these min and max values to move the wheels and mark it at each extreme on a sheet of paper. | ||

| + | * Using the marks, we calculated the angle and used the midpoint of the angle as 0°. That means that the minimum is a negative angle value and maximum is a positive angle value. | ||

| + | |||

| + | We were then able to generate a simple mapping function to map angles in degrees to us using: | ||

| + | <code>(uint32_t) (((angle - MIN_STEERING_DEGREES) * OUTPUT_RANGE / INPUT_RANGE) + MIN_MICRO_SEC_STEERING);</code> | ||
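A self-contained version of this mapping might look like the sketch below. The ±23 degree limits match the <code>steer_angle</code> range in our DBC file, but the 1000 us and 2000 us pulse widths are placeholder assumptions, not our measured values, and <code>steering_angle_to_us</code> is a hypothetical function name.

```cpp
#include <cstdint>

// Steering limits in degrees (matching the DBC steer_angle range).
constexpr float MIN_STEERING_DEGREES = -23.0f;
constexpr float MAX_STEERING_DEGREES = 23.0f;
// Placeholder pulse widths in us; the real values come from calibration.
constexpr float MIN_MICRO_SEC_STEERING = 1000.0f;
constexpr float MAX_MICRO_SEC_STEERING = 2000.0f;

constexpr float INPUT_RANGE = MAX_STEERING_DEGREES - MIN_STEERING_DEGREES;
constexpr float OUTPUT_RANGE = MAX_MICRO_SEC_STEERING - MIN_MICRO_SEC_STEERING;

// Linearly map a steering angle in degrees to a pulse width in us.
uint32_t steering_angle_to_us(float angle) {
    return (uint32_t) (((angle - MIN_STEERING_DEGREES) * OUTPUT_RANGE / INPUT_RANGE)
                       + MIN_MICRO_SEC_STEERING);
}
```

Under these placeholder values, 0 degrees maps to the midpoint pulse width of 1500 us.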

| + | |||

| + | ===== LCD Screen ===== | ||

| + | |||

| + | The LCD screen was interfaced with the SJOne board using a variety of GPIO pins for data (D0, D1, D2, D3) and to enable the screen (EN). | ||

| + | |||

| + | The pins used are P1.29, P1.28, P2.6, P2.7, P0.29, and P0.30 for D0, D1, D2, D3, RS, and EN respectively. | ||

| + | |||

| + | The driver for the LCD screen does the following: | ||

| + | * Initialize LCD screen and GPIO pins used for the screen | ||

| + | <syntaxhighlight language="cpp"> | ||

| + | commandWrite(0x02); //Four-bit Mode? | ||

| + | commandWrite(0x0F); //Display On, Underline Blink Cursor | ||

| + | commandWrite(0x28); //Initialize LCD to 4-bit mode, 2-line 5x7 Dot Matrix | ||

| + | commandWrite(0x01); //Clear Display | ||

| + | commandWrite(0x80); //Move Cursor to beginning of first line | ||

| + | </syntaxhighlight> | ||

| + | * To write to the LCD screen we send a command to write to a specific line | ||

| + | ** <code>0x80</code> for line 1 | ||

| + | ** <code>0xc0</code> for line 2 | ||

| + | ** <code>0x93</code> for line 3 | ||

| + | ** <code>0xd4</code> for line 4 | ||

| + | * Write each character to the LCD screen | ||

| + | ** Write each nibble of the data byte by writing out its value using the 4 GPIO pins to output high or low | ||

| + | <syntaxhighlight language="cpp"> | ||

| + | ((byte >> 0x00) & 0x01) ? lcd_d0.set(true) : lcd_d0.set(false); | ||

| + | ((byte >> 0x01) & 0x01) ? lcd_d1.set(true) : lcd_d1.set(false); | ||

| + | ((byte >> 0x02) & 0x01) ? lcd_d2.set(true) : lcd_d2.set(false); | ||

| + | ((byte >> 0x03) & 0x01) ? lcd_d3.set(true) : lcd_d3.set(false); | ||

| + | </syntaxhighlight> | ||
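To make the nibble handling concrete, here is a minimal sketch of how a data byte can be split before being driven onto D0-D3. It assumes an HD44780-compatible controller, which in 4-bit mode conventionally takes the upper nibble first; <code>split_into_nibbles</code> and <code>data_line_level</code> are hypothetical helper names, not part of our driver.

```cpp
#include <cstdint>

// The two 4-bit halves of a byte, in the order they would be sent.
struct Nibbles {
    uint8_t high;  // sent first (assuming HD44780-style 4-bit mode)
    uint8_t low;   // sent second
};

Nibbles split_into_nibbles(uint8_t byte) {
    Nibbles n;
    n.high = (byte >> 4) & 0x0F;
    n.low = byte & 0x0F;
    return n;
}

// Level to drive on data line D<line> (0..3) for a given nibble,
// equivalent to the ((byte >> n) & 0x01) checks shown above.
bool data_line_level(uint8_t nibble, unsigned line) {
    return ((nibble >> line) & 0x01) != 0;
}
```

For example, the command <code>0x28</code> would be sent as nibble <code>0x2</code> followed by nibble <code>0x8</code>.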

| + | |||

| + | ===== Headlights ===== | ||

| + | |||

| + | Our driver for the headlights uses pin <code>P2.5</code> to generate a PWM signal to turn our headlights on or off. We are using the same frequency as our motor driver (20 kHz) since we can only have one PWM frequency for all PWM pins on our SJOne board. | ||

| + | |||

| + | ==== Sensors ==== | ||

| + | ===== Ultrasonic Sensor Interface ===== | ||

| + | |||

| + | There are two mounting brackets for our ultrasonic sensors: one angled at 26.4 degrees and another at 45 degrees. The mounting brace on the front bumper of the autonomous car allows us to install either bracket based on our desired functionality. The ultrasonic sensors are also interfaced with an ADC mux because the SJOne board does not have enough ADC pins to process our sensors individually. | ||

| + | |||

| + | ===== LIDAR and Servo Interface ===== | ||

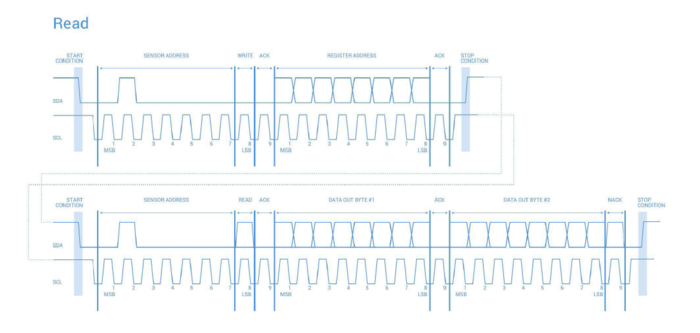

| + | The LIDAR communicates via i2c. Typically, a register read consists of a write followed by a read: the driver writes the device address and register, then issues a repeated start before reading. However, the LIDAR expects a stop signal and does not respond to a repeated start, as shown in the ''LIDAR Read Timing Diagram''. | ||

| + | |||

| + | [[File:CmpE243 F17 Vindicators100 lidarread.PNG|left|thumb|700px|LIDAR Read Timing Diagram]] | ||

| + | |||

| + | Thus, we utilized a modified version of the i2c driver that does two completely separate write and read operations. This provides the proper format expected by the lidar. | ||

| + | |||

| + | The LIDAR driver is as follows: | ||

| + | * Begin with initialization | ||

| + | <syntaxhighlight lang="c">lidar_i2c2 = &I2C2::getInstance(); | ||

| + | lidar_i2c2->writeReg((DEVICE_ADDR << 1), ACQ_COMMAND, 0x00); | ||

| + | lidar_servo = new PWM(PWM::pwm1, SERVO_FREQUENCY); </syntaxhighlight> | ||

| + | * Initialize the lidar to its default configuration | ||

| + | <syntaxhighlight lang="cpp"> // set configuration to default for balanced performance | ||

| + | lidar_i2c2->writeReg((DEVICE_ADDR << 1), 0x02, 0x80); | ||

| + | lidar_i2c2->writeReg((DEVICE_ADDR << 1), 0x04, 0x08); | ||

| + | lidar_i2c2->writeReg((DEVICE_ADDR << 1), 0x1c, 0x00);</syntaxhighlight> | ||

| + | * Write the value <code>0x04</code> to register <code>0x00</code> via i2c to indicate a measurement is to be taken | ||

| + | * Poll the status register <code>0x01</code> until the least significant bit (the busy flag) is cleared | ||

| + | * Send the register address <code>0x8f</code> where the measurement is stored | ||

| + | * Read the value sent back from the lidar and store it in an array | ||

| + | * Shift the contents of index 0 left by 1 byte and OR it with the contents of index 1 | ||

| + | <syntaxhighlight lang="c">return (bytes[0] << 8) | bytes[1];</syntaxhighlight> | ||
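The measurement sequence as a whole can be sketched against a generic bus interface. Everything about the interface here is an assumption for illustration: <code>write_reg</code>, <code>read_reg</code>, and <code>read_bytes</code> are hypothetical methods, not the SJOne I2C2 API, and <code>FakeBus</code> is a stand-in so the sketch runs without hardware. The sketch also assumes that bit 0 of the status register is a busy flag that reads 0 once the measurement is ready.

```cpp
#include <cstdint>

constexpr uint8_t ACQ_COMMAND = 0x00;       // acquisition command register
constexpr uint8_t STATUS_REG = 0x01;        // bit 0 assumed to be the busy flag
constexpr uint8_t DISTANCE_REG = 0x8f;      // start of the two distance bytes
constexpr uint8_t TAKE_MEASUREMENT = 0x04;  // value that starts a measurement

// Runs one measurement cycle. Returns false if the device stays busy.
template <typename Bus>
bool lidar_read_cm(Bus& bus, uint16_t& distance_cm, int max_polls = 100) {
    bus.write_reg(ACQ_COMMAND, TAKE_MEASUREMENT);
    int polls = 0;
    while (bus.read_reg(STATUS_REG) & 0x01) {  // wait while busy
        if (++polls >= max_polls) return false;
    }
    uint8_t bytes[2] = {0, 0};
    bus.read_bytes(DISTANCE_REG, bytes, 2);
    distance_cm = static_cast<uint16_t>((bytes[0] << 8) | bytes[1]);
    return true;
}

// Stand-in bus used only to exercise the sketch without hardware.
struct FakeBus {
    int busy_reads_left;  // how many status reads report "busy"
    uint8_t high;         // high byte of the simulated distance
    uint8_t low;          // low byte of the simulated distance

    void write_reg(uint8_t, uint8_t) {}
    uint8_t read_reg(uint8_t) { return busy_reads_left-- > 0 ? 0x01 : 0x00; }
    void read_bytes(uint8_t, uint8_t* out, int n) {
        if (n >= 2) { out[0] = high; out[1] = low; }
    }
};
```

The poll limit is an addition for the sketch, so that a missing or stuck device cannot block the caller forever.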

| + | |||

| + | The driver for the servo simply uses the PWM functions from the library provided to us to initialize the PWM signal to our desired frequency. In our case, we used a 50Hz signal. We also used the <code>set(dutyCycle)</code> function to set the PWM pin to output the desired pattern based on the duty cycles. | ||

| + | |||

| + | ==== GPS ==== | ||

| + | |||

| + | The GPS (uBlox NEO M8N) uses UART to send GPS information. We've disabled all messages except for $GNGLL, which gives us the current coordinates of our car. The GPS then sends parseable lines of text over its TX pin that contain the latitude, longitude, and UTC time. The SJOne board receives this text via RXD2 (UART2). The latitude and longitude are given in degrees and minutes in the format dddmm.mmmmmm, where d is the degrees and m is the minutes. To convert a value to decimal degrees, we divide it by one hundred to separate the whole degrees (ddd) from the minutes (mm.mmmmmm), divide the minutes by 60 to get their equivalent in degrees, and add the result back to the whole degrees. | ||
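The degrees-and-minutes conversion can be sketched as follows. The function name <code>nmea_to_degrees</code> is hypothetical, and the sketch handles only the magnitude; applying a negative sign for the southern or western hemisphere is left out.

```cpp
#include <cmath>

// Convert a dddmm.mmmmmm value (degrees and minutes) to decimal degrees.
double nmea_to_degrees(double dddmm) {
    const double degrees = std::floor(dddmm / 100.0);  // whole degrees (ddd)
    const double minutes = dddmm - degrees * 100.0;    // remaining mm.mmmmmm
    return degrees + minutes / 60.0;                   // 60 minutes per degree
}
```

For example, a reading of 12130.0 (121 degrees, 30 minutes) converts to 121.5 degrees.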

| + | |||

| + | The GPS can be configured by sending configuration values over its RX pin. To do this, we send configurations over the SJOne's TXD2 pin and save the configuration by sending a save command to the GPS. | ||

| + | |||

| + | ==== Compass ==== | ||

| + | |||

| + | We made a compass using the LSM303 magnetometer. To do this, we first had to configure the mode register to allow for continuous reads. We also had to set the gain by modifying the second configuration register. The compass can then be polled at 10Hz to read the X, Y, and Z values, which together occupy six 1-byte registers. From this, we calculate the heading as <code>atan2(Y, X)</code>. We chose to neglect the Z axis entirely, as we found that it made very little difference for our project. | ||
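A minimal sketch of the heading calculation is shown below. It assumes the X and Y readings have already been assembled from their registers, and it normalizes the result to the range 0 to 360 degrees to line up with the 0-359 degree heading signals in our DBC file. The name <code>heading_degrees</code> is hypothetical, and no calibration or tilt compensation is applied.

```cpp
#include <cmath>

// Heading in degrees from the magnetometer X and Y readings (Z is ignored).
double heading_degrees(double x, double y) {
    const double kPi = 3.14159265358979323846;
    double degrees = std::atan2(y, x) * 180.0 / kPi;  // range -180..180
    if (degrees < 0.0) {
        degrees += 360.0;  // shift negative angles into 0..360
    }
    return degrees;
}
```

Which physical direction corresponds to 0 degrees depends on how the magnetometer is mounted on the car.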

=== Software Design === | === Software Design === | ||

| − | + | In this section we provide details on our software design for each of the systems. We also delve into our DBC file and the messages sent via CAN bus. | |

| + | ==== CAN Communication ==== | ||

243.dbc | 243.dbc | ||

| − | + | <syntaxhighlight lang="c">VERSION "" | |

| − | + | ||

| − | + | NS_ : | |

| − | + | BA_ | |

| − | + | BA_DEF_ | |

| − | + | BA_DEF_DEF_ | |

| − | + | BA_DEF_DEF_REL_ | |

| − | + | BA_DEF_REL_ | |

| − | + | BA_DEF_SGTYPE_ | |

| − | + | BA_REL_ | |

| − | + | BA_SGTYPE_ | |

| − | + | BO_TX_BU_ | |

| − | + | BU_BO_REL_ | |

| − | + | BU_EV_REL_ | |

| − | + | BU_SG_REL_ | |

| − | + | CAT_ | |

| − | + | CAT_DEF_ | |

| − | + | CM_ | |

| − | + | ENVVAR_DATA_ | |

| − | + | EV_DATA_ | |

| − | + | FILTER | |

| − | + | NS_DESC_ | |

| − | + | SGTYPE_ | |

| − | + | SGTYPE_VAL_ | |

| − | + | SG_MUL_VAL_ | |

| − | + | SIGTYPE_VALTYPE_ | |

| − | + | SIG_GROUP_ | |

| − | + | SIG_TYPE_REF_ | |

| − | + | SIG_VALTYPE_ | |

| − | + | VAL_ | |

| − | + | VAL_TABLE_ | |

| − | + | ||

| − | + | BS_: | |

| − | + | ||

| − | + | BU_: DBG SENSORS CONTROL_UNIT DRIVE APP NAV | |

| − | + | ||

| − | + | BO_ 100 COMMAND: 1 DBG | |

| − | + | SG_ ENABLE : 0|1@1+ (1,0) [0|1] "" DBG | |

| − | + | ||

| − | BO_ | + | BO_ 200 ULTRASONIC_SENSORS: 6 SENSORS |

| − | + | SG_ front_sensor1 : 0|12@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP,DRIVE | |

| − | + | SG_ front_sensor2 : 12|12@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP,DRIVE | |

| − | BO_ 123 DRIVE_CMD: 3 CONTROL_UNIT | + | SG_ front_sensor3 : 24|12@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP,DRIVE |

| − | + | SG_ back_sensor1 : 36|12@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP,DRIVE | |

| − | + | ||

| − | + | BO_ 101 LIDAR_DATA1: 7 SENSORS | |

| − | + | SG_ lidar_data1 : 0|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar_data2 : 11|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar_data3 : 22|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar_data4 : 33|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar_data5 : 44|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | ||

| − | + | BO_ 102 LIDAR_DATA2: 6 SENSORS | |

| − | + | SG_ lidar2_data6 : 0|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar2_data7 : 11|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar2_data8 : 22|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | SG_ lidar2_data9 : 33|11@1+ (1,0) [0|0] "cm" CONTROL_UNIT,APP | |

| − | + | ||

| − | + | BO_ 123 DRIVE_CMD: 3 CONTROL_UNIT | |

| − | + | SG_ steer_angle : 0|9@1- (1,0) [-23|23] "degrees" DRIVE,APP | |

| − | + | SG_ speed : 9|6@1+ (1,0) [0|20] "mph" DRIVE,APP | |

| − | + | SG_ direction : 15|1@1+ (1,0) [0|1] "" DRIVE,APP | |

| − | + | SG_ headlights : 16|1@1+ (1,0) [0|1] "" DRIVE,APP | |

| − | + | ||

| − | + | BO_ 122 SENSOR_CMD: 1 CONTROL_UNIT | |

| − | BO_ | + | SG_ lidar_zero : 0|8@1- (1,0) [-90|90] "degrees" SENSORS,APP |

| − | + | ||

| − | + | BO_ 121 GPS_POS: 8 NAV | |

| − | + | SG_ latitude : 0|32@1+ (0.000001,-90) [-90|90] "degrees" APP | |

| − | + | SG_ longitude : 32|32@1+ (0.000001,-180) [-180|180] "degrees" APP | |

| − | + | ||

| − | + | BO_ 120 GPS_STOP: 1 NAV | |

| − | + | SG_ stop : 0|1@1+ (1,0) [0|1] "" APP,CONTROL_UNIT | |

| − | + | ||

| − | + | BO_ 119 GPS_STOP_ACK: 1 CONTROL_UNIT | |

| − | + | SG_ stop_ack : 0|1@1+ (1,0) [0|1] "" APP,NAV | |

| − | + | ||

| − | + | BO_ 148 LIGHT_SENSOR_VALUE: 1 SENSORS | |

| − | + | SG_ light_value : 0|8@1+ (1,0) [0|100] "%" CONTROL_UNIT,APP | |

| − | + | ||

| − | + | BO_ 146 GPS_HEADING: 3 NAV | |

| − | + | SG_ current : 0|9@1+ (1,0) [0|359] "degrees" APP,CONTROL_UNIT | |

| − | + | SG_ projected : 10|9@1+ (1,0) [0|359] "degrees" APP,CONTROL_UNIT | |

| − | + | ||

| − | + | BO_ 124 DRIVE_FEEDBACK: 1 DRIVE | |

| − | + | SG_ velocity : 0|6@1+ (0.1,0) [0|0] "mph" CONTROL_UNIT,APP | |

| − | + | SG_ direction : 6|1@1+ (1,0) [0|1] "" CONTROL_UNIT,APP | |

| − | + | ||

| − | + | BO_ 243 APP_DESTINATION: 8 APP | |

| − | + | SG_ latitude : 0|32@1+ (0.000001,-90) [-90|90] "degrees" NAV | |

| − | + | SG_ longitude : 32|32@1+ (0.000001,-180) [-180|180] "degrees" NAV | |

| + | |||

| + | BO_ 244 APP_DEST_ACK: 1 NAV | ||

| + | SG_ acked : 0|1@1+ (1,0) [0|1] "" APP | ||

| + | |||

| + | BO_ 245 APP_WAYPOINT: 2 APP | ||

| + | SG_ index : 0|7@1+ (1,0) [0|0] "" NAV | ||

| + | SG_ node : 7|7@1+ (1,0) [0|0] "" NAV | ||

| + | |||

| + | BO_ 246 APP_WAYPOINT_ACK: 1 NAV | ||

| + | SG_ index : 0|7@1+ (1,0) [0|0] "" APP | ||

| + | SG_ acked : 7|1@1+ (1,0) [0|1] "" APP | ||

| + | |||

| + | BO_ 0 APP_SIG_STOP: 1 APP | ||

| + | SG_ APP_SAYS_stop : 0|8@1+ (1,0) [0|1] "" CONTROL_UNIT | ||

| + | |||

| + | BO_ 2 APP_SIG_START: 1 APP | ||

| + | SG_ APP_SAYS_start : 0|8@1+ (1,0) [0|1] "" CONTROL_UNIT | ||

| + | |||

| + | BO_ 1 STOP_SIG_ACK: 1 CONTROL_UNIT | ||

| + | SG_ control_received_stop : 0|8@1+ (1,0) [0|1] "" APP | ||

| + | |||

| + | BO_ 3 START_SIG_ACK: 1 CONTROL_UNIT | ||

| + | SG_ control_received_start : 0|8@1+ (1,0) [0|1] "" APP | ||

| + | |||

| + | BO_ 69 BATT_INFO: 2 SENSORS | ||

| + | SG_ BATT_VOLTAGE : 0|8@1+ (0.1,0) [0.0|25.5] "V" APP,DRIVE | ||

| + | SG_ BATT_PERCENT : 8|7@1+ (1,0) [0|100] "%" APP,DRIVE | ||

| + | |||

| + | CM_ BU_ DBG "Debugging entity"; | ||

| + | CM_ BU_ DRIVE "Drive System"; | ||

| + | CM_ BU_ SENSORS "Sensor Suite"; | ||

| + | CM_ BU_ APP "Communication to mobile app"; | ||

| + | CM_ BU_ CONTROL_UNIT "Central command board"; | ||

| + | CM_ BU_ NAV "GPS and compass"; | ||

| + | |||

| + | BA_DEF_ "BusType" STRING ; | ||

| + | BA_DEF_ BO_ "GenMsgCycleTime" INT 0 0; | ||

| + | BA_DEF_ SG_ "FieldType" STRING ; | ||

| + | |||

| + | BA_DEF_DEF_ "BusType" "CAN"; | ||

| + | BA_DEF_DEF_ "FieldType" ""; | ||

| + | BA_DEF_DEF_ "GenMsgCycleTime" 0; | ||

| + | |||

| + | BA_ "GenMsgCycleTime" BO_ 256 10; | ||

| + | BA_ "GenMsgCycleTime" BO_ 512 10; | ||

| + | BA_ "GenMsgCycleTime" BO_ 768 500; | ||

| + | BA_ "GenMsgCycleTime" BO_ 1024 100; | ||

| + | BA_ "GenMsgCycleTime" BO_ 1280 1000; | ||

| + | </syntaxhighlight> | ||
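
To make the bit layout in the DBC concrete, here is a minimal Python sketch that packs and unpacks the DRIVE_CMD message (ID 123) by hand. It assumes the usual DBC meaning of `@1` (little-endian, start bit is the least significant bit) and of `-` (signed, two's complement). The function names are hypothetical and are not part of the team's generated code.

```python
def pack_drive_cmd(steer_angle, speed, direction, headlights):
    """Pack DRIVE_CMD into 3 bytes following the DBC bit positions."""
    if not -23 <= steer_angle <= 23:
        raise ValueError("steer_angle outside [-23, 23] degrees")
    if not 0 <= speed <= 20:
        raise ValueError("speed outside [0, 20] mph")
    raw = steer_angle & 0x1FF           # bits 0-8, 9-bit two's complement
    raw |= (speed & 0x3F) << 9          # bits 9-14
    raw |= (direction & 0x1) << 15      # bit 15
    raw |= (headlights & 0x1) << 16     # bit 16
    return raw.to_bytes(3, "little")


def unpack_drive_cmd(data):
    """Reverse of pack_drive_cmd: recover the four signals from 3 bytes."""
    raw = int.from_bytes(data, "little")
    steer = raw & 0x1FF
    if steer & 0x100:                   # sign bit of the 9-bit field is set
        steer -= 0x200
    return {
        "steer_angle": steer,
        "speed": (raw >> 9) & 0x3F,
        "direction": (raw >> 15) & 0x1,
        "headlights": (raw >> 16) & 0x1,
    }
```

Packing a value and unpacking it again should return the original signals, which is a quick way to sanity-check a layout before relying on generated code.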

| + | |||

| + | ==== Power Management ==== | ||

| + | The MultiSense pin on the EV-VN7010AJ board outputs real-time voltage information. It's connected to an ADC pin on the SJOne Board. The ADC reading is converted in software from a 0-4095 range to the actual voltage. | ||
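
The conversion described above can be sketched as follows. The 0-4095 range comes from the text. The 3.3 V reference and the scale factor between the sensed pin voltage and the battery voltage are assumptions for illustration only, since the actual load resistor and sense ratio are not given here.

```python
ADC_MAX = 4095        # 12-bit ADC range stated in the text
ADC_VREF = 3.3        # assumed ADC reference voltage in volts
SENSE_SCALE = 8.0     # hypothetical battery-voltage / pin-voltage ratio


def adc_to_battery_voltage(reading, vref=ADC_VREF, scale=SENSE_SCALE):
    """Convert a raw ADC reading to an estimated battery voltage."""
    if not 0 <= reading <= ADC_MAX:
        raise ValueError("ADC reading outside the 0-4095 range")
    pin_voltage = reading / ADC_MAX * vref   # voltage seen at the ADC pin
    return pin_voltage * scale               # scale back up to battery volts
```

In practice the scale factor would be calibrated against a multimeter reading of the battery rather than taken from a nominal value.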

| + | |||

| + | ==== Drive ==== | ||

| + | |||

| + | The drive software is separated into 3 main sections: period callbacks, drive methods, and LCD library. The period callback file contains the drive controllers, LCD interface, and message handling. The CAN messages are decoded in the 100 Hz task to feed the desired target values into the PID propulsion controller and the servo steering controller. In addition, the control unit CAN message controls the on and off state of the headlights. The drive methods files contain all functions, classes, and defines necessary for the drive controllers, for example unit conversion factors and unit conversion functions. The LCD library contains the LCD display driver and high-level functions to interface with a 4x20 LCD display in 4-bit mode. | ||

| + | |||

| + | ==== Sensors ==== | ||

| + | |||

| + | ===== Ultrasonic Sensors ===== | ||

| + | |||

| + | For our software design, we set our TRIGGER pin to high to initiate the first ultrasonic to begin sampling. The ultrasonic sensor trigger requires a high for at least 20us and needs to be pulled low before the next sampling cycle. We chose to put a vTaskDelay(10) before pulling the trigger pin low. After pulling the pin low, we wait for an additional 50ms for the ultrasonic sensor to finish sampling and output the correct values for the latest reading. | ||

| + | Because we have an ADC mux, we have two GPIOs responsible for selecting which ultrasonic sensor we are reading from. Since we have 4 sensors, we cycle the select lines from 00 to 11 and read from the ADC pin P0.26. For filtering, we average over a sample size of 50. | ||
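
The mux cycling and averaging can be sketched as below. This is a simplified model, assuming the 50 samples are taken back to back for one sensor before moving to the next; the text does not say whether the real firmware does this or keeps a running average. `read_adc` and `set_select` are hypothetical callbacks standing in for the ADC and GPIO drivers.

```python
def mux_select_bits(channel):
    """Return the (S1, S0) select-line levels for mux channel 0..3."""
    if not 0 <= channel <= 3:
        raise ValueError("the mux has four channels, 0 through 3")
    return (channel >> 1) & 1, channel & 1


def read_all_sensors(read_adc, set_select, num_sensors=4, sample_size=50):
    """Cycle the select lines 00 -> 11 and average sample_size readings each."""
    results = []
    for channel in range(num_sensors):
        s1, s0 = mux_select_bits(channel)
        set_select(s1, s0)                       # route this sensor to the ADC pin
        samples = [read_adc() for _ in range(sample_size)]
        results.append(sum(samples) / len(samples))
    return results
```

A plain mean smooths random noise but reacts slowly to a real change in distance, so the sample size is a trade-off between stability and latency.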

| + | |||

| + | ===== LIDAR ===== | ||

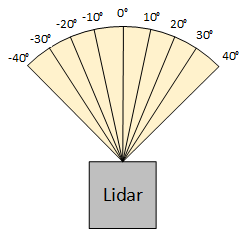

| + | Our Lidar class contains a reference to the instance of the PWM class (for the servo) and to the I2C2 class (for communication with the lidar). It also contains an array with 9 elements to hold each of the measurements obtained during the 90° sweep. | ||

| + | |||

| + | During development, we found that the LIDAR takes measurements far faster than the servo can turn. Thus, we had to add a delay long enough for the servo to position itself before obtaining readings. To accomplish this, we created a separate task for the LIDAR itself called <code> LidarTask </code> so as not to overrun the periodic tasks. This is because we have the ultrasonic sensors and battery information running in the task that would have been most suitable for the lidar. The delay value was obtained from the servo's datasheet that indicated how many degrees it can turn in a specific amount of time. | ||

| + | |||

| + | [[File:CmpE243 F17 Vindicators100 LidarMeasurements.png|thumb|Lidar Position Diagram]] | ||

| + | |||

| + | The basic process for the LIDAR is that it sweeps across a 90° angle right in front of it, as shown in the ''LIDAR Position Diagram''. We have a set list of 9 duty cycles for each position we wish to obtain values for. The duty cycles were obtained by calculating the time in ms needed to move 10°. This is covered in further detail in the ''Implementation'' section. The basic algorithm is as follows: | ||

| + | |||

| + | # Iterate through the list of positions in ascending order | ||

| + | ## Set position of servo | ||

| + | ## Wait long enough for servo to position itself | ||

| + | ## Take measurement and store it | ||

| + | # When ascending order is complete, give the semaphore to send lidar data via CAN | ||

| + | # Set increment flag to false | ||

| + | # Iterate through the list of positions in descending order | ||

| + | ## Set position of servo | ||

| + | ## Wait long enough for servo to position itself | ||

| + | ## Take measurement and store it | ||

| + | # When descending order is complete, give the semaphore to send lidar data via CAN | ||

| + | # Set increment flag to true | ||
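
The sweep steps above can be sketched as a short Python model. The hardware is replaced by hypothetical callbacks (`set_servo`, `wait_for_servo`, `take_measurement`), and `publish` stands in for giving the semaphore that triggers the CAN send. The real <code>LidarTask</code> runs forever, whereas this sketch runs a fixed number of sweeps so it can be exercised off-target.

```python
def sweep_once(positions, set_servo, wait_for_servo, take_measurement, ascending):
    """Visit every servo position once and return readings indexed by position."""
    indices = range(len(positions))
    order = indices if ascending else reversed(indices)
    readings = [0] * len(positions)
    for i in order:
        set_servo(positions[i])     # command the duty cycle for this position
        wait_for_servo()            # give the servo time to settle
        readings[i] = take_measurement()
    return readings


def lidar_task(positions, set_servo, wait_for_servo, take_measurement, publish, sweeps):
    """Alternate ascending and descending sweeps, publishing after each one."""
    increment = True
    for _ in range(sweeps):
        readings = sweep_once(positions, set_servo, wait_for_servo,
                              take_measurement, increment)
        publish(readings)           # stand-in for giving the CAN-send semaphore
        increment = not increment   # reverse direction for the next sweep
```

Storing each reading at the index of its position keeps the data layout the same for both sweep directions, so the receiver does not need to know which way the servo was travelling.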

| + | |||

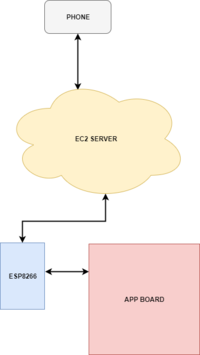

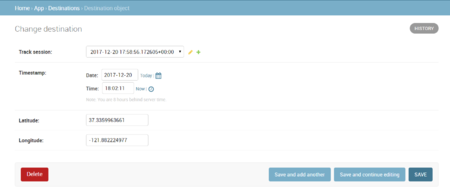

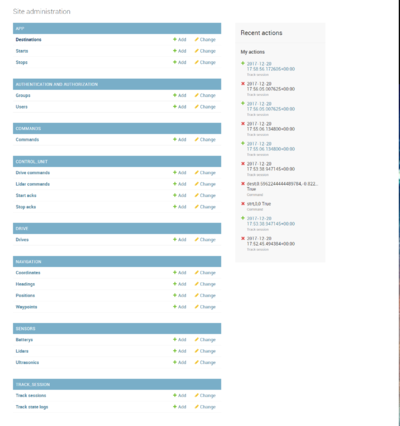

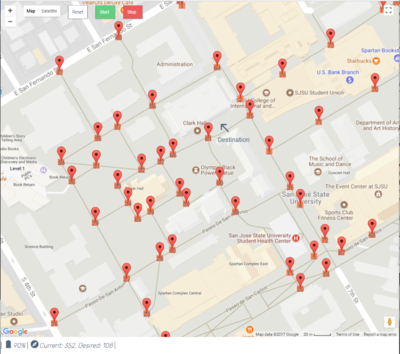

| + | ==== APP BOARD / ESP8266 / Server / Phone Communication ==== | ||

| + | {| | ||

| + | |The Phone communicates to the server via a website. The website was created with the [https://www.djangoproject.com/ Django Web Framework]. | ||

| + | Commands from the phone are saved in a database that the ESP8266 and APP Board can poll. | ||

| + | |||

| + | Here is the basic flow: | ||

| + | * The APP board sends a command to read a specific URL. | ||

| + | :: For instance, the APP board can send "http://exampleurl.com/commands/read/" to the ESP8266. | ||

| + | * The ESP8266 will make a GET request to the server to see if there are any commands it needs to execute. | ||

| + | * If it finds one, it will send that information over UART to the APP board. | ||

| + | |||

| + | * To send from the APP to the server, the APP board sends a URL to the ESP8266 such as "http://exampleurl.com/nav/update_heading/?current_heading=0&desired_heading=359". | ||

| + | * The ESP8266 makes a GET request so that the server can update the current and desired headings. | ||

| + | |||

| + | |[[File:CmpE243 F17 Vindicators100 APP Server Communication.png|thumb|right|200px|<center>APP Communication Dataflow</center>]] | ||

| + | |} | ||
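
As an illustration of the heading-update request described above, the following sketch builds and parses that URL with Python's standard library. The host is the placeholder from the text, and the function names are hypothetical; this is not the team's Django view or APP board code.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

BASE_URL = "http://exampleurl.com"   # placeholder host used in the text


def heading_update_url(current_heading, desired_heading, base_url=BASE_URL):
    """Build the GET URL that reports the current and desired headings."""
    query = urlencode({
        "current_heading": current_heading,
        "desired_heading": desired_heading,
    })
    return f"{base_url}/nav/update_heading/?{query}"


def parse_heading_update(url):
    """Extract both headings from such a URL, as the server side might."""
    params = parse_qs(urlsplit(url).query)
    return int(params["current_heading"][0]), int(params["desired_heading"][0])
```

Encoding data in the query string of a GET request keeps the ESP8266 side simple, at the cost of using GET for an operation that changes server state.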

| + | {| | ||

| + | [[File:CmpE243_F17_Vindicators100_App_Log_Details.PNG|thumb|left|450px|<center>Detailed View of Each Log Entry</center>]] | ||

| + | | | ||

| + | [[File:CmpE243_F17_Vindicators100_App_Admin.PNG|thumb|center|400px|<center>APP Admin for Checking Logs</center>]] | ||

| + | | | ||

| + | [[File:CmpE243_F17_Vindicators100_App_Interface.PNG|thumb|right|400px|<center>Main Interface for Selecting Destination</center>]] | ||

| + | |} | ||

| + | |||

| + | ==== Control Unit ==== | ||

| + | |||

| + | The control unit does the majority of its work in the 10Hz periodic task. The 1Hz task is used to check for CAN bus off and to toggle the control unit's heartbeat LED. The 100Hz task is used exclusively for decoding incoming CAN messages and MIA handling. | ||

| + | |||

| + | The 10Hz and 100Hz tasks each employ a state machine, both of which are controlled by the STOP and GO messages from APP. If APP sends a GO signal, the state machine will switch to the "GO" state. If APP sends a STOP signal, the state machine will switch to the "STOP_STATE" state. While in the "STOP_STATE" state, the program will simply wait for a GO signal from APP. While in the "GO" state, the 10Hz task will perform the path finding, object avoidance, speed, and headlight logic and the 100Hz task will begin decoding incoming CAN messages. | ||
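
The two-state behaviour can be sketched as a pure transition function. The state names come from the text. Giving STOP priority when both signals arrive in the same cycle is an assumption made here for safety, not something the text specifies.

```python
from enum import Enum


class State(Enum):
    STOP_STATE = 0
    GO = 1


def next_state(state, go_received, stop_received):
    """Return the next state given the signals seen from APP this cycle."""
    if stop_received:          # assumption: STOP wins if both signals arrive
        return State.STOP_STATE
    if go_received:
        return State.GO
    return state               # no signal: stay where we are
```

Keeping the transition logic free of side effects makes it straightforward to unit test before wiring it into the periodic tasks.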

| + | |||

| + | ===== Path Finding ===== | ||

| + | For path finding, NAV sends CONTROL_UNIT two headings: projected and current. Projected heading is the heading the car needs to be facing in order to be pointed directly at the next waypoint. This is calculated from the coordinates of the car's current location and the coordinates of the next waypoint. Current heading is the direction the car is pointing at that moment. These two values are used to determine which direction to turn and how sharply. | ||
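
One common way to compute a projected heading and the resulting turn is sketched below. It uses the standard initial great-circle bearing formula, which may differ from the calculation the NAV board actually performs. The function names and the sign convention (positive means turn clockwise) are assumptions.

```python
import math


def projected_heading(lat1, lon1, lat2, lon2):
    """Bearing from point 1 to point 2 in degrees clockwise from north, [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def heading_error(current, projected):
    """Smallest signed turn in degrees, in [-180, 180); positive is clockwise."""
    return (projected - current + 180.0) % 360.0 - 180.0
```

The sign of the error gives the turn direction and its magnitude gives the severity. Wrapping into [-180, 180) avoids the car turning the long way around when the headings straddle north, for example 350 and 10 degrees.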

| + | |||

| + | |||

| + | Using the grid of GPS coordinates, a data structure of nodes is created. Each node is aware of its neighbor nodes to the North, East, South, and West. A modified implementation of the Astar algorithm is used to determine the path of grid nodes, given a start node and an end node. Astar is intended for use with equally spaced GPS coordinates. In this case, our grid is made up of arbitrary GPS coordinates that were picked strategically throughout the campus to avoid "noisy" paths and favor paved paths. Because of our custom grid, Astar was modified to do the following: | ||

| + | #{A} Given a current node, get a list of every neighbor = (n) and every neighbor's neighbor = (nn). | ||

| + | #{B} Calculate the distance from every (nn) to the end node = (f) | ||

| + | #{C} Calculate the distance from the current node to every (nn) = (s) | ||

| + | #{D} Scale (s) down to 30% of its value. [Why? Because the cost of a possible node (nn) is the sum of (f) + (s). Scaling (s) down allows the algorithm to pick nodes that are closer to the end node regardless of how far they may be from the current node.] | ||

| + | #{E} Sum (0.3 * s) + (f) = (c) | ||

| + | #{F} Pick the (nn) with the least (c) = (nnBest) | ||

| + | #{G} Set current node to the (n), which is a neighbor of (nnBest). | ||

| + | #Loop back to {A} if current node is not equal to end node. | ||
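
Steps {A} through {G} can be sketched as follows, using the 0.3 weight from step {E}. This is a simplified model rather than the team's implementation: it uses flat (x, y) coordinates with Euclidean distance instead of GPS coordinates, skips the current node when collecting (nn), steps straight to the end node when it is a direct neighbor, and caps the number of iterations. Those last three choices are assumptions added so that the sketch terminates.

```python
import math


def dist(a, b, coords):
    """Euclidean distance between two nodes given a name -> (x, y) map."""
    (ax, ay), (bx, by) = coords[a], coords[b]
    return math.hypot(ax - bx, ay - by)


def find_path(start, end, neighbors, coords, s_weight=0.3, max_steps=100):
    """Follow the two-level lookahead described in steps {A} to {G}."""
    path = [start]
    current = start
    for _ in range(max_steps):
        if current == end:
            return path
        if end in neighbors[current]:        # assumed shortcut, not in the text
            current = end
            path.append(current)
            continue
        best = None                          # (cost c, neighbor n leading to nnBest)
        for n in neighbors[current]:
            for nn in neighbors[n]:
                if nn == current:            # assumption: never look back at ourselves
                    continue
                f = dist(nn, end, coords)        # {B} nn to end node
                s = dist(current, nn, coords)    # {C} current node to nn
                c = s_weight * s + f             # {D}, {E} scaled sum
                if best is None or c < best[0]:  # {F} keep the cheapest nn
                    best = (c, n)
        if best is None:
            return None                      # dead end: no candidates to evaluate
        current = best[1]                    # {G} move to the n next to nnBest
        path.append(current)
    return path if current == end else None
```

Unlike textbook A*, this greedy lookahead keeps no open or closed set, so it does not guarantee the shortest path. It relies on the hand-picked grid being laid out so that the locally best choice also leads toward the destination.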

| + | |||

| + | ===== Object Avoidance ===== | ||

| + | Object avoidance is broken into multiple steps: | ||

| + | #Simplify ultrasonic readings to whether the sensor is "blocked" or not. | ||

| + | #Simplify lidar readings to whether the reading is "blocked", "getting close", or "safe". | ||

| + | #Determine what direction to turn based solely on lidar. | ||

| + | #Determine what direction to turn based solely on ultrasonics. | ||

| + | #Determine final steering angle based on path finding and lidar and ultrasonic direction calculations. | ||
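
The first two steps, reducing raw distances to coarse categories, can be sketched as below. The threshold values are hypothetical placeholders, since the distances the team used are not given in the text.

```python
ULTRASONIC_BLOCKED_CM = 50    # hypothetical threshold
LIDAR_BLOCKED_CM = 100        # hypothetical threshold
LIDAR_CLOSE_CM = 200          # hypothetical threshold


def classify_ultrasonic(distance_cm):
    """Return True when an ultrasonic reading counts as blocked."""
    return distance_cm < ULTRASONIC_BLOCKED_CM


def classify_lidar(distance_cm):
    """Map a lidar reading to 'blocked', 'getting close', or 'safe'."""
    if distance_cm < LIDAR_BLOCKED_CM:
        return "blocked"
    if distance_cm < LIDAR_CLOSE_CM:
        return "getting close"
    return "safe"
```

Reducing each reading to a category first means the later steering steps can be written, and unit tested, as simple rules over a handful of states instead of raw centimeter values.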

| + | |||

| + | ===== Speed ===== | ||

| + | Because the maximum speed of our car is about 2mph, speed modulation is relatively simple and can be simplified into stop, slow, and full speed. When lidar detects something is "getting close", the speed will be "slow". The speed will also be set to slow when the car is performing a turn or reversing. If all ultrasonic sensors are blocked (including the rear sensor), the car will stop. | ||
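
The speed rules in this paragraph can be sketched as a single function. The category strings and argument names are assumptions chosen for the example. Checking the stop condition first is also an assumption about rule priority.

```python
def select_speed(lidar_states, ultrasonic_blocked, turning, reversing):
    """Pick 'stop', 'slow', or 'full' from the simplified sensor states.

    lidar_states: iterable of 'blocked' / 'getting close' / 'safe' strings.
    ultrasonic_blocked: list of booleans, one per sensor, rear included.
    """
    if ultrasonic_blocked and all(ultrasonic_blocked):
        return "stop"                      # boxed in on every side
    if "getting close" in lidar_states or turning or reversing:
        return "slow"
    return "full"
```

For example, a clear path while driving straight yields full speed, while the same sensor state during a turn yields slow.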

| + | |||

| + | ===== Headlights ===== | ||

| + | If the light value received from SENSORS is below 10 percent, CONTROL_UNIT sends a signal to DRIVE to turn on the headlights. | ||

=== Implementation === | === Implementation === | ||

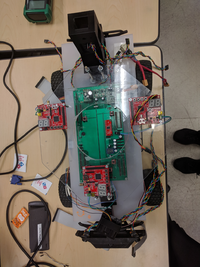

| − | This | + | [[File:CmpE243 F17 Vindicators100 integration.png|thumb|left|200px|<center>Image of our integration in progress</center>]] |

| + | We tested each of the components individually before integrating each component. | ||

| + | Once each of the systems was verified to be working correctly, we connected one system at a time. We did this to help us identify any systems that caused problems when they were added to the PCB. | ||

| + | |||

| + | The following tables summarize the implementation of each system by listing the periodic tasks it runs. Together they show how each system works with the others. | ||

| + | |||

| + | [[File:CmpE243 F17 Vindicators100 PCBLabeled.png|thumb|400px|right|<center>PCB Final Design</center>]] | ||

| + | |||

| + | {| class="wikitable" | ||

| + | | | ||

| + | ==== Drive ==== | ||

| + | | | ||

| + | '''Periodic Init''' | ||

| + | * Initializes CAN as well as sets servo PWM and motor PWM to 0. <br> | ||

| + | '''1Hz Task''' | ||

| + | * Used to check the state of CAN. If it's off, LED 1 turns off to indicate that bus is off.<br> | ||

| + | '''10Hz Task''' | ||

| + | * Sets PID controller target and feedback values | ||

| + | * Sets the direction of the motor based on control values | ||

| + | * Converts motor RPM to duty cycle using the exponential moving average value | ||

| + | * Sends feedback to control (speed and direction) <br> | ||

| + | '''100 Hz Task''' | ||

| + | * Receives CAN messages from control and sensors. Control provides speed, direction, and steering angle. We use the sensors data to display it on the LCD display. | ||

| + | |} | ||

| + | |||

| + | {| class="wikitable" | ||

| + | | | ||

| + | ==== GPS ==== | ||

| + | | | ||

| + | '''Periodic Init''' | ||

| + | * Init CAN | ||

| + | * Init GPS and compass <br> | ||

| + | * Load GPS Nodes from SD card | ||

| + | '''1Hz Task''' | ||

| + | * Check CAN bus status and reset if off | ||

| + | '''10Hz Task''' | ||

| + | * Calculate heading and convert to degrees | ||

| + | * Parse the GPS message and send to control via CAN | ||

| + | * Calculate distance from next node and increment waypoint index when close enough | ||

| + | '''100Hz Task''' | ||

| + | * Receive destination via CAN | ||

| + | * Send software ack to App | ||

| + | * Calculate nearest waypoint | ||

| + | * Get GPS frame and parse the new message | ||

| + | |} | ||

| + | |||

| + | {| class="wikitable" | ||

| + | | | ||

| + | |||

| + | ==== App ==== | ||

| + | | | ||

| + | ''' Periodic Init ''' | ||

| + | * Init UART | ||

| + | * Init CAN | ||

| + | ''' 1Hz Task ''' | ||

| + | * Check CAN bus state and reset if bus off | ||

| + | '''100Hz Task''' | ||

| + | * Sends start and stop conditions to start or stop car | ||

| + | * Get destination from ESP and send to control | ||

| + | * Receive CAN messages from each unit with data such as sensor values | ||

| + | |} | ||

| + | {| class="wikitable" | ||

| + | | | ||

| + | ==== Control ==== | ||

| + | | | ||

| + | '''Periodic Init''' | ||

| + | * Init CAN | ||

| + | '''1Hz Task''' | ||

| + | * Check CAN bus status and reset if off | ||

| + | '''10Hz Task''' | ||

| + | * Obtain LIDAR and ultrasonic messages via CAN | ||

| + | * Run through state machine and send messages to relevant systems based on feedback from each system | ||

| + | '''100Hz Task''' | ||

| + | * Receive messages from other systems via CAN on their status | ||

| + | |} | ||

| + | {| class="wikitable" | ||

| + | | | ||

| + | |||

| + | ==== Sensors ==== | ||

| + | | | ||

| + | '''Periodic Init''' | ||

| + | * Initialize all GPIOs used for ADC mux and CAN.<br> | ||

| + | ''' LIDAR Init''' | ||

| + | * Initialize PWM and i2c for servo and LIDAR respectively.<br> | ||

| + | '''1Hz Task''' | ||

| + | * Check CAN bus status | ||

| + | * Send light sensor data to control<br> | ||

| + | ''' 10Hz Task''' | ||

| + | * Take semaphore from LIDAR task and send values back to control | ||

| + | * Get ADC readings from ultrasonics and filter out any value spikes | ||

| + | '''100Hz Task''' | ||

| + | * Send ultrasonic values back to control<br> | ||

| + | '''LIDAR Task''' | ||

| + | * Moves servo to each position based on a list of set duty cycles | ||

| + | * Takes measurements from LIDAR | ||

| + | * Once all measurements are obtained, it sends back 9 data points to control | ||

| + | |} | ||

== Testing & Technical Challenges == | == Testing & Technical Challenges == | ||

| − | + | In this section we provide an overview of our testing methods for each component as well as technical challenges we observed throughout the course of this project. | |

| − | |||

| − | + | === Testing === | |

| − | === Single frequency setting for PWM === | + | For unit testing we used cgreen for each of the modules. |

| + | |||

| + | Each of the modules was tested independently before any full integration testing. | ||

| + | |||

| + | ==== GPS & Compass ==== | ||

| + | |||

| + | GPS was tested by taking the GPS module and walking around campus to map out accuracy and areas of the campus that gave us inaccurate readings. | ||

| + | Once the nodes were set up for pathfinding, the following were tested for accuracy: | ||

| + | # The coordinate of each node versus what the GPS determined it to be at the physical location | ||

| + | # The accuracy of the calculated heading from the car's current location to the next node in relation to true north | ||

| + | # The accuracy of the heading given by the compass against the calculated heading in relation to true north | ||

| + | # The distance from the car's location to the next waypoint | ||

| + | |||

| + | ==== Drive ==== | ||

| + | |||

| + | We tested the basic functionality of drive in a multi-part process. | ||

| + | Each component was tested in the following order: | ||

| + | # Test motor functionality - get the wheels turning | ||

| + | # Test the steering capability - turn the wheels | ||

| + | # Combine both and test | ||

| + | |||

| + | We began with testing the drive functionality by supplying the necessary signals to see whether we could get it to run at different speeds. We did this by programming each of the buttons to supply different duty cycles. We used an oscilloscope to verify the duty cycles along with the frequency since we had issues with incorrect frequencies. This is discussed further in the ''Technical Challenges'' section. | ||

| + | |||

| + | Once that was complete, we used an oscilloscope to verify that our GPIO output was supplying the expected PWM signal. We then programmed the onboard push buttons to subtract or add degrees to verify that the wheels would turn as expected. | ||

| + | |||

| + | Finally, we integrated both parts and provided test values from control to ensure that it behaved as expected. | ||

| + | |||

| + | ==== Sensors ==== | ||

| + | |||

| + | ===== Ultrasonic ===== | ||

| + | |||

| + | Overall testing of the ultrasonic sensors was done by pointing the sensors at different objects and checking the output in Hercules to verify that the values were correct. This is where we found that once we added the analog/digital mux, the values we were receiving were no longer accurate. We go over the details in the ''Technical Challenges'' section. | ||

| + | |||

| + | ===== LIDAR ===== | ||

| + | |||

| + | To test the LIDAR, a variety of methods was used. We began by taping a piece of paper in front of the LIDAR and measured the distance. We then looked at the output values obtained and printed each value at each position. This helped us identify that the values were offset or sometimes incorrect because the LIDAR was able to take measurements faster than the servo could move. | ||

| + | |||

| + | To fix this, we began with the datasheet for the servo and found that it took 0.16 seconds to move 60°. Using this measurement, we began by adding a 160ms delay between servo moves. Then, by trial and error we found that a 90ms delay would allow us to retain the correct values. | ||
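
The datasheet figure quoted above can be turned into a per-step estimate with a simple proportion. This sketch assumes the servo moves at a constant rate, which is an idealization: the datasheet number ignores load, acceleration, and settling.

```python
DATASHEET_SECONDS = 0.16    # time quoted in the text for a 60 degree move
DATASHEET_DEGREES = 60.0


def settle_time_ms(step_degrees):
    """Estimate the time to move step_degrees, assuming constant servo speed."""
    return step_degrees / DATASHEET_DEGREES * DATASHEET_SECONDS * 1000.0
```

For a 10° step this gives roughly 27 ms, well below the 90 ms delay found by trial and error, which suggests the idealized datasheet rate alone is not a safe lower bound for the delay.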

| + | |||

| + | === Technical Challenges === | ||

| + | |||

| + | ==== Timing issue after adding Analog/Digital mux ==== | ||

| + | Adding an analog/digital mux caused a lot of problems with obtaining accurate values from the ultrasonic sensors. Since we had chained the sensors to take measurements one at a time, we had to fine-tune the timing to work with the mux because the mux requires us to use GPIO pins for the select lines. Getting the timing right was crucial for switching between the sensors so that each one's signal reached the ADC pin for conversion. | ||

| + | |||

| + | ==== Single frequency setting for PWM ==== | ||

Using PWM on the SJOne board only allows you to run all PWM pins at the same frequency. For drive, we required two different frequencies - one for the motor and one for the servo. We solved this issue by generating our own PWM signal using a GPIO pin and a RIT (repetitive interrupt timer). | Using PWM on the SJOne board only allows you to run all PWM pins at the same frequency. For drive, we required two different frequencies - one for the motor and one for the servo. We solved this issue by generating our own PWM signal using a GPIO pin and a RIT (repetitive interrupt timer). | ||
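
The idea of a timer-driven software PWM can be sketched as tick arithmetic. The 50 Hz frequency and 50 µs timer tick in the usage note are assumptions for illustration, as the actual RIT period and target frequency are not given here. The function names are hypothetical.

```python
def pwm_ticks(frequency_hz, duty_percent, tick_us):
    """Return (period_ticks, high_ticks) for a timer firing every tick_us."""
    period_ticks = round(1_000_000 / (frequency_hz * tick_us))
    high_ticks = round(period_ticks * duty_percent / 100)
    return period_ticks, high_ticks


def simulate_pin(period_ticks, high_ticks, total_ticks):
    """Model the GPIO level the interrupt handler would set on each tick."""
    return [1 if (t % period_ticks) < high_ticks else 0
            for t in range(total_ticks)]
```

With these assumed values, `pwm_ticks(50, 7.5, 50)` gives a 400-tick period with 30 ticks high. The tick length sets the duty-cycle resolution, so a shorter tick gives finer control at the cost of more interrupt load.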

| − | === Inaccurate PWM Frequency === | + | ==== Inaccurate PWM Frequency ==== |

When originally working with PWM for drive, we found that the actual frequency that was set was inaccurate. We expected the frequency to be 20kHz, but when we hooked it up to the oscilloscope we found that it was much higher. It was consistently setting the frequency to output about 10 times the expected value. We came to the realization that since we had been declaring the PWM class as a global, the initialization was not being done properly. We are not sure why this is the case, but after we instantiated the PWM class in the periodic init function, the frequency was correct. | When originally working with PWM for drive, we found that the actual frequency that was set was inaccurate. We expected the frequency to be 20kHz, but when we hooked it up to the oscilloscope we found that it was much higher. It was consistently setting the frequency to output about 10 times the expected value. We came to the realization that since we had been declaring the PWM class as a global, the initialization was not being done properly. We are not sure why this is the case, but after we instantiated the PWM class in the periodic init function, the frequency was correct. | ||

| − | === Difficulties with Quadrature Counter === | + | ==== Difficulties with Quadrature Counter ==== |

We had issues getting the quadrature counter to give us valid numbers. | We had issues getting the quadrature counter to give us valid numbers. | ||

Our solution was to use LPC's built-in counter instead of having an extra external component. | Our solution was to use LPC's built-in counter instead of having an extra external component. | ||

| − | === | + | ==== Finding Closest Node from Start Point ==== |

| − | The | + | The path-finding algorithm requires the car to find the closest node before it can start the route. However, if the closest node is across a building, the car will attempt to drive through buildings to get to that node. We fixed this by adding more nodes such that the closest node will never be across a building. |

| − | |||

== Conclusion == | == Conclusion == | ||

| − | + | This project definitely taught us a lot; it has not only taught us new technical skills such as utilizing CAN bus communication, but it has also taught us life skills. We found how difficult a large scale project like this is to implement with so many different groups and how many things can go wrong. This really showed us the importance of time management and testing. | |

=== Project Video === | === Project Video === | ||

| − | + | You can find the Vindicators100 project video at https://youtu.be/JlotRHnlk_0 | |

=== Project Source Code === | === Project Source Code === | ||

| Line 401: | Line 916: | ||

== References == | == References == | ||

=== Acknowledgement === | === Acknowledgement === | ||

| − | + | We would like to thank Professor Preetpal Kang, the ISAs, and Khalil for the guidance they provided us throughout this project. | |

=== References Used === | === References Used === | ||

| − | + | ||

| + | ==== APP ==== | ||

| + | [https://docs.djangoproject.com/en/1.11/ Django Web Framework Documentation]<br> | ||

| + | [https://aws.amazon.com/getting-started/ Amazon AWS Documentation]<br> | ||

| + | |||

| + | ==== Navigation / GPS ==== | ||

| + | [http://www.st.com/resource/en/datasheet/lsm303dlhc.pdf LSM303 Datasheet]<br> | ||

| + | [https://www.u-blox.com/sites/default/files/products/documents/u-blox8-M8_ReceiverDescrProtSpec_%28UBX-13003221%29.pdf uBlox NEO M8N Protocol Description]<br> | ||

| + | [https://www.u-blox.com/sites/default/files/NEO-M8_DataSheet_%28UBX-13003366%29.pdf uBlox NEO M8N Datasheet]<br> | ||

| + | |||

| + | ==== Sensors ==== | ||

| + | [https://static.garmin.com/pumac/LIDAR_Lite_v3_Operation_Manual_and_Technical_Specifications.pdf Garmin LIDAR Lite v3 Datasheet]<br> | ||

| + | [https://maxbotix.com/documents/HRLV-MaxSonar-EZ_Datasheet.pdf Maxbotix Ultrasonic Sensor Datasheet] | ||

| + | |||

| + | ==== Drive ==== | ||

| + | [https://www.pololu.com/product/2992 Pololu G2 Motor Driver Specs] | ||

| + | |||

| + | ==== SJONE ==== | ||

| + | [http://www.socialledge.com/ Social Ledge]<br> | ||

=== Appendix === | === Appendix === | ||

You can list the references you used. | You can list the references you used. | ||

Latest revision as of 06:45, 27 December 2017


Abstract

Objectives & Introduction

Show list of your objectives. This section includes the high level details of your project. You can write about the various sensors or peripherals you used to get your project completed.

Team Members & Responsibilities

- Sameer Azer

- Project Lead

- Sensors

- Quality Assurance

- Kevin Server

- Unit Testing Lead

- Control Unit

- Delwin Lei

- Sensors

- Control Unit

- Harmander Sihra

- Sensors

- Mina Yi

- DEV/GIT Lead

- Drive System

- Elizabeth Nguyen

- Drive System

- Matthew Chew

- App

- GPS/Compass

- Mikko Bayabo

- App

- GPS/Compass

- Rolando Javier

- App

- GPS/Compass

Schedule

| Sprint | End Date | Plan | Actual |

|---|---|---|---|

| 1 | 10/10 | App(HL reqs, and framework options);

Master(HL reqs, and draft CAN messages); GPS(HL reqs, and component search/buy); Sensors(HL reqs, and component search/buy); Drive(HL reqs, and component search/buy); |

App(Angular?);

Master(Reqs identified, CAN architecture is WIP); GPS(UBLOX M8N); Sensors(Lidar: 4UV002950, Ultrasonic: HRLV-EZ0); Drive(Motor+encoder: https://www.servocity.com/437-rpm-hd-premium-planetary-gear-motor-w-encoder, Driver: Pololu G2 24v21, Encoder Counter: https://www.amazon.com/SuperDroid-Robots-LS7366R-Quadrature-Encoder/dp/B00K33KDJ2); |

| 2 | 10/20 | App(Further Framework research);

Master(Design unit tests); GPS(Prototype purchased component: printf(heading and coordinates); Sensors(Prototype purchased components: printf(distance from lidar and bool from ultrasonic); Drive(Prototype purchased components: move motor at various velocities); |

App(Decided to use ESP8266 web server implementation);

Master(Unit tests passed); GPS(GPS module works, but inaccurate around parts of campus. Compass not working. Going to try new component); Sensors(Ultrasonic works [able to get distance reading over ADC], Lidar doesn't work with I2C driver. Need to modify I2C Driver); Drive(Able to move. No feedback yet.); |

| 3 | 10/30 | App(Run a web server on ESP8266);

Master(TDD Obstacle avoidance); GPS(Interface with compass); Sensors(Interface with Lidar); Drive(Interface with LCD Screen); |

App(web Server running on ESP8266, ESP8266 needs to "talk" to SJOne);

Master(Unit tests passed for obstacle avoidance using ultrasonic); GPS(Still looking for a reliable compass); Sensors(I2C Driver modified. Lidar is functioning. Waiting on Servo shipment and more Ultrasonic sensors); Drive(LCD Driver works using GPIO); |

| 4 | 11/10 | App(Manual Drive Interface, Start, Stop);

Master(Field-test avoiding an obstacle using one ultrasonic and Lidar); GPS(TDD Compass data parser, TDD GPS data parser, Write a CSV file to SD card); Sensors(Interface with 4 Ultrasonics [using chaining], Test power management chip current sensor, voltage sensor, and output on/off, Field-test avoiding an obstacle using 1 Ultrasonic); Drive(Servo library [independent from PWM Frequency], Implement quadrature counter driver); |

App(Cancelled Manual Drive, Start/Stop not finished due to issues communicating with ESP);

Master(Field-test done without Lidar. Master is sending appropriate data. Drive is having issues steering.); GPS(Found compass and prototyping. Calculated projected heading.); Sensors(Trying to interface ADC MUX with the ultrasonics. Integrating LIDAR with servo); Drive(Done.); |

| 5 | 11/20 | App(Send/receive GPS data to/from App);

Master(Upon a "Go" from App, avoid multiple obstacles using 4 ultrasonics and a rotating lidar); GPS(TDD Compass heading and error, TDD GPS coordinate setters/getters, TDD Logging); Sensors(CAD/3D-Print bumper mount for Ultrasonics, CAD/3D-Print Lidar-Servo interface. Servo-Car interface); Drive(Implement a constant-velocity PID, Implement a PID Ramp-up functionality to limit in-rush current); |

App(Google map data point acquisition, and waypoint plotting);

Master(State machine set up, waiting on app); GPS(Compass prototyping and testing using raw values); Sensors(Working on interfacing Ultrasonic sensors with ADC mux. Still integrating LIDAR with the servo properly); Drive(Completed PID ramp-up, constant-velocity PID incomplete, but drives.); |

| 6 | 11/30 | App(App-Nav Integration testing: Send Coordinates from App to GPS);

Master(Drive to specific GPS coordinates); GPS(App-Nav Integration testing: Send Coordinates from App to GPS); Sensors(Field-test avoiding multiple obstacles using Lidar & Ultrasonics); Drive(Interface with buttons and headlight); |

App(SD Card Implementation for map data point storage; SD card data point parsing);

Master(waiting on app and nav); GPS(live gps module testing, and risk area assessment, GPS/compass integration); Sensors(Cleaning up Ultrasonic readings coming through the ADC mux. Troubleshooting LIDAR inaccuracy); Drive(Drive Buttons Interfaced. Code refactoring complete.); |

| 7 | 12/10 | App(Full System Test w/ PCB);

Master(Full System Test w/ PCB); GPS(Full System Test w/ PCB); Sensors(Full System Test w/ PCB); Drive(Full System Test w/ PCB); |

App(Full system integration testing with PCB);

Master(Done); GPS(Full system integration testing with PCB); Sensors(LIDAR properly calibrated with accurate readings); Drive(Code Refactoring complete. No LCD or Headlights mounted on PCB.); |

| 8 | 12/17 | App(Full System Test w/ PCB);

Master(Full System Test w/ PCB); GPS(Full System Test w/ PCB); Sensors(Full System Test w/ PCB); Drive(Full System Test w/ PCB); |

App(Fine-tuning and full system integration testing with ESP8266 webserver);

Master(Done); GPS(Final Pathfinding Algorithm Field-Testing); Sensors(Got Ultrasonic sensors working with ADC mux); Drive(Missing LCD and Headlights on PCB.); |

Parts List & Cost

| Part # | Part Name | Purchase Location | Quantity | Cost per item |
|---|---|---|---|---|
| 1 | SJOne Board | Preet | 5 | $80/board |
| 2 | 1621 RPM HD Premium Gear Motor | Servocity | 1 | $60 |
| 3 | 20 kg Full Metal Servo | Amazon | 1 | $18.92 |
| 4 | Maxbotix Ultrasonic Rangefinder | Adafruit | 4 | $33.95 |
| 5 | Analog/Digital Mux Breakout Board | RobotShop | 1 | $4.95 |
| 6 | EV-VN7010AJ Power Management IC Development Tools | Mouser | 1 | $8.63 |
| 7 | eBoot Mini MP1584EN DC-DC Buck Converter | Amazon | 2 | $9.69 |
| 8 | Garmin Lidar Lite v3 | SparkFun | 1 | $150 |
| 9 | ESP8266 | Amazon | 1 | $8.79 |
| 10 | Savox SA1230SG Monster Torque Coreless Steel Gear Digital Servo | Amazon | 1 | $77 |
| 11 | Lumenier LU-1800-4-35 1800mAh 35c Lipo Battery | Amazon | 1 | $34 |
| 12 | Acrylic Board | Tap Plastics | 2 | $1 |
| 13 | Pololu G2 High-Power Motor Driver 24v21 | Pololu | 1 | $40 |
| 14 | Hardware Components (standoffs, threaded rods, etc.) | Excess Solutions, Ace | - | $20 |
| 15 | LM7805 Power Regulator | Mouser | 1 | $0.90 |
| 16 | Triple-axis Accelerometer+Magnetometer (Compass) Board - LSM303 | Adafruit | 1 | $14.95 |

Design & Implementation

Hardware Design

In this section, we provide details on hardware design for each component - power management, drive, sensors, app, and GPS.

Power Management

We used an EV-VN7010AJ Power Management Board to monitor real-time battery voltage. The power board has a pin which outputs a current proportional to the battery voltage. Connecting a load resistor between this pin and ground gives a smaller voltage proportional to the battery voltage. We read this smaller voltage using the ADC pin on the SJOne Board.
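The conversion from an ADC reading back to a battery voltage can be sketched as follows. This is only an illustration: the 12-bit resolution, the 3.3 V reference, and especially `SENSE_SCALE` (the ratio between battery voltage and the voltage across the load resistor) are assumed placeholder values, and `battery_volts_from_adc` is a hypothetical helper name, not a function from our code base.

```c
#include <assert.h>
#include <stdint.h>

/* All constants below are illustrative assumptions, not measured values. */
#define ADC_MAX_COUNT  4095.0f  /* assumed 12-bit ADC full-scale count */
#define ADC_REF_VOLTS  3.3f     /* assumed ADC reference voltage */
#define SENSE_SCALE    8.0f     /* hypothetical: battery volts per volt across the load resistor */

/* Convert a raw ADC count into an estimated battery voltage. */
float battery_volts_from_adc(uint16_t raw_count)
{
    assert(raw_count <= 4095);
    /* Voltage seen at the ADC pin (across the load resistor). */
    float sense_volts = ((float)raw_count / ADC_MAX_COUNT) * ADC_REF_VOLTS;
    /* Scale back up to the battery voltage. */
    return sense_volts * SENSE_SCALE;
}
```

In practice the scale factor would be found by comparing ADC readings against a multimeter measurement of the battery.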

The battery voltage is split into two 5V rails: an analog 5V rail (for the servos) and a digital 5V rail (for the boards, transceivers, LIDAR, ultrasonic sensors, etc.). Two buck converters step the battery voltage down to 5V. A separate battery-voltage rail goes to the drive system, which uses PWM to get its desired voltage level.

There is also an LM7805 regulator which is used just to power some of the power management chip's control signals.

Drive

Our drive system contains the following hardware components:

The motor driver is powered by our battery (about 16v). It takes in a PWM signal from the SJOne board. It also has an active-low control signal, SLP, which is tied high to the 3.3v power rail. The motor driver outputs two signals, OUTA and OUTB, which control the propulsion motor. The propulsion motor in turn outputs signals on CH A and CH B to the SJOne board, and it is also powered by our 16v battery. The steering servo is controlled by the SJOne board via PWM and requires a 5v input. The headlights use a MOSFET whose drain is connected to the 16v battery. The gate is driven by a PWM signal from the SJOne board, and the source is connected to the LED module's Vin. The LCD display requires a 5v input and has two control signals, EN and RS. It receives data from the SJOne board via GPIO on pins D0-D3 to display items on the LCD screen. Lastly, as with all other systems, all communication between boards is done via CAN, so drive also has a CAN transceiver, powered with 3.3v, which is connected to the SJOne's RX and TX line on pin |

Sensors

We are using two sets of sensors - a LIDAR and 4 ultrasonic sensors. The Sensors System Hardware Design provides an overview of the entire sensors system and its connections.

LIDAR

| Our LIDAR system has two main hardware components - the LIDAR and the servo. The LIDAR is mounted on the servo to provide a "big-picture" view of the car's surroundings. The LIDAR System Diagram shows the pin connections from the LIDAR and the servo to the SJOne board. The bottom portion in red is our servo and mounted on top is the LIDAR. Both the LIDAR and servo require a 5v input. However, to minimize noise, the servo is powered via the 5v analog power rail and the LIDAR is powered via the 5v digital power rail. The LIDAR is connected to the SJOne board using the i2c2 pins (P0.10 & P0.11). To mount the LIDAR onto the servo, a case that holds the LIDAR and a separate mounting piece were designed and printed using a 3D printer. The Lidar System and Mount Diagrams show the three components for mounting the LIDAR to the servo and then to the car. |

Ultrasonic Sensors

|

The ultrasonic sensor setup on the autonomous car consists of three ultrasonic sensors on the front and one on the back. The front sensors are laid out with one sensor down the middle and one on each side angled at 26.4/45 degrees. The sensors are powered using the 5v digital power rail. In addition to the sensors, an Analog/Digital mux was required to allow us to obtain values for all 4 sensors using a single ADC pin on the SJOne board. All sensors are daisy chained together, with the initial sensor triggered by a GPIO pin. A mount for all the ultrasonic sensors was designed and 3D printed. In the CAD Design for Ultrasonic Sensor Mount, the top right component is used for the rear sensor, while the remaining three are combined to create the front holding mount that is attached to the front of our car. |

App

|

Our application uses an ESP8266 as our bridge for wifi connectivity. The ESP8266 takes an input voltage of 5v which is connected to the 5v digital power rail. The ESP8266's RX is connected to the TXD2 pin on the SJOne board and the TX is connected to the RXD2 on the SJOne board for UART communication. As with all other components, we are using CAN for communication between the boards and so it uses |

GPS

Our GPS system uses the following:

The u-blox NEO uses UART for communication, so its TX is connected to the SJOne's RXD2 pin and its RX is connected to the TXD2 pin. The module is powered directly from the SJOne board using the onboard 3.3v output pin, which is connected to a 3.3v power rail that also supplies power to other modules. The LSM303 magnetometer module uses i2c for communication. Its SDA is connected to the SJOne's SDA2 pin and its SCL is connected to the SJOne's SCL2 pin. The LSM303 also requires 3.3v and is connected to the same 3.3v power rail. |

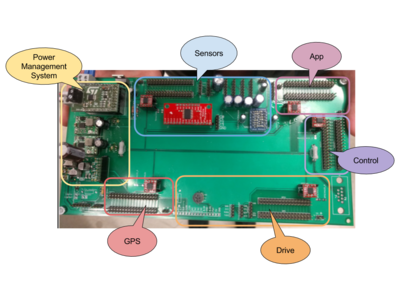

PCB Design

| Once we finalized all the separate systems, we were able to design our PCB.

During this process, we collected all of the circuit diagrams and the necessary pinouts from each group to decide where to place each of the components. In addition, we added extra pins where necessary in order to test each component easily. For example, we provided two sets of SJOne pin connections - one for the ribbon cable that connects to the SJOne board and one extra set of pins that gives us access to the same SJOne pins. This lets us reach the pins without having to disconnect the SJOne board from the PCB, so we can connect different debugging tools to them. It also provides a more accurate testing environment since we can test directly on the PCB. |

Hardware Interface

In this section we provide details on the hardware interfaces used for each system.

Power Management

The EV-VN7010AJ has a very basic control mechanism. It has an output enable pin, a MultiSense enable pin, and MultiSense multiplexer select lines. Each of the necessary pins is tied to +5V, GND, or a GPIO pin on the SJOne Board. The MultiSense pin outputs information about the current battery voltage and is simply connected to an ADC pin on the SJOne board.

Drive

The drive system consists of two main parts - the motor driver with its propulsion motor, and the steering servo. We provide information about each of these components' hardware interfaces.

Motor Driver & Propulsion Motor

The motor driver is used to control the propulsion motor via OUTA and OUTB. It is driven by the SJOne board using a PWM signal connected to pin P2.0. The PWM frequency is set to 20 kHz (20,000 Hz).

Steering Servo

Our steering servo is driven via a PWM signal as well. The PWM frequency is set to 50 Hz. However, since we are already using a PWM signal for the motor, we used a repetitive interrupt timer (RIT) to manually generate a PWM signal using a GPIO pin for the steering servo.

The RIT had to be modified to allow us to count in microseconds (us), since the original only allows counting in ms.

To do this, we added an additional parameter, uint32_t time.

The time and prefix values are used to calculate our compare value, which determines how often the callback function is triggered.

Since we wanted our function to trigger every 50 us, we need to calculate how many CPU clock cycles must elapse before the callback is triggered.

For example, to trigger our callback every 50 us, our prefix is 1000000 (the number of us in 1 second) and our time is 50 (us). Dividing the prefix by the time gives the number of 50 us intervals in 1 second, which is 20000. Dividing our system clock (48 MHz) by that number gives the number of CPU cycles we want before the callback is triggered: 48000000 / 20000 = 2400.
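The arithmetic above can be checked on a host machine with a small sketch. The helper name `rit_compare_value` is hypothetical and takes the CPU clock as a parameter so that it can run without the board; it only mirrors the calculation described here.

```c
#include <assert.h>
#include <stdint.h>

/* Number of CPU cycles between RIT callbacks.
 * cpu_hz: system clock in Hz
 * time:   callback interval, in units of 1/prefix seconds
 * prefix: number of those units per second (1000000 for us, 1000 for ms) */
uint32_t rit_compare_value(uint32_t cpu_hz, uint32_t time, uint32_t prefix)
{
    assert(time != 0 && prefix >= time);
    uint32_t callbacks_per_second = prefix / time;  /* 1000000 / 50 = 20000 */
    return cpu_hz / callbacks_per_second;           /* 48000000 / 20000 = 2400 */
}
```

With a 48 MHz clock, a 50 us interval gives 2400 cycles, while the original 1 ms granularity (prefix 1000, time 1) gives 48000 cycles.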

// function prototype

void rit_enable(void_func_t function, uint32_t time, uint32_t prefix);

// Enable RIT - function, time, prefix

rit_enable(RIT_PWM, RIT_INCREMENT_US, MICROSECONDS);

// modified calculation

LPC_RIT->RICOMPVAL = sys_get_cpu_clock() / (prefix / time);

We used pin P1.19 as a GPIO output pin to generate our PWM signal. Our RIT callback brings this GPIO pin high or low.

The algorithm to generate the signal is as follows:

- Initialize a static counter to 0

- Increment by 50 on each callback (we chose 50 us so as not to eat up too much CPU time)

- Check if counter is greater than or equal to our period (20000 us, i.e. 50 Hz)

  - Assert GPIO pin high

  - Reset count

- Check if counter is greater than or equal to our target

  - Bring GPIO pin low
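The steps above can be sketched as a host-testable state machine. This is a minimal illustration, not our actual callback: the GPIO pin is replaced by a boolean, the 1500 us default target is a placeholder, and the names `soft_pwm_tick`, `soft_pwm_set_target`, and `soft_pwm_pin_is_high` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TICK_US    50u      /* callback interval, from the text */
#define PERIOD_US  20000u   /* one 50 Hz servo period in us */

static uint32_t counter_us = 0;      /* time elapsed in the current period */
static uint32_t target_us  = 1500u;  /* desired high time; placeholder value */
static bool     pin_high   = true;   /* stands in for the GPIO output */

/* Set the desired high time in us (must be shorter than one period). */
void soft_pwm_set_target(uint32_t high_time_us)
{
    assert(high_time_us > 0 && high_time_us < PERIOD_US);
    target_us = high_time_us;
}

/* Body of the periodic callback: call once every TICK_US microseconds. */
void soft_pwm_tick(void)
{
    counter_us += TICK_US;
    if (counter_us >= PERIOD_US) {   /* period elapsed: start a new pulse */
        pin_high = true;
        counter_us = 0;
    }
    if (counter_us >= target_us) {   /* high time elapsed: end the pulse */
        pin_high = false;
    }
}

bool soft_pwm_pin_is_high(void)
{
    return pin_high;
}
```

Because the counter advances in 50 us steps, the high time can only be resolved to multiples of 50 us.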

NOTE Our target has to be in terms of us since all of our calculations are in us. We get the target for our RIT by obtaining a target angle from control. We use a mapping function to generate our target in us from the desired angle. The initial values for the mapping function were found by driving our steering servo with a function generator.

- We found the min and max values in terms of ms and converted them to us.

- We then used these min and max values to move the wheels and marked each extreme on a sheet of paper.

- Using the marks, we calculated the angle and used the midpoint of the angle as 0°. That means that the minimum is a negative angle value and maximum is a positive angle value.

We were then able to generate a simple mapping function to map angles in degrees to us using:

(uint32_t) (((angle - MIN_STEERING_DEGREES) * OUTPUT_RANGE / INPUT_RANGE) + MIN_MICRO_SEC_STEERING);
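A self-contained sketch of this mapping is shown below. The calibration numbers (±30 degrees, 1000 to 2000 us) are placeholders rather than our measured values, `MAX_STEERING_DEGREES` and `MAX_MICRO_SEC_STEERING` are assumed names used to derive the two ranges, and the clamping step is an added safety assumption.

```c
#include <assert.h>
#include <stdint.h>

/* Placeholder calibration values; real ones come from the measurement above. */
#define MIN_STEERING_DEGREES    (-30.0f)
#define MAX_STEERING_DEGREES    (30.0f)    /* assumed name */
#define MIN_MICRO_SEC_STEERING  (1000.0f)
#define MAX_MICRO_SEC_STEERING  (2000.0f)  /* assumed name */
#define INPUT_RANGE   (MAX_STEERING_DEGREES - MIN_STEERING_DEGREES)
#define OUTPUT_RANGE  (MAX_MICRO_SEC_STEERING - MIN_MICRO_SEC_STEERING)

/* Map a steering angle in degrees to a servo pulse width in us. */
uint32_t steering_angle_to_us(float angle)
{
    assert(INPUT_RANGE > 0.0f);
    /* Clamp to the calibrated range so the servo is never over-driven. */
    if (angle < MIN_STEERING_DEGREES) angle = MIN_STEERING_DEGREES;
    if (angle > MAX_STEERING_DEGREES) angle = MAX_STEERING_DEGREES;
    /* Linear interpolation between the two calibrated end points. */
    return (uint32_t)(((angle - MIN_STEERING_DEGREES) * OUTPUT_RANGE / INPUT_RANGE)
                      + MIN_MICRO_SEC_STEERING);
}
```

With these placeholder values, an angle of 0 degrees maps to the midpoint of the pulse range, 1500 us.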

LCD Screen

The LCD screen was interfaced with the SJOne board using GPIO pins for data (D0, D1, D2, D3), register select (RS), and enable (EN).

The pins used are P1.29, P1.28, P2.6, P2.7, P0.29, and P0.30 for D0, D1, D2, D3, RS, and EN respectively.

The driver for the LCD screen does the following:

- Initialize LCD screen and GPIO pins used for the screen

commandWrite(0x02); //Four-bit Mode?

commandWrite(0x0F); //Display On, Underline Blink Cursor