Difference between revisions of "F17: Optimus"

Proj user5 (talk | contribs) (→Bluetooth Controller) |

Proj user5 (talk | contribs) (→Hardware Specifications) |

||

| (169 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | |||

| − | Optimus | + | {| |

| + | | | ||

| + | | | ||

| + | |[[File:CMPE243_F17_Optimus_car_2.png|300px|thumb|left|Optimus left view]] | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |[[File:CMPE243_F17_Optimus_car_1.png|600px|thumb|center|Optimus front view]] | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |[[File:CMPE243_F17_Optimus_car_3.png|300px|thumb|right|Optimus right view]] | ||

| + | | | ||

| + | |} | ||

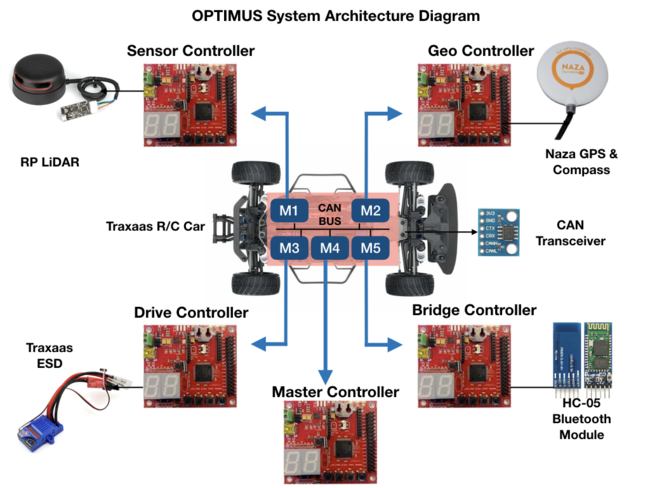

| − | + | '''Optimus''' - An Android-app-controlled, self-navigating car powered by the SJOne (LPC1758) microcontroller. Optimus maneuvers through the selected routes using LIDAR and GPS sensors. This wiki page is the detailed report on how Team Optimus built the car. | |

== '''Abstract''' == | == '''Abstract''' == | ||

| − | Embedded systems are omnipresent, and one of their unique yet powerful applications is the self-driving car. In this project we aim to build a Self-Navigating | + | Embedded systems are omnipresent, and one of their unique yet powerful applications is the self-driving car. In this project we aim to build a self-navigating car named Optimus that navigates from a source location to a selected destination while avoiding obstacles in its path. |

The key features the system supports are | The key features the system supports are | ||

| − | 1. | + | 1. Android Application with Customized map and Dashboard Information. |

| − | 2. LIDAR powered obstacle avoidance | + | 2. LIDAR powered obstacle avoidance. |

3. Route Calculation and Maneuvering to the selected destination | 3. Route Calculation and Maneuvering to the selected destination | ||

| − | 4. Self-adjusting the speed of the car | + | 4. Self-adjusting the speed of the car on a ramp. |

| − | The system is built on FreeRTOS running on LPC1758 SJOne controller | + | The system is built on FreeRTOS running on the LPC1758 SJOne controller, together with an Android application. |

| − | The building block of Optimus are the five controllers communicating | + | The building blocks of Optimus are five controllers, each designed to handle a dedicated task, communicating over a high-speed CAN network. The controllers integrate the various sensors used to navigate the car. |

| − | 1. Master Controller - handles the Route Maneuvering and Obstacle Avoidance | + | '''1. Master Controller''' - handles the Route Maneuvering and Obstacle Avoidance |

| − | 2. Sensor Controller - detects the surrounding objects | + | '''2. Sensor Controller''' - detects the surrounding objects |

| − | 3. Geo Controller - provides current location | + | '''3. Geo Controller''' - provides current location |

| − | 4. Drive Controller - controls the ESC | + | '''4. Drive Controller''' - controls the ESC |

| − | 5. Bridge controller - Interfaces the system to Android app | + | '''5. Bridge controller''' - Interfaces the system to Android app |

| − | + | {| | |

| − | + | |[[ File: CMPE243_F17_Optimus_SystemArchitecture.png|650px|thumb|left|System Architecture]] | |

| − | [[ File: CMPE243_F17_Optimus_SystemArchitecture.png| | + | | |

| + | |[[ File: CMPE243_F17_Optimus_Application.png |400px|thumb|right|Android Application]] | ||

| + | | | ||

| + | |} | ||

== '''Objectives & Introduction''' == | == '''Objectives & Introduction''' == | ||

| Line 36: | Line 53: | ||

''' Sensor Controller: ''' | ''' Sensor Controller: ''' | ||

| − | Sensor controller uses RPLIDAR to scan its 360-degree environment within 6-meter range. It sends | + | The sensor controller uses an RPLIDAR to scan its 360-degree environment within a 6-meter range. It sends the scanned obstacle data to the master controller and the bridge controller. |

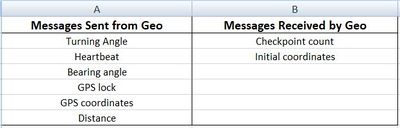

''' Geo Controller: ''' | ''' Geo Controller: ''' | ||

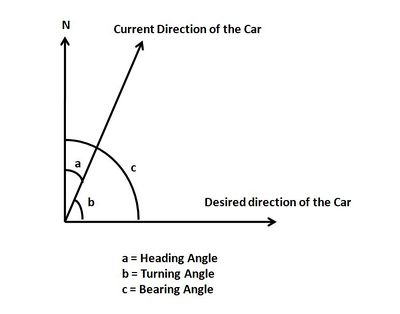

| − | Geo controller uses NAZA GPS module that provides car current GPS location and compass angle. It calculates heading and bearing angle that helps the car to turn | + | The Geo controller uses a NAZA GPS module that provides the car's current GPS location and compass angle. It calculates the heading and bearing angles, which help the car turn toward the destination. |

''' Drive Controller: ''' | ''' Drive Controller: ''' | ||

| Line 45: | Line 62: | ||

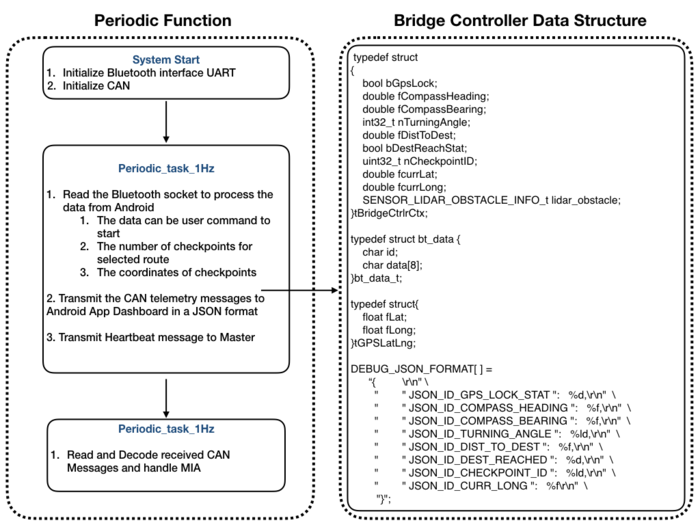

''' Bridge Controller: ''' | ''' Bridge Controller: ''' | ||

| − | Bridge controller works as a gateway between the Android application and Self-driving car and | + | The bridge controller works as a gateway between the Android application and the self-driving car and passes information between them. |

''' Master Controller: ''' | ''' Master Controller: ''' | ||

| Line 53: | Line 70: | ||

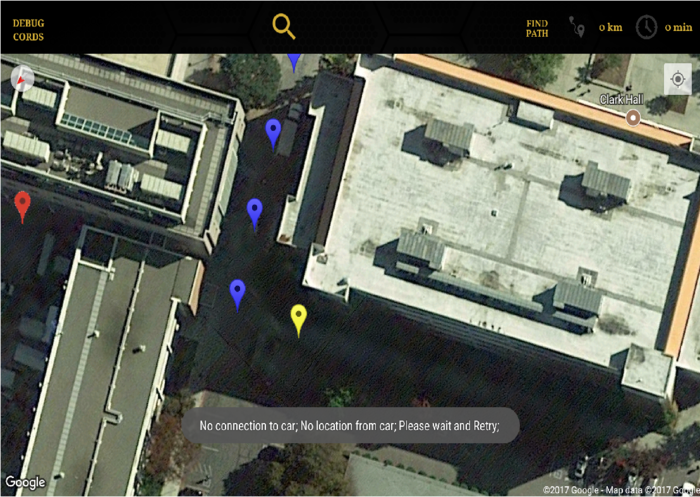

Android application communicates with the car through Bridge controller. It sends the destination location to be reached to the Geo controller and also provides all the Debugging information of the Car like | Android application communicates with the car through Bridge controller. It sends the destination location to be reached to the Geo controller and also provides all the Debugging information of the Car like | ||

| − | 1. Obstacles information around the car | + | 1. Obstacles information around the car |

| − | 2. Car's turning angle | + | 2. Car's turning angle |

3. Compass value | 3. Compass value | ||

| Line 63: | Line 80: | ||

5. Car's GPS location | 5. Car's GPS location | ||

| − | 6. Destination reached status | + | 6. Destination reached status |

7. Total checkpoints in the route | 7. Total checkpoints in the route | ||

| − | 8. Current checkpoint indication | + | 8. Current checkpoint indication |

== '''Team Members & Responsibilities''' == | == '''Team Members & Responsibilities''' == | ||

| − | * Motor Controller | + | * '''Master Controller''': |

| − | ** Unnikrishnan | + | ** Revathy |

| + | |||

| + | * '''Motor Controller''': | ||

| + | ** [https://www.linkedin.com/in/unnikrishnan-sreekumar-4a3b8922/ Unnikrishnan]<br> | ||

** [https://www.linkedin.com/in/rajul-gupta-5b366ba9/ Rajul]<br> | ** [https://www.linkedin.com/in/rajul-gupta-5b366ba9/ Rajul]<br> | ||

| − | * Android and Communication Bridge | + | * '''Sensor and I/O Controller''': |

| − | ** Parimal | + | ** [https://www.linkedin.com/in/sushma-nagaraj Sushma]<br> |

| + | ** [https://www.linkedin.com/in/supradeepk/ Supradeep]<br> | ||

| + | ** [https://www.linkedin.com/in/harshitha-bura-4926727a/ Harshitha] | ||

| + | |||

| + | * '''Android and Communication Bridge''': | ||

| + | ** [https://www.linkedin.com/in/parimal-basu-67b92430 Parimal]<br> | ||

** [https://www.linkedin.com/in/kripanandjha Kripanand Jha]<br> | ** [https://www.linkedin.com/in/kripanandjha Kripanand Jha]<br> | ||

| − | ** Unnikrishnan | + | ** [https://www.linkedin.com/in/unnikrishnan-sreekumar-4a3b8922/ Unnikrishnan]<br> |

| − | * Geographical Controller: | + | * '''Geographical Controller''': |

** [https://www.linkedin.com/in/sneha-shahi-8b1636152 Sneha]<br> | ** [https://www.linkedin.com/in/sneha-shahi-8b1636152 Sneha]<br> | ||

** [https://www.linkedin.com/in/sarveshharhare Sarvesh Harhare]<br> | ** [https://www.linkedin.com/in/sarveshharhare Sarvesh Harhare]<br> | ||

| − | * | + | * '''Integration Testing''': |

** Revathy | ** Revathy | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

** [https://www.linkedin.com/in/kripanandjha Kripanand Jha]<br> | ** [https://www.linkedin.com/in/kripanandjha Kripanand Jha]<br> | ||

| + | **[https://www.linkedin.com/in/unnikrishnan-sreekumar-4a3b8922/ Unnikrishnan] | ||

| − | * PCB Design | + | * '''PCB Design''': |

** [https://www.linkedin.com/in/rajul-gupta-5b366ba9/ Rajul]<br> | ** [https://www.linkedin.com/in/rajul-gupta-5b366ba9/ Rajul]<br> | ||

| Line 379: | Line 396: | ||

| | | | ||

* <font color="orange"> Major Feature: Full feature integration test <br></font> | * <font color="orange"> Major Feature: Full feature integration test <br></font> | ||

| − | | | + | | complete |

|} | |} | ||

| Line 470: | Line 487: | ||

|- | |- | ||

| 11 | | 11 | ||

| − | | [https://www.amazon.com/DJI- | + | | [https://www.amazon.com/DJI-NAZA-M-V2-GPS-Module/dp/B00O11YQXQ/ref=sr_1_5?ie=UTF8&qid=1513760869&sr=8-5&keywords=naza+gps GPS Module] |

| 1 | | 1 | ||

| − | | $ | + | | $69 |

|- | |- | ||

|- | |- | ||

| Line 496: | Line 513: | ||

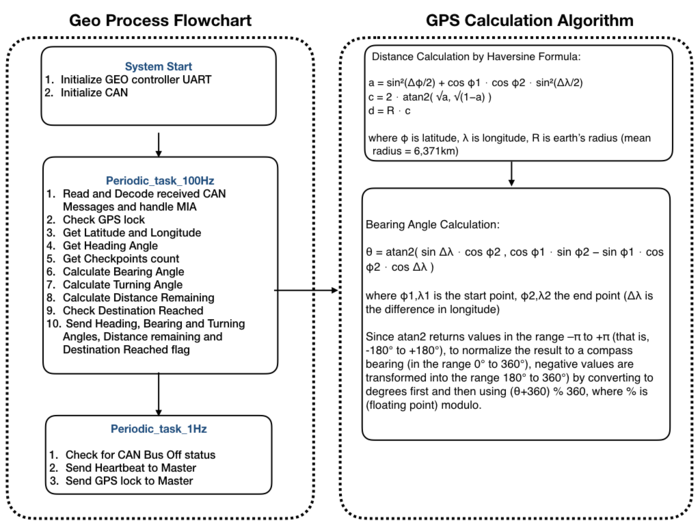

== '''CAN Communication''' == | == '''CAN Communication''' == | ||

| − | System Nodes : MASTER , MOTOR , BLE , SENSOR , GEO | + | The controllers are connected on a CAN bus running at a 100K baud rate. |

| + | System Nodes: MASTER, MOTOR, BLE, SENSOR, GEO | ||

{| class="wikitable" | {| class="wikitable" | ||

| Line 511: | Line 529: | ||

| 1 | | 1 | ||

| 2 | | 2 | ||

| − | | System | + | | System Stop command to stop motor |

| Motor | | Motor | ||

|- | |- | ||

| Line 569: | Line 587: | ||

| 214 | | 214 | ||

| Current Coordinate | | Current Coordinate | ||

| − | | Master | + | | Master,BLE |

|- | |- | ||

|- | |- | ||

| Line 625: | Line 643: | ||

|} | |} | ||

| − | == '''DBC File''' == | + | === '''DBC File''' === |

| + | |||

| + | The CAN message IDs transmitted and received by the controllers are assigned according to the priority of the messages. | ||

| + | The priority levels are as follows: | ||

| + | |||

| + | Priority Level 1 - User Commands | ||

| + | |||

| + | Priority Level 2 - Sensor data | ||

| + | |||

| + | Priority Level 3 - Status Signals | ||

| + | |||

| + | Priority Level 4 - Heartbeat | ||

| + | |||

| + | Priority Level 5 - Telemetry signals to display in I/O | ||

| + | |||

| + | BU_: DBG DRIVER IO MOTOR SENSOR MASTER GEO BLE | ||

| + | |||

| + | BO_ 1 BLE_START_STOP_CMD: 1 BLE | ||

| + | SG_ BLE_START_STOP_CMD_start : 0|4@1+ (1,0) [0|1] "" MASTER | ||

| + | SG_ BLE_START_STOP_CMD_reset : 4|4@1+ (1,0) [0|1] "" MASTER | ||

| + | |||

| + | BO_ 2 MASTER_SYS_STOP_CMD: 1 MASTER | ||

| + | SG_ MASTER_SYS_STOP_CMD_stop : 0|8@1+ (1,0) [0|1] "" MOTOR | ||

| + | |||

| + | BO_ 212 BLE_GPS_DATA: 8 BLE | ||

| + | SG_ BLE_GPS_long : 0|32@1- (0.000001,0) [0|0] "" GEO | ||

| + | SG_ BLE_GPS_lat : 32|32@1- (0.000001,0) [0|0] "" GEO | ||

| + | |||

| + | BO_ 213 BLE_GPS_DATA_CNT: 1 BLE | ||

| + | SG_ BLE_GPS_COUNT : 0|8@1+ (1,0) [0|0] "" GEO,SENSOR | ||

| + | |||

| + | BO_ 214 GEO_CURRENT_COORD: 8 GEO | ||

| + | SG_ GEO_CURRENT_COORD_LONG : 0|32@1- (0.000001,0) [0|0] "" MASTER,BLE | ||

| + | SG_ GEO_CURRENT_COORD_LAT : 32|32@1- (0.000001,0) [0|0] "" MASTER,BLE | ||

| + | |||

| + | BO_ 195 GEO_TELECOMPASS: 6 GEO | ||

| + | SG_ GEO_TELECOMPASS_compass : 0|12@1+ (0.1,0) [0|360.0] "" MASTER,BLE | ||

| + | SG_ GEO_TELECOMPASS_bearing_angle : 12|12@1+ (0.1,0) [0|360.0] "" MASTER,BLE | ||

| + | SG_ GEO_TELECOMPASS_distance : 24|12@1+ (0.1,0) [0|0] "" MASTER,BLE | ||

| + | SG_ GEO_TELECOMPASS_destination_reached : 36|1@1+ (1,0) [0|1] "" MASTER,BLE | ||

| + | SG_ GEO_TELECOMPASS_checkpoint_id : 37|8@1+ (1,0) [0|0] "" MASTER,BLE | ||

| + | |||

| + | BO_ 194 MASTER_TELEMETRY: 3 MASTER | ||

| + | SG_ MASTER_TELEMETRY_gps_mia : 0|1@1+ (1,0) [0|1] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_sensor_mia : 1|1@1+ (1,0) [0|1] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_sensor_heartbeat : 2|1@1+ (1,0) [0|1] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_ble_heartbeat : 3|1@1+ (1,0) [0|1] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_motor_heartbeat : 4|1@1+ (1,0) [0|1] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_geo_heartbeat : 5|1@1+ (1,0) [0|1] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_sys_status : 6|2@1+ (1,0) [0|3] "" BLE | ||

| + | SG_ MASTER_TELEMETRY_gps_tele_mia : 8|1@1+ (1,0) [0|1] "" BLE | ||

| + | |||

| + | BO_ 196 GEO_TELEMETRY_LOCK: 1 GEO | ||

| + | SG_ GEO_TELEMETRY_lock : 0|8@1+ (1,0) [0|0] "" MASTER,SENSOR,BLE | ||

| + | |||

| + | BO_ 3 SENSOR_LIDAR_OBSTACLE_INFO: 6 SENSOR | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR0 : 0|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR1 : 4|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR2 : 8|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR3 : 12|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR4 : 16|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR5 : 20|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR6 : 24|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR7 : 28|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR8 : 32|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR9 : 36|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR10 : 40|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR11 : 44|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

| + | |||

| + | BO_ 4 GEO_TURNING_ANGLE: 2 GEO | ||

| + | SG_ GEO_TURNING_ANGLE_degree : 0|9@1- (1,0) [-180|180] "" MASTER,BLE | ||

| + | |||

| + | |||

| + | The CAN DBC file is available at the GitLab link below: | ||

| + | |||

https://gitlab.com/optimus_prime/optimus/blob/master/_can_dbc/243.dbc <br> | https://gitlab.com/optimus_prime/optimus/blob/master/_can_dbc/243.dbc <br> | ||
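As a rough illustration of how a receiving node might unpack two of the messages defined above, the sketch below decodes the 4-bit sector fields of SENSOR_LIDAR_OBSTACLE_INFO and the 9-bit signed GEO_TURNING_ANGLE_degree signal. The function names are hypothetical and not taken from the project; the bit layout follows the little-endian (<code>@1</code>) start bits and lengths listed in the DBC. The project's own decode code may be structured differently.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Number of sector signals in SENSOR_LIDAR_OBSTACLE_INFO (SECTOR0..SECTOR11).
constexpr std::size_t kSectorCount = 12;

// Hypothetical helper: returns the 4-bit track value for one sector.
// Sector i starts at bit 4*i, so even sectors use the low nibble of
// byte i/2 and odd sectors use the high nibble.
std::uint8_t lidar_sector_track(const std::array<std::uint8_t, 6>& payload,
                                std::size_t sector)
{
    assert(sector < kSectorCount);
    const std::uint8_t byte = payload[sector / 2];
    const unsigned shift = (sector % 2) * 4;
    return static_cast<std::uint8_t>((byte >> shift) & 0x0F);
}

// Hypothetical helper: decodes GEO_TURNING_ANGLE_degree, a 9-bit signed
// value starting at bit 0 of a 2-byte payload (scale 1, offset 0).
int decode_turning_angle(const std::array<std::uint8_t, 2>& payload)
{
    const unsigned raw = (payload[0] | (payload[1] << 8)) & 0x1FF;
    // Bit 8 is the sign bit of the 9-bit two's-complement value.
    return (raw & 0x100) ? static_cast<int>(raw) - 512 : static_cast<int>(raw);
}
```

For example, a first payload byte of 0x92 carries track 2 for sector 0 and track 9 for sector 1.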

| + | |||

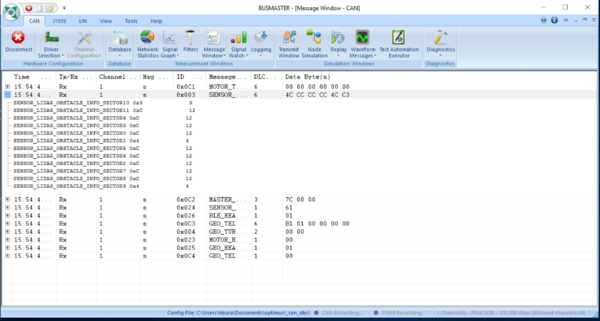

| + | === CAN Bus Debugging === | ||

| + | We connected a PCAN dongle to the host PC and monitored the CAN bus traffic with the BusMaster tool. A screenshot of the BusMaster log is shown below. | ||

| + | |||

| + | [[ File:CMPE243_F17_Optimus_Busmaster.png|600px|thumb|center|| BusMaster CAN Signal Log]] | ||

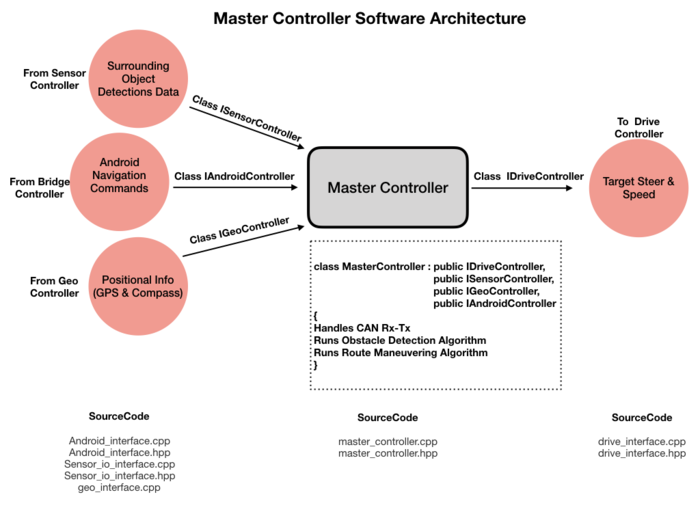

== '''Hardware & Software Architecture''' == | == '''Hardware & Software Architecture''' == | ||

| − | == Master Controller == | + | == '''Master Controller''' == |

=== Software Architecture Design === | === Software Architecture Design === | ||

| − | The Master Controller integrates the functionality of all other controllers and acts as the Central Control Unit of the | + | The Master Controller integrates the functionality of all other controllers and acts as the Central Control Unit of the self-navigating car. The two major functions handled by the Master Controller are obstacle avoidance and route maneuvering. |

| − | The overview of Master Controller Software Architecture is | + | An overview of the Master Controller software architecture is shown in the figure below. |

[[ File: CMPE243_F17_Optimus_MasterSWArchitecture.png|700px|thumb|center|| SW Architecture]] | [[ File: CMPE243_F17_Optimus_MasterSWArchitecture.png|700px|thumb|center|| SW Architecture]] | ||

| − | As an analogy to Human driving, it receives the inputs from sensors to determine the surrounding of the | + | By analogy with human driving, it receives inputs from the sensors to determine the surroundings of the self-navigating car and makes decisions based on the environment and the car's current location. The inputs received and the output sent by the Master are listed below: |

| − | 1. Lidar Object | + | Input to Master: |

| + | |||

| + | 1. Lidar Object Detection information - To determine if there is an obstacle in the path of navigation | ||

2. GPS and Compass Reading - To understand the Heading and Bearing angle to decide the direction of movement | 2. GPS and Compass Reading - To understand the Heading and Bearing angle to decide the direction of movement | ||

3. User command from Android - To stop or Navigate to the Destination | 3. User command from Android - To stop or Navigate to the Destination | ||

| + | |||

| + | Output from Master: | ||

| + | |||

| + | 1. Motor control information - sends the target Speed and Steering direction to the Motor. | ||

=== Software Implementation === | === Software Implementation === | ||

| − | The Master | + | The Master controller runs two major algorithms, Route Maneuvering and Obstacle Avoidance. It also handles system start and stop based on specific commands. |

| − | The implicit requirement is that When the user selects the destination | + | The implicit requirement is that when the user selects the destination, the route is calculated and its checkpoints are sent from the Android app through the bridge controller to the Geo controller. Once the Geo controller has received a complete set of checkpoints, the master controller starts the system based on the "Checkpoint ID". If the ID is non-zero, the route has started and the Master controller runs the Route Maneuvering algorithm. |

The Overall control flow of master controller is shown in the below figure. | The Overall control flow of master controller is shown in the below figure. | ||

[[ File: CMPE243_F17_Optimus_MasterControlFlow.png|700px|thumb|center|| Process Flowchart]] | [[ File: CMPE243_F17_Optimus_MasterControlFlow.png|700px|thumb|center|| Process Flowchart]] | ||

| + | |||

| + | ==== Unit Testing ==== | ||

| + | |||

| + | The obstacle avoidance algorithm is unit tested using the Cgreen unit testing framework. The complete unit test code is in the git project. | ||

| + | |||

| + | Ensure(test_obstacle_avoidance) | ||

| + | { | ||

| + | //Obstacle Avoidance Algorithm | ||

| + | pmaster->set_target_steer(MC::steer_right); | ||

| + | mock_obstacle_detections(MC::steer_right,MC::steer_right,false,false,false,false,false,false,true); | ||

| + | assert_that(pmaster->RunObstacleAvoidanceAlgo(obs_status),is_equal_to(expected_steer)); | ||

| + | assert_that(pmaster->get_forward(),is_equal_to(true)); | ||

| + | assert_that(pmaster->get_target_speed(),is_equal_to(MC::speed_slow)); | ||

| + | } | ||

| + | Ensure(test_obstacle_detection) | ||

| + | { | ||

| + | //Obstacle Detection Algorithm | ||

| + | mock_CAN_Rx_Lidar_Info(2,2,6,0,2,2,4,0,2,0,5,0); | ||

| + | set_expected_detection(true,false,true,false,true,false,false); | ||

| + | actual_detections = psensor->RunObstacleDetectionAlgo(); | ||

| + | assert_that(compare_detections(actual_detections) , is_equal_to(7)); | ||

| + | } | ||

| + | TestSuite* master_controller_suite() | ||

| + | { | ||

| + | TestSuite* master_suite = create_test_suite(); | ||

| + | add_test(master_suite,test_obstacle_avoidance); | ||

| + | add_test(master_suite,test_obstacle_detection); | ||

| + | return master_suite; | ||

| + | } | ||

| + | |||

| + | ==== On board debug indications ==== | ||

| + | |||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! scope="col"| Sr.No | ||

| + | ! scope="col"| LED Number | ||

| + | ! scope="col"| Debug Signal | ||

| + | |- | ||

| + | ! scope="row"| 1 | ||

| + | | LED 1 | ||

| + | | Sensor Heartbeat, Sensor Data Mia | ||

| + | |||

| + | |- | ||

| + | ! scope="row"| 2 | ||

| + | | LED 2 | ||

| + | | Geo Heartbeat, Turning Angle Signal Mia | ||

| + | |||

| + | |- | ||

| + | ! scope="row"| 3 | ||

| + | | LED 3 | ||

| + | | Bridge Heartbeat mia | ||

| + | |||

| + | |- | ||

| + | ! scope="row"| 4 | ||

| + | | LED 4 | ||

| + | | Motor Heartbeat mia | ||

| + | |- | ||

| + | |} | ||

=== Design Challenges === | === Design Challenges === | ||

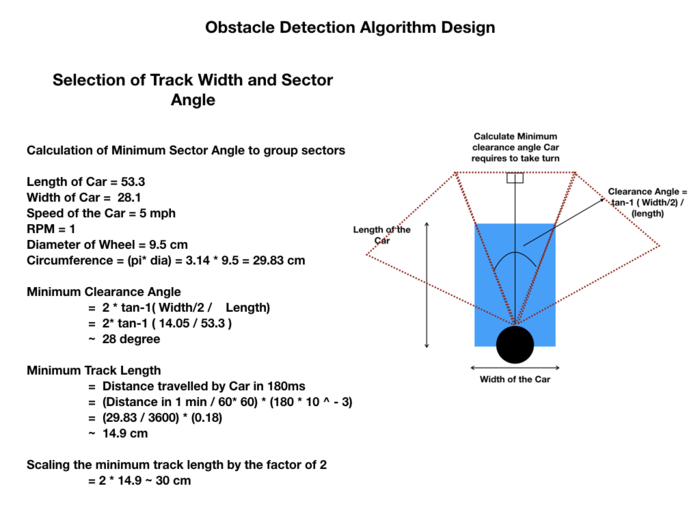

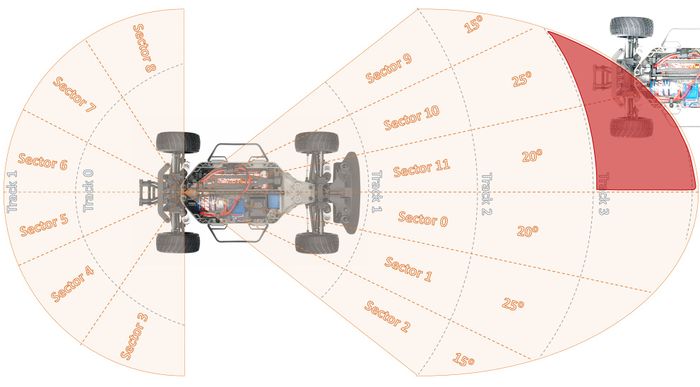

| − | The critical part in Obstacle Avoidance Algorithm is designing | + | The critical part of the Obstacle Avoidance algorithm is designing two pieces: 1. obstacle detection and 2. obstacle avoidance. Since we get a 360-degree view of obstacles, we need to group the zones into sectors and tracks to process the 360-degree detections and make decisions accordingly. |

[[ File: CMPE243_F17_Optimus_ObstacleAvoidanceAlgo.png|700px|thumb|center|| Obstacle Avoidance Design]] | [[ File: CMPE243_F17_Optimus_ObstacleAvoidanceAlgo.png|700px|thumb|center|| Obstacle Avoidance Design]] | ||

| − | == Motor Controller == | + | == '''Motor Controller''' == |

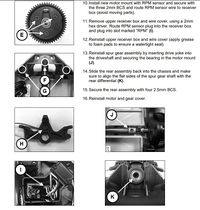

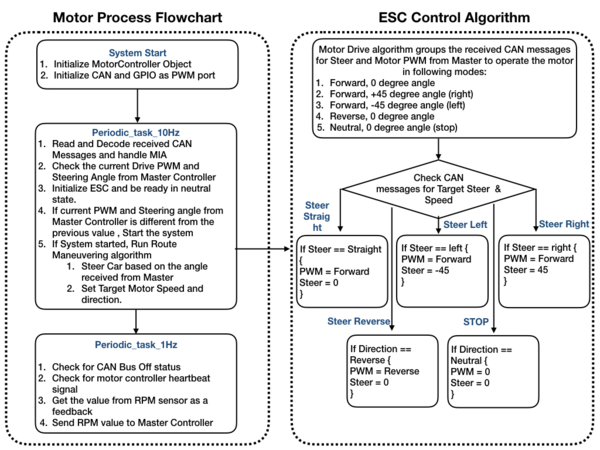

=== Design & Implementation === | === Design & Implementation === | ||

| Line 709: | Line 870: | ||

|} | |} | ||

| − | + | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

====Hardware Specifications==== | ====Hardware Specifications==== | ||

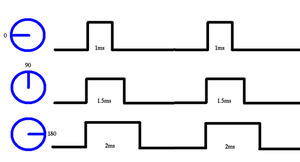

* 1. DC Motor, Servo and ESC | * 1. DC Motor, Servo and ESC | ||

This is a Traxxas Titan 380 18-turn brushed motor. The DC motor comes with the Electronic Speed Control(ESC) module. The ESC module can control both servo and DC motor using Pulse Width Modulation (PWM) control. ESC also requires an initial calibration: | This is a Traxxas Titan 380 18-turn brushed motor. The DC motor comes with the Electronic Speed Control(ESC) module. The ESC module can control both servo and DC motor using Pulse Width Modulation (PWM) control. ESC also requires an initial calibration: | ||

| − | + | The ESC is operated using PWM signals. The DC motor PWM is mapped to the range -100% to 100%, where -100% means "reverse at full speed", 100% means "forward at full speed" and 0% means "stop or neutral". |

| − | + | The servo can also be operated safely using PWM. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | Also, servo can also be operated in Safe manner using PWM. | ||

<br> <br> | <br> <br> | ||

As we need a locked 0 –> 180 degrees motion in certain applications like robot arm, Humanoids etc. We use these Servo motors. These are Normal motors only with a potentiometer connected to its shaft which gives us the feedback of analog value and adjusts its angle according to its given input signal. | As we need a locked 0 –> 180 degrees motion in certain applications like robot arm, Humanoids etc. We use these Servo motors. These are Normal motors only with a potentiometer connected to its shaft which gives us the feedback of analog value and adjusts its angle according to its given input signal. | ||

So… How to Operate it? | So… How to Operate it? | ||

| − | A servo usually requires 5V->6V As VCC. ( | + | A servo usually requires 5 V to 6 V as VCC (industrial servos require more), a ground connection, and a signal to adjust its position. |

| − | The signal is a PWM waveform. For a servo we need to provide a PWM of frequency about 50Hz-200Hz (Refer the datasheet). | + | The signal is a PWM waveform. A servo needs a PWM frequency of about 50 Hz to 200 Hz (refer to the datasheet). At 50 Hz, one PWM period lasts 20 ms. If the on time within that 20 ms is 1 ms and the off time is 19 ms, the servo generally sits at the 0-degree position; as the on time increases from 1 ms to 2 ms, the angle moves from 0 to 180 degrees. |
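The relationship between angle, on time and duty cycle described above can be sketched as follows. This is a minimal illustration that assumes a 50 Hz signal and the generic 1 ms to 2 ms range from the text; the function names are hypothetical, and a real servo's limits should be taken from its datasheet.

```cpp
#include <cassert>

// Assumed timing, taken from the description above (not from a datasheet).
constexpr int kPeriodUs   = 20000; // 50 Hz PWM period
constexpr int kMinPulseUs = 1000;  // on time for 0 degrees
constexpr int kMaxPulseUs = 2000;  // on time for 180 degrees

// Hypothetical helper: on time in microseconds for a servo angle in degrees.
int servo_pulse_us(int angle_deg)
{
    assert(angle_deg >= 0 && angle_deg <= 180);
    return kMinPulseUs + (kMaxPulseUs - kMinPulseUs) * angle_deg / 180;
}

// Hypothetical helper: duty cycle in hundredths of a percent (750 = 7.50%).
int servo_duty_centi_percent(int angle_deg)
{
    return servo_pulse_us(angle_deg) * 10000 / kPeriodUs;
}
```

Under these assumptions, 90 degrees corresponds to a 1500 µs pulse, which is a 7.5% duty cycle. Rejecting angles outside 0 to 180 degrees keeps the pulse inside the safe range discussed in the next paragraphs.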

So where can it go wrong- | So where can it go wrong- | ||

[[File:CmpE243_F17_Servo_Motor_operation.png|thumb|center|300px|Servo Motor Operation]] | [[File:CmpE243_F17_Servo_Motor_operation.png|thumb|center|300px|Servo Motor Operation]] | ||

| − | Power->> The power we provide. Generally we tend to give a higher volt batteries for our applications by pulling the voltage down through regulators to 5Vs. But we surely can-not give supply to the servo through our uC as the servo eats up a hell lot of current | + | Power->> The power we provide. Generally we use higher-voltage batteries for our applications and pull the voltage down to 5 V through regulators. But we cannot power the servo through our uC, as the servo draws a lot of current. |

| − | Another way to burn the servo is at certain times the supply is given directly through the battery so the uC will not blow up. But if you Give a supply say 12Volts then boom. Your servo will go | + | Another way to burn the servo is at certain times the supply is given directly through the battery so the uC will not blow up. But if you Give a supply say 12Volts then boom. Your servo will go on for ever. |

PWM–> PWM should strictly be in the range between 1ms–> 2ms (refer datasheets) If by any mistakes the PWM goes out then boom the servo will start jittering and will heat up and heat up and will burn itself down. But this problem is easily identifiable as there is a jitter sound which if you have got enough experience with servos, you will totally notice the noise. So if the noise is there when you turn on the servo, turn it off right away and change the code ASAP. | PWM–> PWM should strictly be in the range between 1ms–> 2ms (refer datasheets) If by any mistakes the PWM goes out then boom the servo will start jittering and will heat up and heat up and will burn itself down. But this problem is easily identifiable as there is a jitter sound which if you have got enough experience with servos, you will totally notice the noise. So if the noise is there when you turn on the servo, turn it off right away and change the code ASAP. | ||

| Line 745: | Line 899: | ||

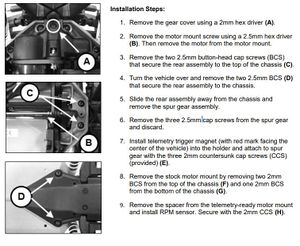

* 2. RPM Sensor | * 2. RPM Sensor | ||

| − | [[File: | + | The RPM sensor above requires a specific kind of Installation. '''STEPS ARE:''' |

| − | + | ||

| + | {| | ||

| + | | | ||

| + | | | ||

| + | |[[File:CmpE243_F17_RPM_install1.JPG |thumb|left|300px]] | ||

| + | | | ||

| + | | | ||

| + | |[[File:CmpE243_F17_RPM_install2.JPG |thumb|center|200px]] | ||

| + | | | ||

| + | |} | ||

| + | |||

| + | |||

| − | |||

| − | |||

| − | |||

Once the installation is done, the RPM can be read using the magnetic RPM sensor above. It gives a high pulse at every rotation of the wheel. Hence, to calculate the RPM, the output of the sensor is fed to a GPIO pin of the SJOne board. | Once the installation is done, the RPM can be read using the magnetic RPM sensor above. It gives a high pulse at every rotation of the wheel. Hence, to calculate the RPM, the output of the sensor is fed to a GPIO pin of the SJOne board. | ||
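A minimal sketch of the RPM calculation, assuming the pulses are counted (for example in a GPIO interrupt handler) over a fixed sampling window. The function name and the window length are illustrative assumptions, not the project's actual code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: converts a pulse count into revolutions per minute.
// The sensor gives one pulse per wheel rotation, so
// RPM = pulses * (60000 ms per minute) / window length in ms.
std::uint32_t rpm_from_pulses(std::uint32_t pulse_count, std::uint32_t window_ms)
{
    assert(window_ms > 0);
    return pulse_count * 60000u / window_ms;
}
```

For instance, 5 pulses counted in a 1000 ms window correspond to 300 RPM. A shorter window reacts faster but gives a coarser reading, since each pulse then represents a larger RPM step.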

| Line 766: | Line 928: | ||

Upon detection of uphill the pulse frequency from RPM Sensor reduces, that means car is slowing down. Hence, in that scenario, car is accelerated (increase PWM) further to maintain the required speed. Similarly in case of Downhill pulse frequency increases, which means car is speeding up. Hence, brakes (reduced PWM) are applied to compensate the increased speed. | Upon detection of uphill the pulse frequency from RPM Sensor reduces, that means car is slowing down. Hence, in that scenario, car is accelerated (increase PWM) further to maintain the required speed. Similarly in case of Downhill pulse frequency increases, which means car is speeding up. Hence, brakes (reduced PWM) are applied to compensate the increased speed. | ||
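The compensation described above can be sketched as a simple step controller. The step size, dead band and function name below are assumptions chosen for illustration; the project may use different values or a different control scheme.

```cpp
#include <algorithm>
#include <cassert>

// Illustrative tuning values (assumptions, not taken from the project).
constexpr double kStepPercent = 0.5;  // PWM change per control cycle
constexpr double kDeadbandRpm = 10.0; // ignore small RPM errors

// Hypothetical helper: returns the new forward PWM percentage.
// Uphill: measured RPM falls below target, so PWM is increased.
// Downhill: measured RPM rises above target, so PWM is reduced.
double compensate_pwm(double pwm_percent, double target_rpm, double measured_rpm)
{
    assert(target_rpm >= 0.0 && measured_rpm >= 0.0);
    const double error = target_rpm - measured_rpm;
    if (error > kDeadbandRpm) {
        pwm_percent += kStepPercent;
    } else if (error < -kDeadbandRpm) {
        pwm_percent -= kStepPercent;
    }
    // Keep the result inside the forward range 0% to 100%.
    return std::min(100.0, std::max(0.0, pwm_percent));
}
```

Calling this once per RPM sampling window nudges the PWM toward the target speed without large jumps; the dead band avoids constant small corrections on flat ground.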

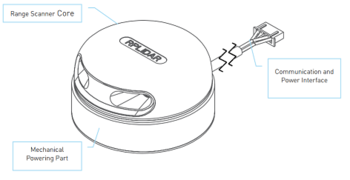

| − | == Sensor Controller == | + | == '''Sensor Controller''' == |

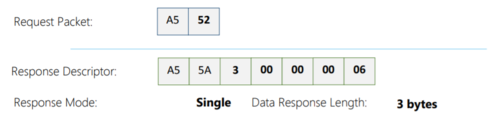

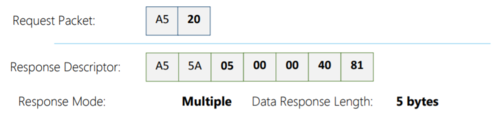

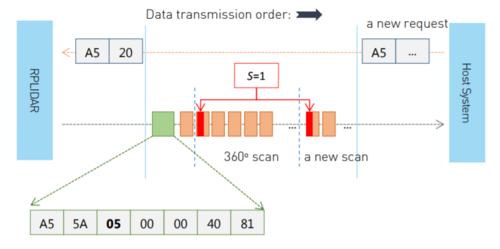

The Sensor is for detecting and avoiding obstacles. For this purpose we have used RPLIDAR by SLAMTEC. | The Sensor is for detecting and avoiding obstacles. For this purpose we have used RPLIDAR by SLAMTEC. | ||

| Line 819: | Line 981: | ||

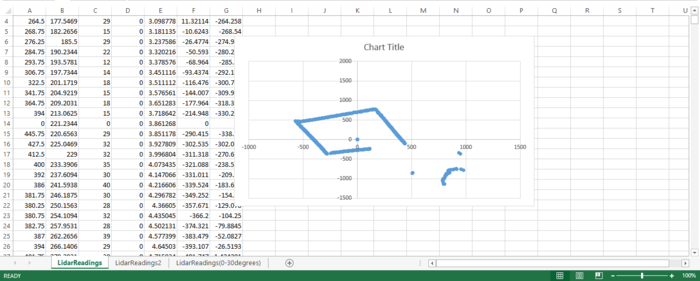

To perform the initial testing of the LIDAR and to check whether we were getting correct obstacle info, we made a setup enclosing the LIDAR on all four sides. By plotting the distance info given by the LIDAR in Microsoft Excel, we can visualize a map of the obstacles as detected by the LIDAR. The map plotted in Excel after closing almost all four sides of the LIDAR is shown in the figure below. | To perform the initial testing of the LIDAR and to check whether we were getting correct obstacle info, we made a setup enclosing the LIDAR on all four sides. By plotting the distance info given by the LIDAR in Microsoft Excel, we can visualize a map of the obstacles as detected by the LIDAR. The map plotted in Excel after closing almost all four sides of the LIDAR is shown in the figure below. | ||

| − | [ | + | [[File:CmpE243_F17_Optimus_LIDARobstacleMap.PNG|700px|thumb|center||Data Obtained from the LIDAR plotted on an Excel sheet]] |

====CAN DBC messages sent from the Sensor Controller==== | ====CAN DBC messages sent from the Sensor Controller==== | ||

| Line 837: | Line 999: | ||

SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR11 : 44|4@1+ (1,0) [0|12] "" MASTER,BLE | SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR11 : 44|4@1+ (1,0) [0|12] "" MASTER,BLE | ||

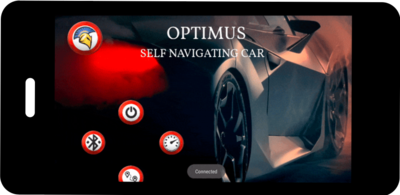

| − | == Android Application == | + | == '''Android Application''' == |

=== Description === | === Description === | ||

| Line 845: | Line 1,007: | ||

Optimus mobile Platform needs to be connected with a specific Device Address based on the BLE Chip type in use. | Optimus mobile Platform needs to be connected with a specific Device Address based on the BLE Chip type in use. | ||

| − | * OPTIMUS HOME | + | * OPTIMUS HOME |

| − | [[ File: | + | [[ File: CmpE243_F17_Optimus_Optimus App.gif|700px|thumb|center||Optimus App: OPTIMUS HOME]]<br> |

=== Features === | === Features === | ||

| Line 866: | Line 1,028: | ||

'''2. MAPS'''<br> | '''2. MAPS'''<br> | ||

| − | + | OPTIMUS App uses Google Maps for setting up the Routing Map information and to decide on the next checkpoint for the Car and the appropriate shortest route by computing the checkpoints using "Adjacency Matrix" and certain algorithms.<br> | |

| − | Google Maps are used along with other promising features to improve the navigation experience as the Route plot and Checkpoint mapping on groovy paths around campus are difficult to plan and route using Google Api(s). | + | Google Maps are used along with other promising features to improve the navigation experience as the Route plot and Checkpoint mapping on groovy paths around campus are difficult to plan and route using Google Api(s).<br> |

| + | |||

| + | * '''MAPS :: ANDROID - BLE COMMUNICATION JSON SCHEMA'''<br> | ||

| + | |||

| + | The App was also upgraded to have live tracking feature of Car's location by indicating the crossed marker with '''YELLOW_HUE''' color to distinguish the original path and the traversed path by the car.<br> | ||

| + | As soon as the car crosses a checkpoint marker the marker color will be updated to YELLOW from its original BLUE Color to indicate the checkpoint flag has been crossed.<br> | ||

| − | + | [[ File: CmpE243_F17_Optimus_Live_Track.JPG|700px|thumb|center||Optimus App: LIVE CAR TRACKING]]<br> | |

| − | [[ File: | ||

Optimus app uses interpolation schemes to calculate intermediate routes and to set checkpoints using Draggable Marker mechanism to set Destination and plot route path till the same.<br> | Optimus app uses interpolation schemes to calculate intermediate routes and to set checkpoints using Draggable Marker mechanism to set Destination and plot route path till the same.<br> | ||

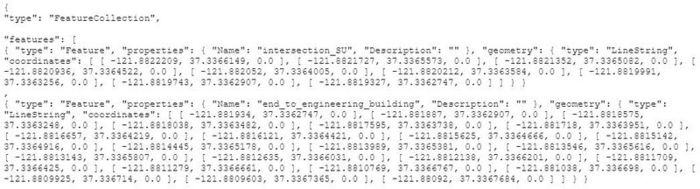

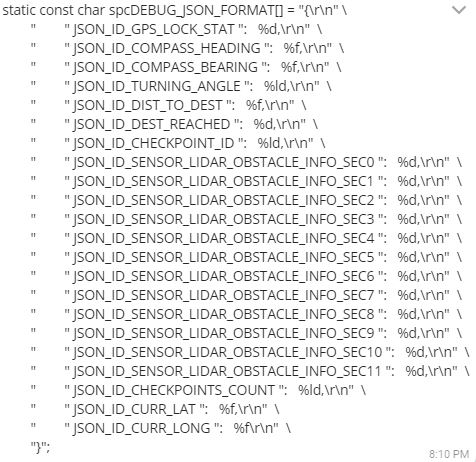

The Json Format shown has various tags for extracting checkpoint information using Json reader and plotting the points on the Map. Features of the Json Data packet are:<br> | The Json Format shown has various tags for extracting checkpoint information using Json reader and plotting the points on the Map. Features of the Json Data packet are:<br> | ||

| − | + | * '''Feature Properties:'''<br> | |

* Name : Description of the route Start Point<br> | * Name : Description of the route Start Point<br> | ||

* Description [optional] : Custom Description of the route<br> | * Description [optional] : Custom Description of the route<br> | ||

| Line 881: | Line 1,047: | ||

* coordinates : List of Lat-Long Coordinates till Next major Check point<br> | * coordinates : List of Lat-Long Coordinates till Next major Check point<br> | ||

| − | + | [[ File: CmpE243_F17_Optimus_Routes_Json.JPG|700px|thumb|center||ROUTE INTERPOLATION DATA]]<br> | |

| − | [[ File: | + | |

'''3. DASHBOARD'''<br> | '''3. DASHBOARD'''<br> | ||

| − | The Dashboard was designed to give an at-a-glance view, with a UI similar to a car dashboard. It shows compass values, bearing and heading angles, and LIDAR maps that mirror the data obtained from the LIDAR, which also helps in debugging the features and the values sent by the respective sensor modules. | + | The Dashboard was designed to give an at-a-glance view, with a UI similar to a car dashboard. It shows compass values, bearing and heading angles, and LIDAR maps that mirror the data obtained from the LIDAR, which also helps in debugging the features and the values sent by the respective sensor modules.<br> |

| + | |||

| + | * '''OPTIMUS DASHBOARD'''<br> | ||

| + | [[ File: CmpE243_F17_Optimus_Optimus Dashboard.gif|700px|thumb|center||Optimus App: OPTIMUS DASHBOARD]]<br> | ||

| − | * | + | * '''DASHBOARD JSON SCHEMA'''<br> |

| − | [[ File: | + | [[ File: CmpE243_F17_Optimus_Dashboard_Json.JPG|700px|thumb|center||DASHBOARD DATA]]<br> |

| − | * Dashboard Information:<br> | + | * '''Dashboard Information:'''<br> |

* JSON_ID_GPS_LOCK_STAT : Signifies the current Status of GPS LOCK on the car<br> | * JSON_ID_GPS_LOCK_STAT : Signifies the current Status of GPS LOCK on the car<br> | ||

* JSON_ID_COMPASS_HEADING : Signifies current Heading Angle from COMPASS<br> | * JSON_ID_COMPASS_HEADING : Signifies current Heading Angle from COMPASS<br> | ||

| Line 899: | Line 1,068: | ||

* JSON_ID_DEST_REACHED : Signifies whether the car has reached Destination or not!<br> | * JSON_ID_DEST_REACHED : Signifies whether the car has reached Destination or not!<br> | ||

| − | * LIDAR Information:<br> | + | * '''LIDAR Information:'''<br> |

* JSON_ID_SENSOR_LIDAR_OBSTACLE_INFO_SEC0 : Signifies Track position of the Obstacles detected on multiple Sectors by LIDAR<br> | * JSON_ID_SENSOR_LIDAR_OBSTACLE_INFO_SEC0 : Signifies Track position of the Obstacles detected on multiple Sectors by LIDAR<br> | ||

| − | + | For example, Track 9, Sector 1 means that an obstacle is detected in Sector 1, 450 centimeters (4.50 meters) from the current position of the car, within the 20-45 degree range measured from the LIDAR/car front line of vision at that instant.<br> |

| + | {| | ||

| + | | | ||

| + | | | ||

| + | |[[ File: CmpE243_F17_Optimus_Lidar_angle.GIF|700px|thumb|LiDAR detection of Track 9 Sector 1 i.e. 4.50 mts.||Android: LIDAR PLOT]] | ||

| + | | | ||

| + | | | ||

| + | |[[ File: CmpE243_F17_Optimus_Lidar.GIF|700px|thumb|Optimus App: Lidar Obstacle Detection||Android: LIDAR PLOT]] | ||

| + | | | ||

| + | |} | ||

| − | + | == ''' Bluetooth Controller ''' == | |

| − | |||

| − | |||

| − | == Bluetooth Controller == | ||

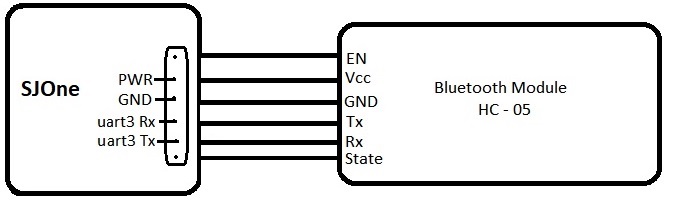

===Hardware Implementation === | ===Hardware Implementation === | ||

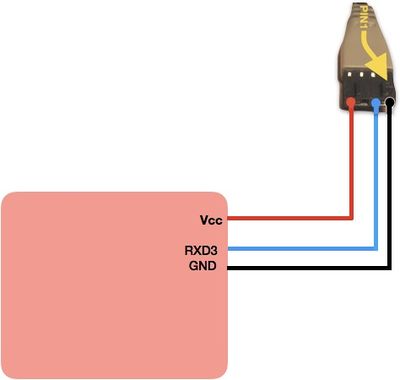

| − | ''' Bluetooth Module:'' | + | ''' Bluetooth Module Pin Configuration:''' |

| + | |||

We are using HC-05 Bluetooth module to send and receive the data from our android application. | We are using HC-05 Bluetooth module to send and receive the data from our android application. | ||

| − | [[ File: | + | |

| − | + | [[ File: CmpE243_F17_Optimus_HC-05.jpg|200px|thumb|left|| Bluetooth Module]] | |

| − | [[ File: | + | [[ File: CmpE243_F17_Optimus_bridge_HC-05_pin_conf.jpg|679px|thumb|centre||pin configuration]] |

| + | <br> | ||

| + | <br> | ||

The Bridge controller is connected to the bluetooth module through the uart serial interface (Uart3) with 9600 baud rate 8-bit data and 1 stop bit. | The Bridge controller is connected to the bluetooth module through the uart serial interface (Uart3) with 9600 baud rate 8-bit data and 1 stop bit. | ||

| Line 934: | Line 1,112: | ||

8. Forward the Android message to GEO controller if it received checkpoints otherwise forward it to Master. | 8. Forward the Android message to GEO controller if it received checkpoints otherwise forward it to Master. | ||

| − | + | [[ File: CMPE243_F17_Optimus_BLEControlFlow.png|700px|thumb|center|| Process Flowchart]] | |

| − | |||

| − | |||

| − | |||

| − | + | DBC format for messages sent from Bluetooth controller : | |

| − | + | BO_ 1 BLE_START_STOP_CMD: 1 BLE | |


| − | BO_ 1 BLE_START_STOP_CMD: 1 BLE | ||

SG_ BLE_START_STOP_CMD_start : 0|4@1+ (1,0) [0|1] "" MASTER | SG_ BLE_START_STOP_CMD_start : 0|4@1+ (1,0) [0|1] "" MASTER | ||

SG_ BLE_START_STOP_CMD_reset : 4|4@1+ (1,0) [0|1] "" MASTER | SG_ BLE_START_STOP_CMD_reset : 4|4@1+ (1,0) [0|1] "" MASTER | ||

| − | + | BO_ 38 BLE_HEARTBEAT: 1 BLE | |

| − | |||

| − | |||

| − | BO_ 38 BLE_HEARTBEAT: 1 BLE | ||

SG_ BLE_HEARTBEAT_signal : 0|8@1+ (1,0) [0|255] "" MASTER | SG_ BLE_HEARTBEAT_signal : 0|8@1+ (1,0) [0|255] "" MASTER | ||

| − | BO_ 212 BLE_GPS_DATA: 8 BLE | + | BO_ 212 BLE_GPS_DATA: 8 BLE |

SG_ BLE_GPS_long : 0|32@1- (0.000001,0) [0|0] "" GEO | SG_ BLE_GPS_long : 0|32@1- (0.000001,0) [0|0] "" GEO | ||

SG_ BLE_GPS_lat : 32|32@1- (0.000001,0) [0|0] "" GEO | SG_ BLE_GPS_lat : 32|32@1- (0.000001,0) [0|0] "" GEO | ||

| − | BO_ 213 BLE_GPS_DATA_CNT: 1 BLE | + | BO_ 213 BLE_GPS_DATA_CNT: 1 BLE |

SG_ BLE_GPS_COUNT : 0|8@1+ (1,0) [0|0] "" GEO,SENSOR | SG_ BLE_GPS_COUNT : 0|8@1+ (1,0) [0|0] "" GEO,SENSOR | ||
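As an illustration of how the `BLE_GPS_DATA` definition above maps onto the 8 payload bytes, here is a minimal Python sketch. It assumes only what the DBC lines state: two signed 32-bit little-endian signals (longitude at bit 0, latitude at bit 32), each scaled by 0.000001. The function names are hypothetical and are not taken from the project source.

```python
import struct

SCALE = 0.000001  # scale factor from the BLE_GPS_DATA signal definitions


def encode_ble_gps_data(lon_deg, lat_deg):
    """Pack longitude and latitude (degrees) into the 8-byte CAN payload."""
    raw_lon = round(lon_deg / SCALE)
    raw_lat = round(lat_deg / SCALE)
    # "<ii": little-endian, two signed 32-bit integers (longitude first).
    return struct.pack("<ii", raw_lon, raw_lat)


def decode_ble_gps_data(payload):
    """Unpack the 8-byte payload back into (longitude, latitude) in degrees."""
    raw_lon, raw_lat = struct.unpack("<ii", payload)
    return raw_lon * SCALE, raw_lat * SCALE
```

With a 0.000001 degree scale, one raw count corresponds to roughly 0.1 m of latitude, which is finer than typical GPS accuracy.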

| − | ''' | + | == '''Geographical Controller''' == |


| − | === | + | === Introduction === |

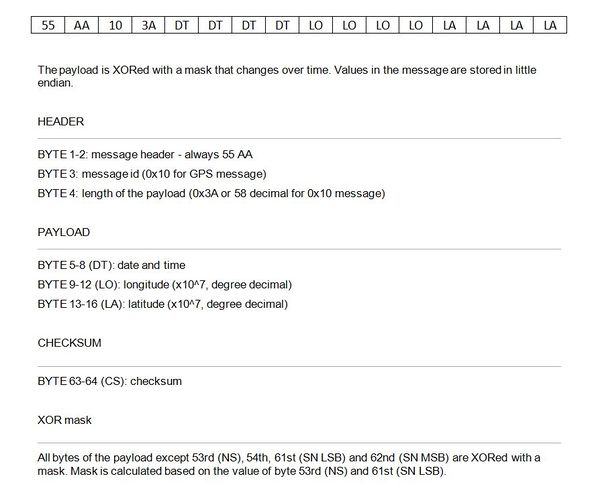

'''GPS and Compass Module:''' | '''GPS and Compass Module:''' | ||

| Line 1,028: | Line 1,144: | ||

'''Compass''': | '''Compass''': | ||

| − | [[ File: CMPE243_F17_Optimus_MagneticCompass.GIF| | + | [[ File: CMPE243_F17_Optimus_MagneticCompass.GIF|200px|thumb|right|| Compass]] |

A compass is an instrument used for navigation and orientation that shows direction relative to the geographic cardinal directions (or points). Usually, a diagram called a compass rose shows the directions north, south, east, and west on the compass face as abbreviated initials. When the compass is used, the rose can be aligned with the corresponding geographic directions; for example, the "N" mark on the rose really points northward. Compasses often display markings for angles in degrees in addition to (or sometimes instead of) the rose. North corresponds to 0°, and the angles increase clockwise, so east is 90°, south is 180°, and west is 270°. These numbers allow the compass to show azimuths or bearings, which are commonly stated in this notation. | A compass is an instrument used for navigation and orientation that shows direction relative to the geographic cardinal directions (or points). Usually, a diagram called a compass rose shows the directions north, south, east, and west on the compass face as abbreviated initials. When the compass is used, the rose can be aligned with the corresponding geographic directions; for example, the "N" mark on the rose really points northward. Compasses often display markings for angles in degrees in addition to (or sometimes instead of) the rose. North corresponds to 0°, and the angles increase clockwise, so east is 90°, south is 180°, and west is 270°. These numbers allow the compass to show azimuths or bearings, which are commonly stated in this notation. | ||

| − | |||

| − | |||

We are using DJI’s NAZA GPS/COMPASS to get the GPS coordinates and Heading angle. The diagram of the module is as follows: | We are using DJI’s NAZA GPS/COMPASS to get the GPS coordinates and Heading angle. The diagram of the module is as follows: | ||

| − | [[ File: CMPE243_F17_Optimus_Naza.JPG| | + | [[ File: CMPE243_F17_Optimus_Naza.JPG|200px|thumb|right|| GPS and Compass Module]] |

'''Message Structure:''' | '''Message Structure:''' | ||

| − | + | * GPS: |

The 0x10 message contains GPS data. The message structure is as follows: | The 0x10 message contains GPS data. The message structure is as follows: | ||

| − | [[ File: CMPE243_F17_Optimus_GPS_Message.JPG|600px|thumb|center|| GPS | + | [[ File: CMPE243_F17_Optimus_GPS_Message.JPG|600px|thumb|center|| GPS Data]] |

| − | + | * Compass: | |

The 0x20 message contains compass data. The structure of the message is as follows: | The 0x20 message contains compass data. The structure of the message is as follows: | ||

| − | [[ File: CMPE243_F17_Optimus_Compass_Message.JPG|600px|thumb|center|| | + | [[ File: CMPE243_F17_Optimus_Compass_Message.JPG|600px|thumb|center|| Compass Data]] |

| − | + | * Calibration: |

Why calibrate the compass? | Why calibrate the compass? | ||

| Line 1,067: | Line 1,181: | ||

• If electronic devices are added/removed/re-positioned. | • If electronic devices are added/removed/re-positioned. | ||

| − | ''' | + | '''Hardware Connection''' |

The Pin Configuration is as follows: | The Pin Configuration is as follows: | ||

| − | [[ File: CMPE243_F17_Pin.JPG| | + | [[ File: CMPE243_F17_Pin.JPG|400px|thumb|centre|| Block Diagram]] |

=== Software Design === | === Software Design === | ||

'''Algorithm:''' | '''Algorithm:''' | ||

| + | '''Distance calculation:''' | ||

| − | |||

| − | |||

We are using the ‘haversine’ formula to calculate the great-circle distance between two points – that is, the shortest distance over the earth’s surface | We are using the ‘haversine’ formula to calculate the great-circle distance between two points – that is, the shortest distance over the earth’s surface | ||
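The haversine distance mentioned above can be sketched in Python as follows. This is a minimal illustration, not the project's Geo controller code: the mean Earth radius of 6,371 km and the function name are assumptions.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in meters (assumed value)


def haversine_distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    # Haversine of the central angle between the two points.
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    c = 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a))
    return EARTH_RADIUS_M * c
```

Calling `haversine_distance_m` with the car's current coordinate and the next checkpoint gives the remaining distance, which can then be compared against a "checkpoint reached" threshold.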

| − | '''Bearing Angle calculation | + | '''Bearing Angle calculation''': |

| − | ''' | + | |

| + | The bearing of a point is the number of degrees in the angle measured in a clockwise direction from the north line to the line joining the centre of the compass with the point. A bearing is used to represent the direction of one point relative to another point. The bearing angle is calculated by using the following formula: | ||

| + | [[ File: CMPE243_F17_Angle.JPG|400px|thumb|right|| Angle Information]] | ||

| + | [[ File: CMPE243_F17_Optimus_DBC_Message.JPG|400px|thumb|right|| DBC Messages]] | ||

| + | [[ File: CMPE243_F17_Optimus_geoflowchart.png|700px|thumb|centre|| Flowchart]] | ||

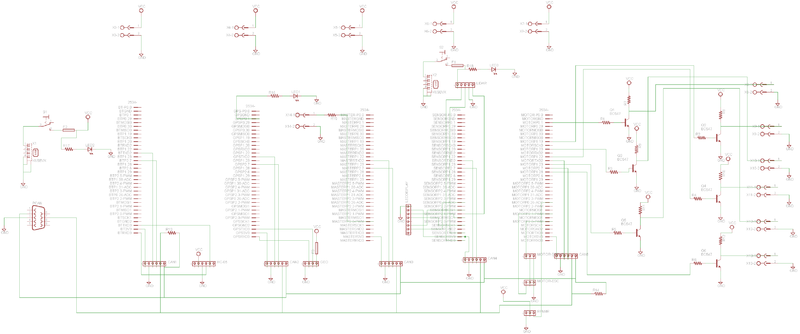

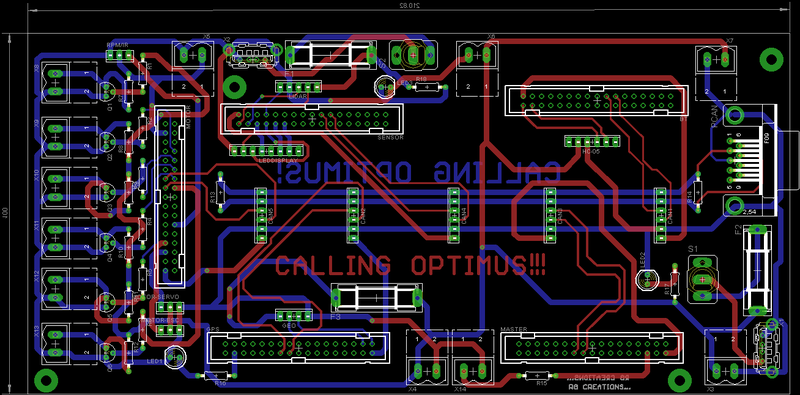
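The bearing calculation described above can be sketched in Python using the standard initial-bearing formula (the one given on the Movable Type page listed in the references). The turning-angle helper is an assumption about how the compass heading and the bearing might be combined: its sign convention (positive meaning turn clockwise) and both function names are hypothetical.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Bearing from point 1 to point 2, in degrees clockwise from north (0-360)."""
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    dlambda = math.radians(lon2 - lon1)
    y = math.sin(dlambda) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlambda))
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def turning_angle_deg(heading_deg, bearing_deg):
    """Signed angle in [-180, 180) the car must turn to face the bearing."""
    return (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
```

Wrapping the difference into [-180, 180) keeps the car from turning the long way around when the heading and bearing straddle north, for example a heading of 350° with a bearing of 10°.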

| + | == '''Package Design''' == | ||

| + | === PCB Design === | ||

| + | [[ File: CmpE243_F17_T1_HWDesign_Schematic.png|800px|thumb|center|| PCB Complete Schematic for All 5 Control Interfaces]] <br> | ||

| + | [[ File: CmpE243_F17_T1_HWDesign_Board.png|800px|thumb|center|| PCB Complete Board design for All 5 Control Interfaces]] | ||

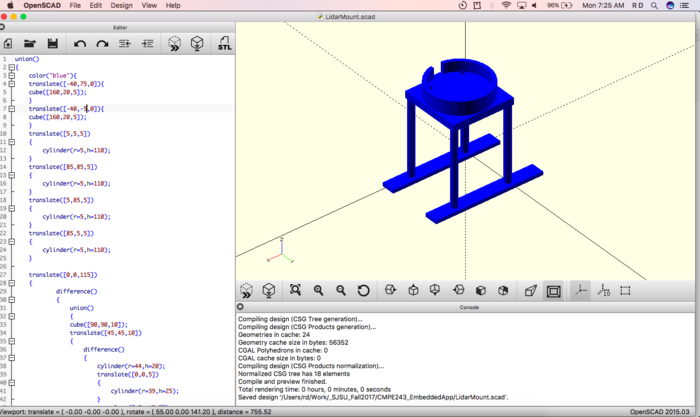

| − | The | + | === 3D Printed Sensor Mounts === |

| + | We designed 3D-printable mounts for the LiDAR sensor and the GPS module using OpenSCAD. | ||

| + | |||

| + | [[ File: CMPE243_F17_Optimus_3DMount.png|700px|thumb|center|| LiDAR Mount]] <br> | ||

| + | [[ File: CMPE243_F17_Optimus_3DGPS.png|700px|thumb|center|| GPS Mount]] | ||

| + | |||

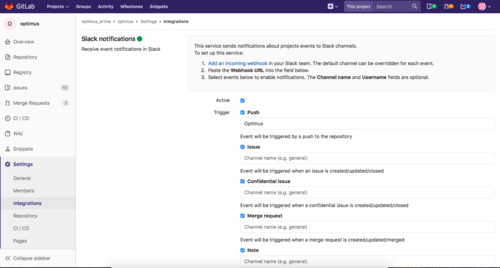

| + | == '''Git Project Management''' == | ||

| + | |||

| + | The GitLab project is managed by working on a separate branch for each controller and restricting which users can merge branches into the master branch. | ||

| + | To get notified of all Git activity, we created a webhook that posts Git notifications to the CMPE243 Slack channel. | ||

| + | GitLab features such as the issue list and milestone tracking are used for easy management. | ||

| + | |||

| + | [[ File: CMPE243_F17_Optimus_Webhook.png|500px|thumb|center|| GitLab WebHooks]] | ||

| + | |||

| + | The project git repository is below. | ||

| + | |||

| + | https://gitlab.com/optimus_prime/optimus/tree/master | ||

| + | |||

| + | == '''Technical Challenges''' == | ||

| + | |||

| + | === Motor Technical Challenges === | ||

| + | 1) ESC Calibration <br> | ||

| + | We accidentally corrupted the calibration on the ESC.<br> | ||

| + | The XL-5 has a long-press option to calibrate the ESC, during which the ESC:<br> | ||

| + | a) After the long press, glows green and starts accepting the PWM signal for neutral (1.5 ms).<br> | ||

| + | b) Glows green once more, at which point we feed in the PWM signal for forward (2 ms).<br> | ||

| + | c) Glows green twice more, at which point we feed in the PWM signal for reverse (1 ms).<br> | ||

| + | We wrote code that runs this calibration sequence from a switch on P0.1, using an external interrupt (EINT3).<br> | ||

| + | <br> | ||

| + | |||

| + | 2) ESC Reverse<br> | ||

| + | The ESC did not engage reverse when we applied the reverse pulse directly. There is no formal datasheet; only the XL-5 forums mention a 1 ms pulse width at 50 Hz for reverse.<br> | ||

| + | We figured out that reverse actually requires a four-step sequence:<br> | ||

| + | a) goNeutral()<br> | ||

| + | b) goReverse()<br> | ||

| + | c) goNeutral()<br> | ||

| + | d) goReverse()<br> | ||
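The pulse widths quoted above (1.5 ms neutral, 2 ms forward, 1 ms reverse at 50 Hz) translate into PWM duty cycles as in the following Python sketch. It only illustrates the arithmetic and the order of the reverse sequence; the names are hypothetical, and the delays needed between steps are not stated in the text, so none are shown.

```python
PWM_FREQUENCY_HZ = 50
PERIOD_MS = 1000.0 / PWM_FREQUENCY_HZ  # 20 ms period at 50 Hz

# Pulse widths taken from the calibration steps described above.
NEUTRAL_MS = 1.5
FORWARD_MS = 2.0
REVERSE_MS = 1.0


def duty_cycle_percent(pulse_ms):
    """Convert a pulse width in milliseconds to a PWM duty cycle in percent."""
    return 100.0 * pulse_ms / PERIOD_MS


def reverse_sequence_duty_cycles():
    """Duty cycles for the neutral, reverse, neutral, reverse sequence."""
    steps = [NEUTRAL_MS, REVERSE_MS, NEUTRAL_MS, REVERSE_MS]
    return [duty_cycle_percent(step) for step in steps]
```

On a PWM peripheral that takes a duty cycle in percent, neutral is therefore 7.5%, forward 10% and reverse 5%.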

| + | |||

| + | <br> | ||

| + | |||

| + | 3) RPM Sensor Installation:<br> | ||

| + | After we followed the steps to install the RPM sensor (see the steps above), the sensor did not detect the rotation of the wheel (the magnet). <br> | ||

| + | The cause was the machined-steel pinion gear and slipper clutch. The ones that came with the RC car were large, which increased the distance between the magnet and the RPM sensor, so we could not detect the wheel RPM.<br> | ||

| + | We even checked the signal activity with a digital oscilloscope. <br> | ||

| + | We then switched to a smaller machined-steel pinion gear and slipper clutch and reinstalled the RPM sensor, and it worked. <br><br> | ||

| + | |||

| + | === Android Issues Undergone === | ||

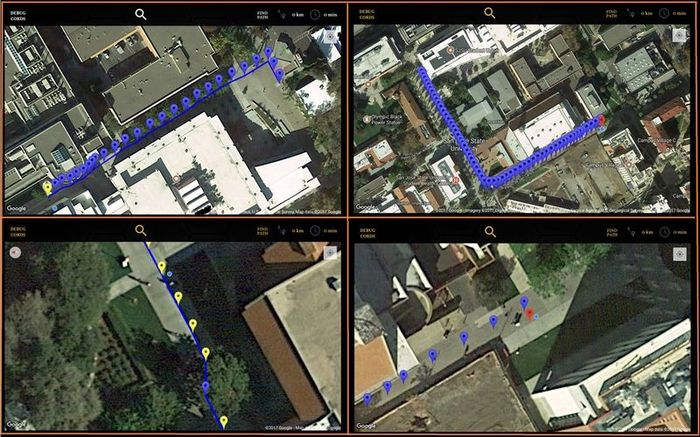

| + | * '''MAPS: Plotting Routes and Offline Check Points Calculation'''<br> | ||

| + | |||

| + | With our initial implementation using the Google Android API we were able to plot routes on the map, but during testing of the route navigation we soon faced the following issues:<br> | ||

| + | |||

| + | 1. For straight-line routes, the intermediate checkpoints were often not received, because the Google API generates checkpoints only at intersections where the route bends.<br> | ||

| + | 2. Because of this, straight routes were hard to navigate, and interpolation was required to make sure the GEO controller has enough checkpoints to correct the heading angle before the car strays too far from its intended straight path.<br> | ||

| + | 3. Google routes are calculated from any point on the ground to the nearest offset point on the pre-drawn custom Google polyline path. As a result, routes from certain locations followed sharp edges rather than smooth curves, which also made the routes slightly longer, and our car ended up on sidewalks or in bushes while correcting its course to follow the main route.<br> | ||

| + | |||

| + | [[ File: CmpE243_F17_Optimus_Routes.jpg|700px|thumb|center||Optimus App: Navigation and Route Selection]]<br> | ||

| + | * '''Application Compatibility'''<br> | ||

| + | |||

| + | During implementation, one of the issues we faced was the security features of Android applications and the permissions needed to use geolocation and app storage.<br> After every fresh installation, the permissions had to be revisited and enabled for the app, something that can still be improved. | ||

| + | |||

| + | ==== Testing and Procedures to Overcome Challenges ==== | ||

| + | |||

| + | '''MAP DEBUGGING & ROUTE CALCULATION''' <br> | ||

| + | |||

| + | For overcoming the problem of placing routes and calculating the shortest path we decided to interpolate routes in the university premises.<br> | ||

| + | Steps involved:<br> | ||

| + | |||

| + | * Draw polylines routes over saved checkpoint coordinates by reading and parsing a json file at the app level to get the next checkpoint coordinates.<br> | ||

| + | * Use Dijkstra's Algorithm to calculate shortest path between those routes.<br> | ||

| + | * For longer routes two approaches could be taken to calculate the intermediate checkpoints:<br> | ||

| + | ** a. Straightline Approach<br> | ||

| + | ** b. Geodesy Engineering Approach.<br> | ||

| + | |||

| + | [[ File: CmpE243_F17_Optimus_Nearest_Route_Algorithm.gif|700px|thumb|center||Source:wikipedia.org::Dijkstra's algorithm]]<br> | ||
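The shortest-path step can be sketched with a textbook Dijkstra implementation in Python. This is not the app's code: the graph layout (a dictionary mapping each checkpoint name to its neighbours and edge lengths in meters) and the function name are assumptions made for illustration.

```python
import heapq


def shortest_path(graph, start, goal):
    """Return (path, total_cost) from start to goal, or (None, inf) if unreachable.

    graph maps each node to a dict of {neighbour: edge_cost}.
    """
    best = {start: 0.0}      # best known cost to each node
    previous = {}            # back-pointers used to rebuild the path
    visited = set()
    heap = [(0.0, start)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == goal:
            break
        for neighbour, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(heap, (new_cost, neighbour))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return path, best[goal]
```

In the app's setting, the nodes would be the saved checkpoints read from the JSON file and the edge weights the distances between connected checkpoints.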

| + | |||

| + | The geodesy approach is more complex; it can be implemented using the 'Haversine' technique to calculate intermediate points between two points along the surface of the earth. Since the distances for the demo were not long enough to be significantly affected by the curvature, we decided to go with the first (straight-line) approach.<br> | ||

| + | |||

| + | We used the '''Vincenty''' formula to compute the interpolated points between two checkpoints: when the distance between the two exceeds ~(10±5) meters, the algorithm interpolates the route to give intermediate checkpoints, which are marked on the map with BLUE markers.<br> | ||

| + | |||
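A simplified way to picture the interpolation step is the straight-line sketch below. It is not the Vincenty computation mentioned above: it linearly interpolates latitude and longitude, which is a reasonable approximation only over distances of a few tens of meters. The 10 m default gap follows the threshold quoted in the text; the function name and parameters are hypothetical.

```python
import math


def interpolate_checkpoints(start, end, distance_m, max_gap_m=10.0):
    """Return intermediate (lat, lon) points so that no gap exceeds max_gap_m.

    start and end are (lat, lon) tuples in degrees; distance_m is the
    distance between them, computed separately.
    """
    if distance_m <= max_gap_m:
        return []
    segments = math.ceil(distance_m / max_gap_m)
    lat1, lon1 = start
    lat2, lon2 = end
    points = []
    for i in range(1, segments):
        fraction = i / segments
        points.append((lat1 + (lat2 - lat1) * fraction,
                       lon1 + (lon2 - lon1) * fraction))
    return points
```

For example, a 33 m stretch with a 10 m gap is split into four segments, which yields three intermediate checkpoints.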

| + | For easier viewing, we added the hybrid map type to the app so that the user gets a 3D feel of the route.<br> | ||

| + | |||

| + | ''' MARKERS '''<br> | ||

| + | * We also added colored Markers for denoting following:<br> | ||

| + | |||

| + | >>> '''START/STOP''' : Custom Markers<br> | ||

| + | >>> '''CAR LOCATION''' : Yellow Markers<br> | ||

| + | >>> '''INTERMEDIATE CHECKPOINTS''' : HUE_BLUE Markers<br> | ||

| + | |||

| + | [[ File: CmpE243_F17_Optimus_Map Markers.png|700px|thumb|center||Optimus App: Map Markers]]<br> | ||

| + | |||

| + | === Sensor Controller === | ||

| + | |||

| + | 1. LIDAR is not able to detect black colored objects sometimes as the light from the LASER is completely absorbed by black and nothing is reflected back. | ||

| + | |||

| + | [https://www.youtube.com/watch?v=xQFsSSVI3TE&feature=youtu.be LIDAR doesn't detect black objects] | ||

| + | |||

| + | 2. The LIDAR detects objects only in the plane in which it is mounted. An object shorter than the LIDAR's mounting height will therefore not be detected. | ||

| − | + | [https://www.youtube.com/watch?v=kNBofrklUgs&feature=youtu.be LIDAR doesn't detect objects lower than it's height] | |

| − | + | 3. A very high ramp also enters the scanning plane of the LIDAR and is treated as an obstacle. |

| − | + | 4. Exposing the LIDAR to direct sunlight introduces noise into the obstacle detection. |

| − | === | + | === Geo Technical Challenges === |

The first and the major issue we faced with the GEO module was selecting the proper hardware for GPS and Compass. We tried with Sparkfun, Adafruit and Ublox GPS modules. We observed a lot of time taken by the GPS to get a lock and also the error was high. Then we switched to DJI Naza GPS and we found that it was pretty accurate and the lock up time was hardly a minute. The software issue which we faced with Naza GPS was that it did not have a proper software documentation. We tried to understand the message packets and went through the forums to understand the message layout. After this we were able to integrate the module successfully. | The first and the major issue we faced with the GEO module was selecting the proper hardware for GPS and Compass. We tried with Sparkfun, Adafruit and Ublox GPS modules. We observed a lot of time taken by the GPS to get a lock and also the error was high. Then we switched to DJI Naza GPS and we found that it was pretty accurate and the lock up time was hardly a minute. The software issue which we faced with Naza GPS was that it did not have a proper software documentation. We tried to understand the message packets and went through the forums to understand the message layout. After this we were able to integrate the module successfully. | ||

| Line 1,107: | Line 1,324: | ||

[[ File: CMPE243_F17_Optimus_App.JPG|300px|thumb|center|| GPS Route]] | [[ File: CMPE243_F17_Optimus_App.JPG|300px|thumb|center|| GPS Route]] | ||

| − | === | + | == '''Project Videos''' == |

| − | + | ||

| − | + | https://youtu.be/lzW2ASbNfYo | |

| + | |||

| + | == '''Conclusion''' == | ||

| + | As a team we were able to achieve the set of goals and requirements within the required time frame. Over the course of this project, we learnt cutting edge industry standards and techniques such as: | ||

| + | *Team Work: Working in a team with so many people gave us a real sense of what happens in the industry when a large number of people work together. | ||

| + | *GIT: Our source code versioning, code review sessions and test management were done using Git. | ||

| + | *CAN: A simple and robust broadcast bus which works with a pair of differential signals. We were able to use the CAN bus to interconnect five LPC1758 micro controllers powered by FreeRTOS. | ||

| + | |||

| + | *Accountability: Dealing with both software and hardware is not an easy task and nothing can be taken for granted, especially the hardware. | ||

| + | *Hardware issues: | ||

| + | ** Power Issues: Initially we were using a single port of the power bank to power everything (all the boards), including the LIDAR. This caused the LIDAR to stop working due to insufficient current. It took us a while to figure this out. | ||

| + | ** GPS: Calibrating the GPS and getting accurate data from the GPS was a challenging task. | ||

| + | **Android Application: Using Google Maps to obtain checkpoints did not work out, as Google Maps was giving a single checkpoint. So we created a database of checkpoints for navigating the car across the SJSU campus. | ||

| + | **Debugging: Connecting the PCAN dongle to the car and moving around with it is a difficult way to debug. Hence we created a dashboard on the android application to view all the useful information on the tab without any hassles. | ||

| + | |||

| + | To the teams that are designing their car: | ||

| + | * If using a LIDAR for obstacle avoidance make sure to test it in all lighting conditions. | ||

| + | * It is better to have PCB instead of soldering everything on a wire-wrapping board. | ||

| + | * Start with the implementation for the Geo module early. | ||

| − | == | + | == '''Project Source Code''' == |

| + | The source code is available at the GitLab link below. | ||

| − | + | https://gitlab.com/optimus_prime/optimus | |

| − | == | + | == '''References''' == |

| + | *[https://traxxas.com/support/Programming-Your-Traxxas-Electronic-Speed-Control ESC Calibration] | ||

| + | *[http://www.instructables.com/id/ESC-Programming-on-Arduino-Hobbyking-ESC/ ESC PWM information] | ||

| + | *[https://forums.traxxas.com/showthread.php?8923102-PWM-in-XL-5 ESC XL-5 PWM information] | ||

| + | *[https://www.movable-type.co.uk/scripts/latlong.html GEO Bearing information] | ||

| + | *[http://bucket.download.slamtec.com/351a5409ddfba077ad11ec5071e97ba5bf2c5d0a/LR002_SLAMTEC_rplidar_sdk_v1.0_en.pdf LIDAR SDK which helped us in coding for the LIDAR] | ||

| + | *[http://bucket.download.slamtec.com/004eb70efdaba0d30a559d7efc60b4bc6bc257fc/LD204_SLAMTEC_rplidar_datasheet_A2M4_v1.0_en.pdf LIDAR Data Sheet] | ||

| + | *[http://bucket.download.slamtec.com/351a5409ddfba077ad11ec5071e97ba5bf2c5d0a/LR002_SLAMTEC_rplidar_sdk_v1.0_en.pdf LIDAR User Manual] | ||

| − | == | + | == '''Acknowledgement''' == |

| + | We are thankful for the guidance and support by | ||

| − | + | Professor | |

| − | + | * Preetpal Kang | |

| − | + | ISA | |

| − | + | * Prashant Aithal | |

| + | |||

| + | * Saurabh Ravindra Deshmukh | ||

| + | |||

| + | * Purvil Kamdar | ||

| − | + | * Shruthi Narayan | |

| − | + | * Parth Pachchigar | |

| − | + | * Abhishek Singh | |

| − | + | For 3D printing | |

| − | + | * Our sincere thanks to Marvin Flores <marvin.flores@sjsu.edu> for printing our 3D print models. | |

| − | + | For Sponsoring R/C car | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * Professor Kaikai Liu |

Latest revision as of 05:23, 23 December 2017

Optimus - An Android-app-controlled self-navigating car powered by the SJOne (LPC1758) microcontroller. Optimus maneuvers through the selected routes using LIDAR and GPS sensors. This wiki page is the detailed report on how Optimus was built by Team Optimus.

Contents

- 1 Abstract

- 2 Objectives & Introduction

- 3 Team Members & Responsibilities

- 4 Project Schedule

- 5 Parts List & Cost

- 6 CAN Communication

- 7 Hardware & Software Architecture

- 8 Master Controller

- 9 Motor Controller

- 10 Sensor Controller

- 10.1 Introduction

- 10.2 Hardware Implementation

- 10.3 Software Implementation

- 10.3.1 Approach for obtaining the data from the LIDAR

- 10.3.2 Algorithm for interfacing LIDAR to SJOne board and obtaining the obstacle info

- 10.3.3 Flowchart for Communicating with the LIDAR and receiving obstacle information

- 10.3.4 Testing the data obtained from the LIDAR

- 10.3.5 CAN DBC messages sent from the Sensor Controller

- 11 Android Application

- 12 Bluetooth Controller

- 13 Geographical Controller

- 14 Package Design

- 15 Git Project Management

- 16 Technical Challenges

- 17 Project Videos

- 18 Conclusion

- 19 Project Source Code

- 20 References

- 21 Acknowledgement

Abstract

Embedded systems are omnipresent, and one of their unique yet powerful applications is the self-driving car. In this project we build a self-navigating car named Optimus that navigates from a source location to a selected destination while avoiding obstacles in its path.

The key features the system supports are

1. Android Application with Customized map and Dashboard Information.

2. LIDAR powered obstacle avoidance.

3. Route Calculation and Maneuvering to the selected destination

4. Self- Adjusting the speed of the car on Ramp.

The system is built on FreeRTOS running on the LPC1758 SJOne controller, together with an Android application. The building blocks of Optimus are five controllers, each designed to handle a dedicated task, communicating over a High Speed CAN network. The controllers integrate various sensors that are used for navigating the car.

1. Master Controller - handles the Route Maneuvering and Obstacle Avoidance

2. Sensor Controller - detects the surrounding objects

3. Geo Controller - provides current location

4. Drive Controller - controls the ESC

5. Bridge controller - Interfaces the system to Android app

Objectives & Introduction

Our objective is to build and integrate the functionality of these five controllers to develop a fully functioning self-driving system.

Sensor Controller: Sensor controller uses RPLIDAR to scan its 360-degree environment within 6-meter range. It sends the scanned obstacle data to master controller and bridge controller.

Geo Controller: Geo controller uses NAZA GPS module that provides car current GPS location and compass angle. It calculates heading and bearing angle that helps the car to turn with respect to destination direction.

Drive Controller: Drive controller drives the motor based on the commands it receives from the Master.

Bridge Controller: Bridge controller works as a gateway between the Android application and the self-driving car and passes information in both directions between them.

Master Controller: Master controller coordinates all other controllers and makes the driving decisions.

Android Application: Android application communicates with the car through Bridge controller. It sends the destination location to be reached to the Geo controller and also provides all the Debugging information of the Car like

1. Obstacles information around the car

2. Car's turning angle

3. Compass value

4. Bearing angle

5. Car's GPS location

6. Destination reached status

7. Total checkpoints in the route

8. Current checkpoint indication

Team Members & Responsibilities

- Master Controller:

- Revathy

- Motor Controller:

- Android and Communication Bridge:

- Geographical Controller:

- Integration Testing:

- Revathy

- Kripanand Jha

- Unnikrishnan

- PCB Design:

Project Schedule

Legend:

Major Feature milestone , CAN Master Controller , Sensor & IO Controller , Android Controller, Motor Controller , Geo , Testing, Ble controller, Team Goal

| Week# | Date | Planned Task | Actual | Status |

|---|---|---|---|---|

| 1 | 9/23/2017 | | | Complete |

| 2 | 9/30/2017 | | | Complete |

| 3 | 10/14/2017 | | | Complete |

| 4 | 10/21/2017 | | | Complete |

| 5 | 10/28/2017 | | | Complete |

| 6 | 11/07/2017 | | | Complete |

| 7 | 11/14/2017 | | | Complete |

| 8 | 11/21/2017 | | | Complete |

| 9 | 11/28/2017 | | | Complete |

| 10 | 12/5/2017 | | | Complete |

| 11 | 12/12/2017 | | | Complete |

Parts List & Cost

The Project bill of materials is as listed in the table below.

| SNo. | Component | Units | Total Cost |

|---|---|---|---|

| General System Components | |||

| 1 | SJ One Board (LPC 1758) | 5 | $400 |

| 2 | Traxxas RC Car | 1 | From Prof. Kaikai Liu |

| 3 | CAN Transceivers | 15 | $55 |

| 4 | PCAN dongle | 1 | From Preet |

| 5 | PCB Manufacturing | 5 | $70 |

| 6 | 3D printing | 2 | From Marvin |

| 6 | General Hardware components( Connectors,standoffs,Soldering Kits) | 1 | $40 |

| 7 | Power Bank | 1 | $41.50 |

| 8 | LED Digital Display | 1 | From Preet |

| 9 | Acrylic Board | 1 | $12.53 |

| Sensor/IO Controller Components | |||

| 10 | RP Lidar | 1 | $449 |

| Geo Controller Components | |||

| 11 | GPS Module | 1 | $69 |

| Bluetooth Bridge Controller Component | |||

| 12 | Bluetooth Module | 1 | $34.95 |

| Drive Controller Component | |||

| 13 | RPM Sensor | 1 | $20 |

CAN Communication

The controllers are connected in a CAN bus at 100K baudrate. System Nodes: MASTER, MOTOR, BLE, SENSOR, GEO

| SNo. | Message ID | Message from Source Node | Receivers |

|---|---|---|---|

| Master Controller Message | |||

| 1 | 2 | System Stop command to stop motor | Motor |

| 2 | 17 | Target Speed-Steer Signal to Motor | Motor |

| 3 | 194 | Telemetry Message to Display it on Android | BLE |

| Sensor Controller Message | |||

| 4 | 3 | Lidar Detections of obstacles in 360 degree grouped as sectors | Master,BLE |

| 5 | 36 | Heartbeat | Master |

| Geo Controller Message | |||

| 6 | 195 | Compass, Destination Reached flag, Checkpoint id signals | Master,BLE |

| 7 | 196 | GPS Lock | Master,BLE |

| 8 | 4 | Turning Angle | Master,BLE |

| 9 | 214 | Current Coordinate | Master,BLE |

| 10 | 37 | Heartbeat | Master |

| Bluetooth Bridge Controller Message | |||

| 11 | 1 | System start/stop command | Master |

| 12 | 38 | Heartbeat | Master |

| 13 | 213 | Checkpoint Count from AndroidApp | Geo |

| 14 | 212 | Checkpoints (Lat, Long) from Android App | Geo |

| Drive Controller Message | |||

| 15 | 193 | Telemetry Message | BLE |

| 16 | 35 | Heartbeat | Master |

DBC File

The CAN message id's transmitted and received from all the controllers are designed based on the priority of the CAN messages. The priority is as follows

Priority Level 1 - User Commands

Priority Level 2 - Sensor data

Priority Level 3 - Status Signals

Priority Level 4 - Heartbeat

Priority Level 5 - Telemetry signals to display in I/O
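On a CAN bus the frame with the numerically lowest identifier wins arbitration, which is why the higher-priority levels above are given the smaller message IDs. The Python sketch below illustrates that ordering with a few of the IDs from the message table; the function name is hypothetical, and the code models only the ordering, not the bit-level arbitration.

```python
# Message IDs copied from the CAN message table above.
MESSAGE_IDS = {
    "BLE_START_STOP_CMD": 1,          # user command
    "MASTER_SYS_STOP_CMD": 2,
    "SENSOR_LIDAR_OBSTACLE_INFO": 3,  # sensor data
    "GEO_TURNING_ANGLE": 4,
    "BLE_HEARTBEAT": 38,              # heartbeat
    "MASTER_TELEMETRY": 194,          # telemetry
}


def arbitration_order(pending):
    """Order in which simultaneously pending messages would win the bus."""
    # The lowest identifier has the highest priority on CAN.
    return sorted(pending, key=lambda name: MESSAGE_IDS[name])
```

If a telemetry frame, a heartbeat and a start/stop command are all waiting at the same time, the start/stop command is transmitted first.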

BU_: DBG DRIVER IO MOTOR SENSOR MASTER GEO BLE

BO_ 1 BLE_START_STOP_CMD: 1 BLE
 SG_ BLE_START_STOP_CMD_start : 0|4@1+ (1,0) [0|1] "" MASTER
 SG_ BLE_START_STOP_CMD_reset : 4|4@1+ (1,0) [0|1] "" MASTER

BO_ 2 MASTER_SYS_STOP_CMD: 1 MASTER
 SG_ MASTER_SYS_STOP_CMD_stop : 0|8@1+ (1,0) [0|1] "" MOTOR

BO_ 212 BLE_GPS_DATA: 8 BLE
 SG_ BLE_GPS_long : 0|32@1- (0.000001,0) [0|0] "" GEO
 SG_ BLE_GPS_lat : 32|32@1- (0.000001,0) [0|0] "" GEO

BO_ 213 BLE_GPS_DATA_CNT: 1 BLE
 SG_ BLE_GPS_COUNT : 0|8@1+ (1,0) [0|0] "" GEO,SENSOR

BO_ 214 GEO_CURRENT_COORD: 8 GEO
 SG_ GEO_CURRENT_COORD_LONG : 0|32@1- (0.000001,0) [0|0] "" MASTER,BLE
 SG_ GEO_CURRENT_COORD_LAT : 32|32@1- (0.000001,0) [0|0] "" MASTER,BLE

BO_ 195 GEO_TELECOMPASS: 6 GEO
 SG_ GEO_TELECOMPASS_compass : 0|12@1+ (0.1,0) [0|360.0] "" MASTER,BLE
 SG_ GEO_TELECOMPASS_bearing_angle : 12|12@1+ (0.1,0) [0|360.0] "" MASTER,BLE
 SG_ GEO_TELECOMPASS_distance : 24|12@1+ (0.1,0) [0|0] "" MASTER,BLE
 SG_ GEO_TELECOMPASS_destination_reached : 36|1@1+ (1,0) [0|1] "" MASTER,BLE
 SG_ GEO_TELECOMPASS_checkpoint_id : 37|8@1+ (1,0) [0|0] "" MASTER,BLE

BO_ 194 MASTER_TELEMETRY: 3 MASTER
 SG_ MASTER_TELEMETRY_gps_mia : 0|1@1+ (1,0) [0|1] "" BLE
 SG_ MASTER_TELEMETRY_sensor_mia : 1|1@1+ (1,0) [0|1] "" BLE
 SG_ MASTER_TELEMETRY_sensor_heartbeat : 2|1@1+ (1,0) [0|1] "" BLE
 SG_ MASTER_TELEMETRY_ble_heartbeat : 3|1@1+ (1,0) [0|1] "" BLE
 SG_ MASTER_TELEMETRY_motor_heartbeat : 4|1@1+ (1,0) [0|1] "" BLE
 SG_ MASTER_TELEMETRY_geo_heartbeat : 5|1@1+ (1,0) [0|1] "" BLE
 SG_ MASTER_TELEMETRY_sys_status : 6|2@1+ (1,0) [0|3] "" BLE
 SG_ MASTER_TELEMETRY_gps_tele_mia : 8|1@1+ (1,0) [0|1] "" BLE

BO_ 196 GEO_TELEMETRY_LOCK: 1 GEO
 SG_ GEO_TELEMETRY_lock : 0|8@1+ (1,0) [0|0] "" MASTER,SENSOR,BLE

BO_ 3 SENSOR_LIDAR_OBSTACLE_INFO: 6 SENSOR
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR0 : 0|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR1 : 4|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR2 : 8|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR3 : 12|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR4 : 16|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR5 : 20|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR6 : 24|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR7 : 28|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR8 : 32|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR9 : 36|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR10 : 40|4@1+ (1,0) [0|12] "" MASTER,BLE
 SG_ SENSOR_LIDAR_OBSTACLE_INFO_SECTOR11 : 44|4@1+ (1,0) [0|12] "" MASTER,BLE

BO_ 4 GEO_TURNING_ANGLE: 2 GEO
 SG_ GEO_TURNING_ANGLE_degree : 0|9@1- (1,0) [-180|180] "" MASTER,BLE

The CAN DBC is available at the Gitlab link below

https://gitlab.com/optimus_prime/optimus/blob/master/_can_dbc/243.dbc
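To make the signal layout in the DBC above concrete, here is a minimal sketch of packing and unpacking two of the messages by hand. It assumes the standard DBC meaning of `@1` (little-endian, start bit is the least significant bit) and is not the project's generated code; the function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Pack SENSOR_LIDAR_OBSTACLE_INFO: 12 unsigned 4-bit signals,
// SECTORn starting at bit 4*n of the 6-byte payload.
inline std::array<uint8_t, 6> encode_lidar_sectors(const std::array<uint8_t, 12>& sectors) {
    std::array<uint8_t, 6> data{};
    for (std::size_t n = 0; n < sectors.size(); ++n) {
        assert(sectors[n] <= 0x0F);  // each signal is only 4 bits wide
        const unsigned shift = (n % 2 == 0) ? 0u : 4u;
        data[n / 2] = static_cast<uint8_t>(data[n / 2] | (sectors[n] << shift));
    }
    return data;
}

// Unpack the same message: even sectors sit in the low nibble, odd in the high nibble.
inline std::array<uint8_t, 12> decode_lidar_sectors(const std::array<uint8_t, 6>& data) {
    std::array<uint8_t, 12> sectors{};
    for (std::size_t n = 0; n < sectors.size(); ++n) {
        const uint8_t byte = data[n / 2];
        sectors[n] = static_cast<uint8_t>((n % 2 == 0) ? (byte & 0x0F) : (byte >> 4));
    }
    return sectors;
}

// Decode GEO_TURNING_ANGLE_degree: 9-bit signed value, start bit 0, scale 1.
inline int decode_turning_angle(const std::array<uint8_t, 2>& data) {
    const int raw = data[0] | ((data[1] & 0x01) << 8);
    // Sign-extend from 9 bits: bit 8 set means the value is negative.
    return (raw & 0x100) ? raw - 512 : raw;
}
```

In practice a DBC code generator produces equivalent encode and decode functions; the hand-written version is only meant to show where each bit lands.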

CAN Bus Debugging

We connected a PCAN dongle to the host PC and monitored the CAN bus traffic with the BusMaster tool. The screenshot of the BusMaster log is shown below.

Hardware & Software Architecture

Master Controller

Software Architecture Design

The Master Controller integrates the functionality of all the other controllers and acts as the central control unit of the self-navigating car. The two major functions handled by the Master Controller are obstacle avoidance and route maneuvering.

The overview of the Master Controller software architecture is shown in the figure below.

By analogy with human driving, the Master receives inputs from the sensors to determine the surroundings of the car, and takes decisions based on the environment and the current location of the car. The inputs received and the outputs sent by the Master are listed below:

Input to Master:

1. Lidar Object Detection information - To determine if there is an obstacle in the path of navigation

2. GPS and Compass Reading - To understand the Heading and Bearing angle to decide the direction of movement

3. User command from Android - To stop or Navigate to the Destination

Output from Master:

1. Motor control information - sends the target Speed and Steering direction to the Motor.

Software Implementation

The Master controller runs two major algorithms, Route Maneuvering and Obstacle Avoidance. System start and stop are handled by the Master based on specific commands. When the user selects a destination, the route is calculated and the checkpoints of the route are sent from the Android app through the Bridge controller to the Geo controller. Once the Geo controller has received the complete set of checkpoints, the Master controller starts the system based on the "Checkpoint ID": if the ID is non-zero, the route has started and the Master controller runs the Route Maneuvering algorithm.
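The start condition described above can be sketched as a small decision function. This is only an illustration under stated assumptions: the `SystemState` values, the `MasterInputs` struct and the order of the checks are hypothetical, and the fields simply mirror the DBC signals named in the comments.

```cpp
#include <cstdint>

// Hypothetical states, named for this illustration only.
enum class SystemState { Stopped, WaitingForRoute, Navigating, DestinationReached };

// Hypothetical snapshot of the CAN inputs the Master looks at.
struct MasterInputs {
    bool start_cmd;            // BLE_START_STOP_CMD_start
    uint8_t checkpoint_id;     // GEO_TELECOMPASS_checkpoint_id
    bool destination_reached;  // GEO_TELECOMPASS_destination_reached
};

inline SystemState next_state(const MasterInputs& in) {
    if (!in.start_cmd) return SystemState::Stopped;
    if (in.destination_reached) return SystemState::DestinationReached;
    // A zero checkpoint ID means no route has been started yet.
    if (in.checkpoint_id == 0) return SystemState::WaitingForRoute;
    // Non-zero checkpoint ID: the route has started, run Route Maneuvering.
    return SystemState::Navigating;
}
```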

The overall control flow of the Master controller is shown in the figure below.

Unit Testing

The Obstacle Avoidance algorithm is unit tested using the Cgreen unit testing framework. The complete unit test code is available in the Git project.

    Ensure(test_obstacle_avoidance)
    {
        // Obstacle Avoidance Algorithm
        pmaster->set_target_steer(MC::steer_right);
        mock_obstacle_detections(MC::steer_right, MC::steer_right, false, false, false, false, false, false, true);
        assert_that(pmaster->RunObstacleAvoidanceAlgo(obs_status), is_equal_to(expected_steer));
        assert_that(pmaster->get_forward(), is_equal_to(true));
        assert_that(pmaster->get_target_speed(), is_equal_to(MC::speed_slow));
    }

    Ensure(test_obstacle_detection)
    {
        // Obstacle Detection Algorithm
        mock_CAN_Rx_Lidar_Info(2, 2, 6, 0, 2, 2, 4, 0, 2, 0, 5, 0);
        set_expected_detection(true, false, true, false, true, false, false);
        actual_detections = psensor->RunObstacleDetectionAlgo();
        assert_that(compare_detections(actual_detections), is_equal_to(7));
    }

    TestSuite* master_controller_suite()
    {
        TestSuite* master_suite = create_test_suite();
        add_test(master_suite, test_obstacle_avoidance);
        add_test(master_suite, test_obstacle_detection);
        return master_suite;
    }

On board debug indications

| Sr.No | LED Number | Debug Signal |
|---|---|---|
| 1 | LED 1 | Sensor Heartbeat, Sensor Data Mia |
| 2 | LED 2 | Geo Heartbeat, Turning Angle Signal Mia |
| 3 | LED 3 | Bridge Heartbeat mia |
| 4 | LED 4 | Motor Heartbeat mia |

Design Challenges

The critical parts of the Obstacle Avoidance algorithm are the design of (1) obstacle detection and (2) obstacle avoidance. Since the LIDAR gives a 360-degree view of obstacles, the zones have to be grouped into sectors and tracks so that the 360-degree detection can be processed and a decision taken accordingly.
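One way to picture the grouping of sectors and tracks is sketched below. Everything specific in it is an assumption made for illustration: that a sector value of 0 means "no obstacle", that values 1 to 12 are track numbers with smaller meaning closer, and that the sector indices listed for front, right, rear and left match the real mounting of the LIDAR. The project's actual grouping may differ.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <initializer_list>

// Hypothetical summary of where obstacles were seen.
struct Detections {
    bool front;
    bool right;
    bool rear;
    bool left;
};

// Assumption: 0 = no obstacle, 1..12 = track number, smaller track = closer obstacle.
inline bool sector_blocked(uint8_t track, uint8_t near_limit) {
    return track != 0 && track <= near_limit;
}

// True if any sector in the group reports an obstacle within near_limit tracks.
inline bool any_blocked(const std::array<uint8_t, 12>& sectors,
                        std::initializer_list<std::size_t> group,
                        uint8_t near_limit) {
    for (std::size_t idx : group) {
        if (sector_blocked(sectors[idx], near_limit)) return true;
    }
    return false;
}

// Assumed sector-to-direction mapping, chosen only for this example.
inline Detections group_detections(const std::array<uint8_t, 12>& sectors, uint8_t near_limit) {
    Detections d{};
    d.front = any_blocked(sectors, {11, 0}, near_limit);
    d.right = any_blocked(sectors, {1, 2, 3}, near_limit);
    d.rear = any_blocked(sectors, {5, 6}, near_limit);
    d.left = any_blocked(sectors, {8, 9, 10}, near_limit);
    return d;
}
```

Reducing twelve sector values to a handful of flags keeps the avoidance logic small enough to unit test case by case.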

Motor Controller

Design & Implementation

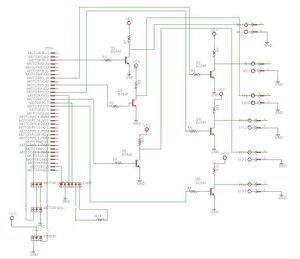

The Motor Controller is responsible for the movement and steering of the car. It drives two types of motors: a DC motor for movement and a DC servo motor for steering. The motor has an inbuilt driver called the ESC (Electronic Speed Control) circuit, which is used to manipulate the speed and steering of the car. It has a PWM input for both the servo motor and the DC motor. An RPM sensor provides feedback from the motor so that the speed can be monitored.

Hardware Design

SJOne Pin Diagram

| Sr.No | Pin Number | Pin Function |
|---|---|---|
| 1 | P0.1 | HEADLIGHTS |
| 2 | P1.19 | BRAKELIGHTS |
| 3 | P1.20 | LEFT INDICATORS |
| 4 | P1.22 | RIGHT INDICATORS |
| 5 | P0.26 | RPM SENSOR |
| 6 | P2.0 | SERVO PWM |
| 7 | P2.1 | MOTOR PWM |

Hardware Specifications

- 1. DC Motor, Servo and ESC

The DC motor is a Traxxas Titan 380 18-turn brushed motor. It comes with the Electronic Speed Control (ESC) module, which can control both the servo and the DC motor using Pulse Width Modulation (PWM). The ESC also requires an initial calibration:

The ESC is operated using PWM signals. The DC motor PWM is mapped to the range -100% to 100%, where -100% means "reverse at full speed", 100% means "forward at full speed" and 0% means "stop or neutral".

The servo can also be operated safely using PWM.

Servo motors are used where a bounded 0 to 180 degree motion is needed, for example in robot arms and humanoids. A servo is an ordinary motor with a potentiometer connected to its shaft; the potentiometer provides analog position feedback, and the servo adjusts its angle according to the input signal.

To operate a servo, it needs a supply (VCC) of 5 V to 6 V (industrial servos require more), a ground, and a signal that sets its position. The signal is a PWM waveform with a frequency of about 50 Hz to 200 Hz (refer to the datasheet). At 50 Hz, one PWM period is 20 ms. Within that 20 ms, an on-time of 1 ms and an off-time of 19 ms generally gives the 0 degree position, and increasing the on-time from 1 ms to 2 ms moves the angle from 0 to 180 degrees. There are three common ways this can go wrong:

Power: Applications commonly use a higher-voltage battery and bring the voltage down to 5 V through regulators. The servo must not be powered through the microcontroller, because it draws a large amount of current.

The servo is sometimes powered directly from the battery so that the microcontroller is not damaged. If that supply is too high, for example 12 V, the servo will burn out permanently.

PWM: The pulse width must stay strictly between 1 ms and 2 ms (refer to the datasheet). If the pulse width goes outside this range by mistake, the servo starts jittering, keeps heating up and eventually burns out. This problem is easy to identify, because a jittering servo makes an audible noise. If the noise is present when the servo is switched on, switch it off immediately and fix the code.

Load: Hobby servos do not have a high load-bearing capacity, and by design a servo always tries to reach the angle given by the signal. If the load is too high, the servo cannot move any further while the signal keeps forcing it, so it overheats and burns out. To avoid this, load the servo only within its safe rated limit.
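The pulse-width arithmetic above, together with the rule that the pulse must never leave the 1 ms to 2 ms window, can be written down as a small helper. This is a minimal sketch assuming a 50 Hz signal and a linear 0 to 180 degree mapping, as described in the text; the constant and function names are hypothetical, and a real servo's limits should be taken from its datasheet.

```cpp
#include <algorithm>

// Assumed timing, taken from the description above.
constexpr double kMinPulseMs = 1.0;  // roughly the 0 degree position
constexpr double kMaxPulseMs = 2.0;  // roughly the 180 degree position
constexpr double kPeriodMs = 20.0;   // one PWM period at 50 Hz

// Convert a requested angle into a pulse width, clamping the request so the
// pulse can never leave the safe 1 ms to 2 ms window.
inline double servo_pulse_ms(double angle_deg) {
    const double clamped = std::min(180.0, std::max(0.0, angle_deg));
    return kMinPulseMs + (clamped / 180.0) * (kMaxPulseMs - kMinPulseMs);
}

// Express a pulse width as a duty cycle in percent of the 20 ms period.
inline double duty_percent(double pulse_ms) {
    return pulse_ms / kPeriodMs * 100.0;
}
```

Clamping in software addresses only the PWM failure mode; the power and load limits still have to be respected in hardware.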

- 2. RPM Sensor

The RPM sensor above requires a specific kind of Installation. STEPS ARE:

Once the installation is done, the RPM can be read using the magnetic RPM sensor. The sensor gives one high pulse for every rotation of the wheel. To calculate the RPM, the output of the sensor is fed to a GPIO pin of the SJOne board.
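Since the sensor produces one pulse per wheel rotation, the RPM follows directly from the number of pulses counted in a known time window. The sketch below shows that arithmetic; the function names are hypothetical, and the wheel circumference used to convert RPM into speed is a value the reader would have to measure.

```cpp
#include <cassert>
#include <cstdint>

// One pulse per wheel revolution: revolutions per minute is the pulse count
// scaled from the measurement window (in seconds) to 60 seconds.
inline double rpm_from_pulses(uint32_t pulses, double window_s) {
    assert(window_s > 0.0);  // a zero-length window gives no usable measurement
    return static_cast<double>(pulses) * 60.0 / window_s;
}

// Convert wheel RPM to speed in km/h.
// Assumption: wheel_circumference_m is measured on the actual car.
inline double speed_kph(double rpm, double wheel_circumference_m) {
    const double metres_per_hour = rpm * wheel_circumference_m * 60.0;
    return metres_per_hour / 1000.0;
}
```

For example, 5 pulses counted in a 1 second window correspond to 300 RPM.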

Motor Module Hardware Interface

The hardware connections of the Motor Module are shown in the schematic above. The motor controller receives signals from the Master over the CAN bus, and the RPM sensor reading is sent back to the Master as the current speed of the car. The speed sent over the CAN bus is also used by the I/O module and the BLE module to show the value on the LED display and in the Android app.

Software Design

The following diagram describes the flow of the software implementation for the motor driver and speed feedback mechanism.

Motor Module Implementation

The motor controller is operated based on the CAN messages received from the Master, which sends the Drive and Steer commands. The motor controller converts the values received from the Master (-100 to +100 for the drive speed in percent, and -100 to +100 for the steering, covering a turn range of 1 to 180 degrees) into the specific PWM values required by the DC motor and the servo.
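The conversion described above might look like the following sketch. The timing values are assumptions based on common RC hardware (1.5 ms neutral pulse, 0.5 ms of travel either way), not figures confirmed by this project, and the function names are hypothetical. The steering mapping places a command of 0 at the 90 degree centre position.

```cpp
#include <algorithm>

// Assumed RC-style timing: 1.5 ms is neutral, +/-0.5 ms is full scale.
constexpr double kNeutralMs = 1.5;
constexpr double kSpanMs = 0.5;

// Keep any command from the Master inside the valid -100..+100 range.
inline double clamp_percent(double pct) {
    return std::min(100.0, std::max(-100.0, pct));
}

// Drive command: -100 % (full reverse) .. +100 % (full forward) -> pulse width in ms.
inline double drive_command_to_pulse_ms(double pct) {
    return kNeutralMs + (clamp_percent(pct) / 100.0) * kSpanMs;
}

// Steer command: -100 % .. +100 % -> servo angle in degrees, centred at 90.
inline double steer_command_to_angle_deg(double pct) {
    return 90.0 + (clamp_percent(pct) / 100.0) * 90.0;
}
```

Clamping the command before scaling means an out-of-range value on the CAN bus cannot push the pulse width outside the window the ESC and servo expect.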

- Speed Regulation:

On an uphill slope the pulse frequency from the RPM sensor drops, which means the car is slowing down. In that case the car is accelerated (the PWM is increased) to maintain the required speed. Similarly, on a downhill slope the pulse frequency increases, which means the car is speeding up, so the brakes are applied (the PWM is reduced) to compensate for the increased speed.
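A simple way to express this behaviour is a proportional correction: the PWM is nudged up when the measured RPM is below the target and down when it is above. The sketch below is an assumption about how such a loop could be written, not the project's implementation; `regulate_pwm`, the gain value and the 0 to 100 % output range are all hypothetical.

```cpp
#include <algorithm>

// Proportional speed correction.
// error > 0: car is slower than requested (uphill)   -> raise the PWM.
// error < 0: car is faster than requested (downhill) -> lower the PWM.
inline double regulate_pwm(double current_pwm_pct,
                           double target_rpm,
                           double measured_rpm,
                           double gain) {
    const double error = target_rpm - measured_rpm;
    const double next = current_pwm_pct + gain * error;
    // Keep the forward drive command inside 0..100 %.
    return std::min(100.0, std::max(0.0, next));
}
```

Called once per control period with the latest RPM reading, this raises the output on a ramp and lowers it on a descent; the gain has to be tuned on the actual car.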

Sensor Controller

The Sensor is for detecting and avoiding obstacles. For this purpose we have used RPLIDAR by SLAMTEC.

Introduction