Difference between revisions of "S21: UTAH"

Proj user13 (talk | contribs) (→Hardware Design) |

Proj user13 (talk | contribs) (→Challenges) |

||

| (135 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

== UTAH: Unit Tested to Avoid Hazards == | == UTAH: Unit Tested to Avoid Hazards == | ||

| + | [[File:Group pic.jpg|thumb|700x700px|Team UTAH|center]] | ||

== Abstract == | == Abstract == | ||

| Line 5: | Line 6: | ||

== Objectives & Introduction == | == Objectives & Introduction == | ||

| − | |||

==== Objectives ==== | ==== Objectives ==== | ||

* RC car can communicate with an Android application to: | * RC car can communicate with an Android application to: | ||

| Line 25: | Line 25: | ||

# Motor | # Motor | ||

| − | + | === Team Members & Responsibilities === | |

| − | |||

[[File:Akash Vachhani profilepic.jpg|200px|middle]] | [[File:Akash Vachhani profilepic.jpg|200px|middle]] | ||

*'''''[https://www.linkedin.com/in/akash-vachhani/ Akash Vachhani]''''' '''''[https://gitlab.com/infallibleprogrammer Gitlab]''''' | *'''''[https://www.linkedin.com/in/akash-vachhani/ Akash Vachhani]''''' '''''[https://gitlab.com/infallibleprogrammer Gitlab]''''' | ||

** Leader | ** Leader | ||

** Geographical Controller | ** Geographical Controller | ||

| + | ** Master Controller | ||

[[File:jbeardphoto.jpg|200px|middle]] | [[File:jbeardphoto.jpg|200px|middle]] | ||

| Line 38: | Line 38: | ||

** Communication Bridge Controller | ** Communication Bridge Controller | ||

| − | *Ameer Ali | + | [[File:AmeerAli.jpg|200px|middle]] |

| + | *'''''[https://www.linkedin.com/in/ameer-ali-13703b127/ Ameer Ali]''''' '''''[https://gitlab.com/Ameer12 Gitlab]''''' | ||

** Master Controller | ** Master Controller | ||

| Line 44: | Line 45: | ||

*Jonathan Tran '''''[https://gitlab.com/jtran1028 Gitlab]''''' | *Jonathan Tran '''''[https://gitlab.com/jtran1028 Gitlab]''''' | ||

** Sensors Controller | ** Sensors Controller | ||

| − | |||

| − | |||

| − | |||

[[File:Download.jpeg|200px|middle]] | [[File:Download.jpeg|200px|middle]] | ||

*Shreevats Gadhikar '''''[https://gitlab.com/Shreevats Gitlab]''''' | *Shreevats Gadhikar '''''[https://gitlab.com/Shreevats Gitlab]''''' | ||

| + | ** Motor Controller | ||

| + | ** Hardware Integration | ||

| + | ** PCB Designing | ||

| + | |||

| + | *Amritpal Sidhu | ||

** Motor Controller | ** Motor Controller | ||

| Line 136: | Line 139: | ||

*<span style="color:magenta">Plan motor controller API and create basic software flow of API</span> | *<span style="color:magenta">Plan motor controller API and create basic software flow of API</span> | ||

| | | | ||

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 5 | ! scope="row"| 5 | ||

| Line 153: | Line 156: | ||

*<span style="color:red">Begin coding and digesting adafruit Compass data. Print Compass data.</span> | *<span style="color:red">Begin coding and digesting adafruit Compass data. Print Compass data.</span> | ||

| | | | ||

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 6 | ! scope="row"| 6 | ||

| Line 179: | Line 182: | ||

*<span style="color:magenta">Write motor controller modules and tests</span> | *<span style="color:magenta">Write motor controller modules and tests</span> | ||

| | | | ||

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | + | * <span style="color:green">Completed</span> | |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | + | * <span style="color:green">Completed</span> | |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | ||

| − | * <span style="color:green"> | ||

|- | |- | ||

! scope="row"| 7 | ! scope="row"| 7 | ||

| Line 202: | Line 203: | ||

*<span style="color:orange">Create a Checkpoint Navigation algorithm for Driver</span> | *<span style="color:orange">Create a Checkpoint Navigation algorithm for Driver</span> | ||

| | | | ||

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 8 | ! scope="row"| 8 | ||

| Line 219: | Line 220: | ||

*<span style="color:orange">Establish Communication between the LCD display and Master Board over I2C</span> | *<span style="color:orange">Establish Communication between the LCD display and Master Board over I2C</span> | ||

| | | | ||

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 9 | ! scope="row"| 9 | ||

| Line 237: | Line 238: | ||

*<span style="color:orange">Create an algorithm to account for speed when the car is on an incline</span> | *<span style="color:orange">Create an algorithm to account for speed when the car is on an incline</span> | ||

| | | | ||

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

|- | |- | ||

| Line 263: | Line 264: | ||

*<span style="color:magenta">Tuned and tested PID on RC Car (More refinement needed)</span> | *<span style="color:magenta">Tuned and tested PID on RC Car (More refinement needed)</span> | ||

| | | | ||

| − | *<span style="color: | + | * <span style="color:green">Completed</span> |

| − | *<span style="color: | + | * <span style="color:green">Completed</span> |

| − | *<span style="color:green"> | + | *<span style="color:green">Completed</span> |

| − | *<span style="color:green"> | + | *<span style="color:green">Completed</span> |

| − | *<span style="color:green"> | + | *<span style="color:green">Completed</span> |

| − | *<span style="color:green"> | + | *<span style="color:green">Completed</span> |

| − | *<span style="color:green"> | + | *<span style="color:green">Completed</span> |

| − | *<span style="color: | + | * <span style="color:green">Completed</span> |

| − | *<span style="color:green"> | + | *<span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 11 | ! scope="row"| 11 | ||

| Line 283: | Line 284: | ||

*<span style="color:black">Integrate all the parts on the PCB. </span> | *<span style="color:black">Integrate all the parts on the PCB. </span> | ||

| | | | ||

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:Green"> | + | * <span style="color:Green">Completed</span> |

|- | |- | ||

! scope="row"| 12 | ! scope="row"| 12 | ||

| Line 299: | Line 300: | ||

*<span style="color:magenta">Finished tuning PID and tested RC Car driving on slope</span> | *<span style="color:magenta">Finished tuning PID and tested RC Car driving on slope</span> | ||

| | | | ||

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 13 | ! scope="row"| 13 | ||

| Line 312: | Line 313: | ||

| | | | ||

*<span style="color:black">Update Wiki schedule and begin draft for individual controller documentation</span> | *<span style="color:black">Update Wiki schedule and begin draft for individual controller documentation</span> | ||

| − | |||

| | | | ||

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

| − | |||

|- | |- | ||

! scope="row"| 14 | ! scope="row"| 14 | ||

| Line 324: | Line 323: | ||

| | | | ||

*<span style="color:black">Update Wiki individual controller and general documentation</span> | *<span style="color:black">Update Wiki individual controller and general documentation</span> | ||

| − | |||

*<span style="color:orange">Last minute bug fixes/refining</span> | *<span style="color:orange">Last minute bug fixes/refining</span> | ||

*<span style="color:orange">Waypoint algorithm integration and test</span> | *<span style="color:orange">Waypoint algorithm integration and test</span> | ||

*<span style="color:magenta">Last minute bug fixes/refining & code cleanup</span> | *<span style="color:magenta">Last minute bug fixes/refining & code cleanup</span> | ||

| | | | ||

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color:green"> | + | * <span style="color:green">Completed</span> |

|- | |- | ||

! scope="row"| 15 | ! scope="row"| 15 | ||

| Line 341: | Line 339: | ||

<div style="text-align:center">05/30/2021</div> | <div style="text-align:center">05/30/2021</div> | ||

| | | | ||

| − | *<span style="color:black"></span> | + | *<span style="color:black">Demo Project</span> |

| − | *<span style="color:black"></span> | + | *<span style="color:black">Finalize Wiki Documentation</span> |

| | | | ||

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

| − | * <span style="color: | + | * <span style="color:green">Completed</span> |

|- | |- | ||

|} | |} | ||

| Line 414: | Line 412: | ||

| 1 | | 1 | ||

| $40.00 | | $40.00 | ||

| + | |- | ||

| + | ! scope="row"|10 | ||

| + | | CAN transceiver | ||

| + | | Amazon [https://www.amazon.com/SN65HVD230-CAN-Board-Communication-Development/dp/B00KM6XMXO/ref=sr_1_1?dchild=1&keywords=can+waveshare&qid=1622233988&sr=8-1] | ||

| + | | 4 | ||

| + | | $40.00 | ||

| + | |- | ||

| + | ! scope="row"|11 | ||

| + | | Maxbotix MB1010 LV-MaxSonar-EZ1 Ultra Sonic Sensors | ||

| + | | Maxbotix [https://www.maxbotix.com/Ultrasonic_Sensors/MB1010.htm] | ||

| + | | 5 | ||

| + | | $121.50 | ||

|} | |} | ||

| Line 422: | Line 432: | ||

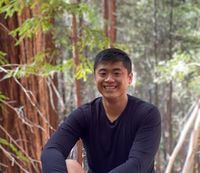

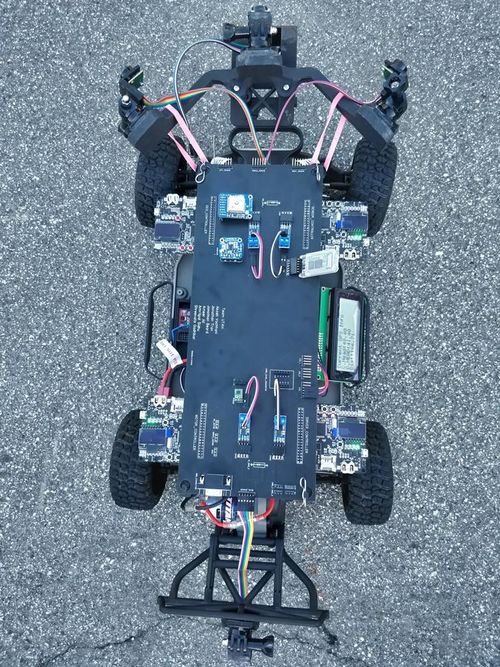

We started with a very basic design for our RC car on a breadboard. All the components were integrated on the breadboard for testing purposes. | We started with a very basic design for our RC car on a breadboard. All the components were integrated on the breadboard for testing purposes. | ||

| + | |||

[[File:Breadboard Wiring.jpeg|1000px|center|thumb|Breadboard Wiring]] | [[File:Breadboard Wiring.jpeg|1000px|center|thumb|Breadboard Wiring]] | ||

| − | We later had a PCB manufactured. In our design we minimized the use of surface mount components and additional passive components. We reused the CAN transceiver modules we all purchased and the only passive components on the board were the two 120Ω termination resistors needed at each end of the CAN bus. The remainder of the PCB used a combination of male and female pin headers to connect all components together. | + | |

| + | === Challenges === | ||

| + | The breadboard wiring worked on the first attempt, but as we kept adding components to meet the requirements, the wiring became tangled and messy, to the point that the car could not navigate properly. Every time the car collided with something, wires came loose, so reconnecting them and debugging the hardware after each collision became the main challenge. We therefore decided to move to a PCB. | ||

| + | |||

| + | <hr> | ||

| + | <br> | ||

| + | |||

| + | |||

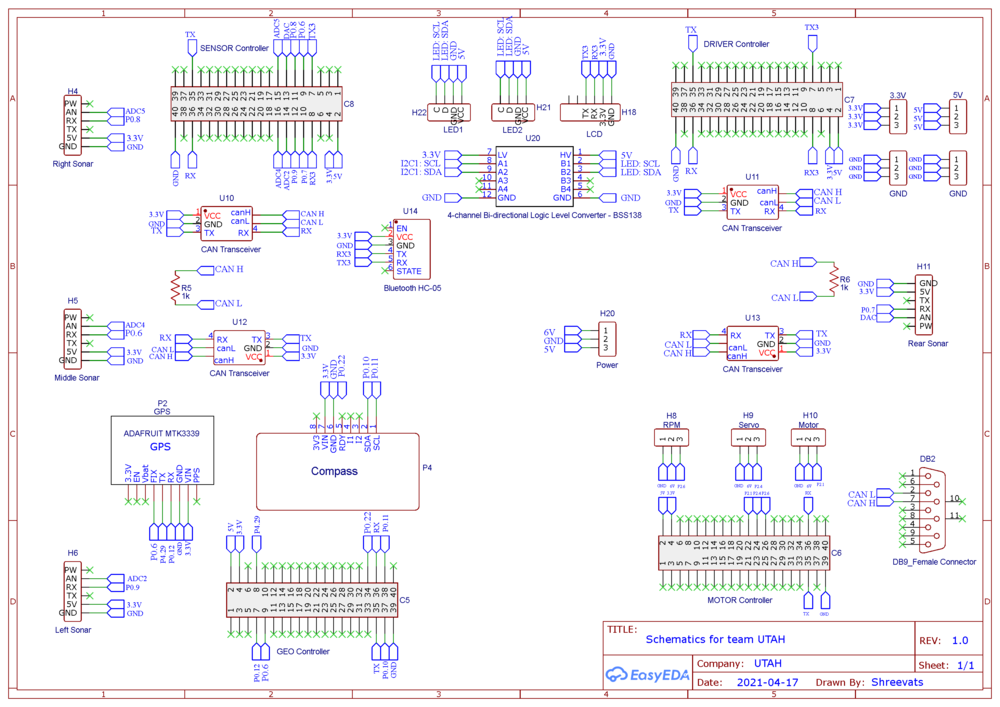

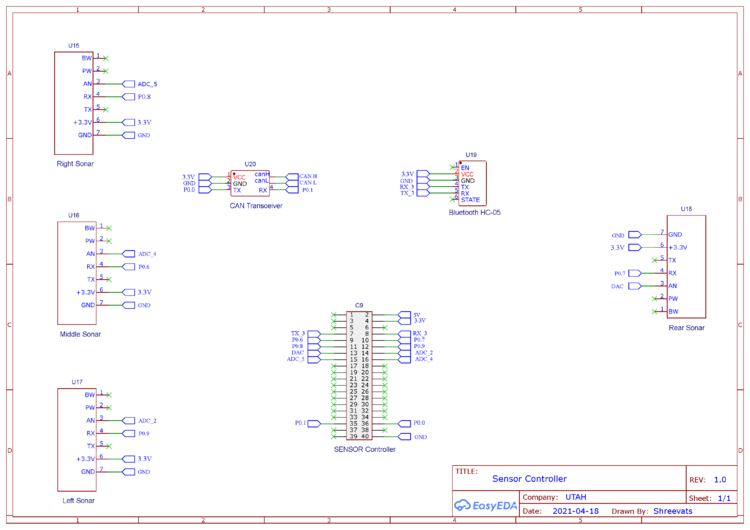

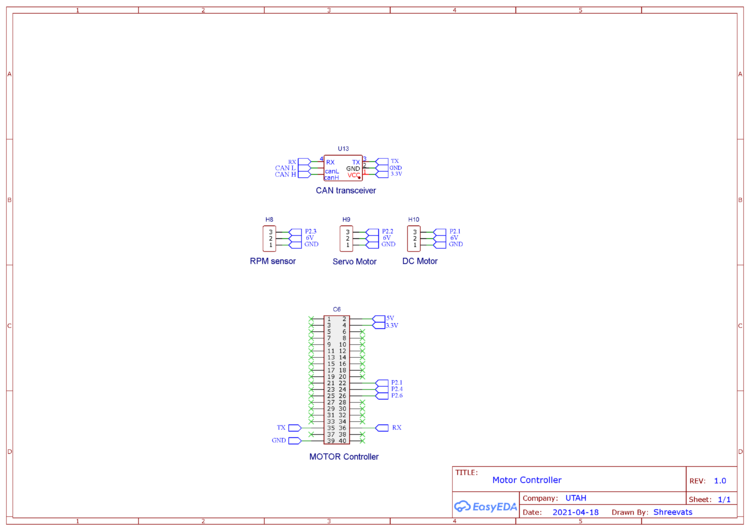

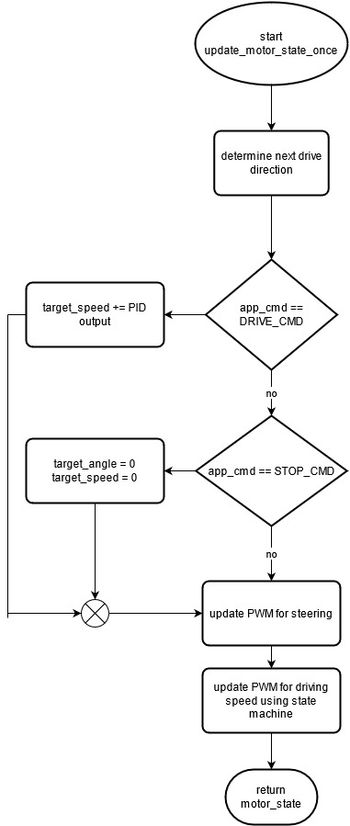

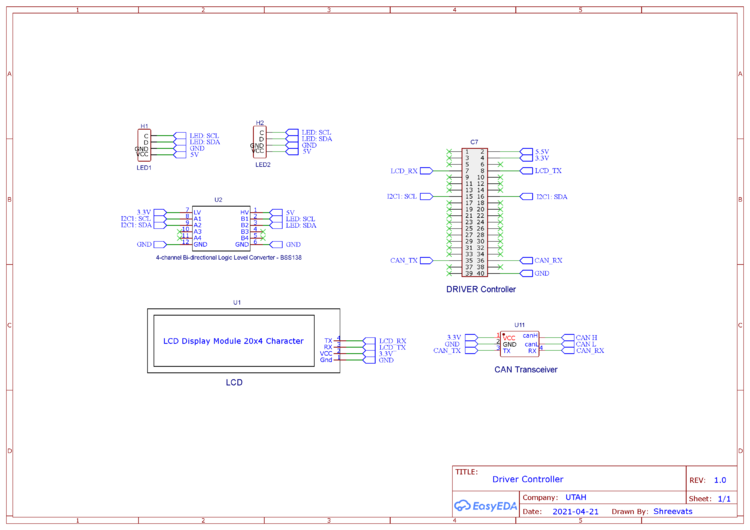

| + | We later had a PCB manufactured. In our design we minimized the use of surface-mount components and additional passive components. We reused the CAN transceiver modules we had all purchased, and the only passive components on the board were the two 120Ω termination resistors, one at each end of the CAN bus. The remainder of the PCB used a combination of male and female pin headers to connect all components together. The design and implementation worked on the first attempt. | ||

| + | |||

[[File:Schematic UTAH final.png|1000px|center|thumb|PCB Schematic]] | [[File:Schematic UTAH final.png|1000px|center|thumb|PCB Schematic]] | ||

| Line 434: | Line 454: | ||

[[File:PCB.PNG|1000px|center|thumb|PCB Rendering front side]] | [[File:PCB.PNG|1000px|center|thumb|PCB Rendering front side]] | ||

| − | + | [[File:Car11.jpeg|500px|center|thumb|Final design of car]] | |

| − | |||

| − | |||

| − | |||

| − | + | To overcome the challenges we faced on the breadboard, we designed a custom PCB in EasyEDA. EasyEDA supports creating and editing schematic diagrams and printed circuit board layouts and, optionally, ordering the manufactured boards. Our PCB carries the connections for all four controllers: the Master Controller, Geo Controller, Sensor Controller, and Motor Controller. These four controllers communicate over a CAN bus. The GPS and compass are connected to the Geo Controller. The four Maxbotix ultrasonic sensors (three in front and one in the rear) and the Bluetooth module are connected to the Sensor Controller, while the LCD and LEDs are connected to the Master Controller. The RPM sensor, DC motor, and servo motor are connected to the Motor Controller. Each of the four CAN transceivers is connected to its respective board. |

| − | + | ||

| + | ==Fabrication== | ||

| + | |||

| + | Using EasyEDA, we designed a 2-layer PCB. The design was sent for fabrication to JLCPCB in China, which delivered the boards within 7 days with a minimum order quantity (MOQ) of 5. The dimensions of the PCB were 12.5" * 4.5" (332.61mm * 129.54mm). The four controllers were placed at the four corners of the PCB while the other components were placed at the center. | ||

| − | < | + | '''PCB Properties:''' <br/> |

| − | <br> | + | Size: 332.61mm * 129.54mm <br/> |

| + | Signal Layers: 2 <br/> | ||

| + | Components: 35 <br/> | ||

| + | Routing width: 0.254mm <br/> | ||

| + | Track Width: 1mm <br/> | ||

| + | Clearance: 0.3mm <br/> | ||

| + | Via diameter: 0.61mm <br/> | ||

| + | Via Drill Diameter: 0.31mm <br/> | ||

| + | Vias: 20 <br/> | ||

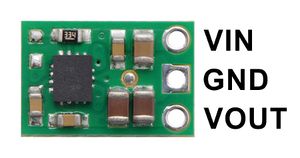

==Power Management== | ==Power Management== | ||

| Line 453: | Line 481: | ||

| − | + | [[File:Power IC.jpg|300px|center|Pololu 5V Power IC]] | |

| − | + | ==Challenges== | |

| − | |||

1. Calculating the power requirement for each device. <br/> | 1. Calculating the power requirement for each device. <br/> | ||

| − | 2. Using only one battery was a challenge.<br/> | + | 2. Getting the pinout information for every controller. <br/> |

| − | + | 3. Using only one battery was a challenge.<br/> | |

| − | + | 4. Splitting the power to 3.3V, 5V, 6V from one battery to different components.<br/> | |

| − | + | 5. Finding the pitch and dimensions of each component and placing them in the correct place and orientation.<br/> | |

| − | + | 6. Finding the correct power IC which can serve all the requirements.<br/> | |

| + | 7. Routing the wires on the PCB. <br/> | ||

<HR> | <HR> | ||

| Line 468: | Line 496: | ||

== CAN Communication == | == CAN Communication == | ||

| − | |||

Our message IDs were chosen based upon an agreed priority scheme. We assigned high priority IDs to messages that controlled the movement of the car, followed by sensor and GEO messages, and lowest priority messages were for debug and status. | Our message IDs were chosen based upon an agreed priority scheme. We assigned high priority IDs to messages that controlled the movement of the car, followed by sensor and GEO messages, and lowest priority messages were for debug and status. | ||

| Line 629: | Line 656: | ||

== Sensor ECU == | == Sensor ECU == | ||

| − | + | https://gitlab.com/infallibleprogrammer/utah/-/tree/user/jtran1028/%2311/refresh-one-sensor-per-20hz | |

=== Hardware Design === | === Hardware Design === | ||

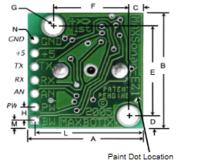

| − | + | The sensor of choice for UTAH was the LV-MAXSonar-EZ1 MB1010. The LV-MAXSonar-EZ family of ultrasonic sensors comes in five models: the MB1000, MB1010, MB1020, MB1030, and MB1040. The models differ in the beam width of the sensor: the MB1000 has the widest beam pattern, whereas the MB1040 has the narrowest. We decided that the MB1010 was best suited for object detection. If the beam pattern is too wide, as with the MB1000, there is a greater chance of cross talk between sensors. If the beam pattern is too narrow, there may be blind spots which must be accounted for. Thus, four LV-MAXSonar-EZ1 MB1010 sensors were used. Three sensors were placed in the front and one in the rear of the vehicle. Note that the SJTWO board is configured to have three ADC channels, so to support a fourth sensor, the DAC pin was reconfigured as an ADC channel. This configuration is discussed further in the software design. |

| − | + | ||

<br/> | <br/> | ||

{| style="margin-left: auto; margin-right: auto; border: none;" | {| style="margin-left: auto; margin-right: auto; border: none;" | ||

| − | |[[File:LV-EZ-Ultrasonic-Range-Finder-600x600.jpg|165px|left|thumb|Front view of the MB1010 LV-MAXSonar-EZ1]] | + | |[[File:LV-EZ-Ultrasonic-Range-Finder-600x600.jpg|165px|left|thumb|upright=0.4|Front view of the MB1010 LV-MAXSonar-EZ1]] |

| | | | ||

|[[File:LV-MaxSonar-EZ1_PINS.png|200px|left|thumb|upright=0.4|Rear view of the MB1010 LV-MAXSonar-EZ1 with pin labels]] | |[[File:LV-MaxSonar-EZ1_PINS.png|200px|left|thumb|upright=0.4|Rear view of the MB1010 LV-MAXSonar-EZ1 with pin labels]] | ||

| | | | ||

|} | |} | ||

| + | [[File:Sensor Controller.png|750px|center|thumb|Sensor Hardware Design]] | ||

| + | |||

| + | <h5>Sensor Mounts and Placement</h5> | ||

| + | Sensor mounts were 3D printed and attached to the front and rear bumpers of the RC car. The prints were designed so that GoPro mounts could be attached to them. The GoPro mounts allowed the sensors to tilt and rotate to a desired position. The 3D prints were created by David Brassfield. David was not a part of team UTAH, but his contribution to our project was crucial, and we deeply appreciate his craftsmanship. | ||

| + | |||

| + | The sensors were positioned and angled away from each other to minimize cross talk. The GoPro mounts also allowed the sensors to be tilted away from the ground to avoid false detections. During initial testing without the mounts, objects were being "detected" because the pulses from the sensors were reflecting off the ground. However, tilting the sensors too far upward may cause real objects to be missed or to appear farther away than they are. Through trial and error, a suitable tilt was found for each sensor and fixed in that position. | ||

| + | |||

| + | <br/> | ||

| + | {| style="margin-left: auto; margin-right: auto; border: none;" | ||

| + | |[[File:Front_Bumper_View.jpg|400px|left|thumb|Front Sensor Mount]] || [[File:Rear_Bumper_View.jpg|300px|right|thumb|Rear Sensor Mount]] | ||

| + | | | ||

| + | |} | ||

| + | |||

| + | <h5>Sensor Range Readings Extraction</h5> | ||

| + | |||

| + | To extract range readings from the MB1010, the "AN" pin, which provides the analog output, was utilized. A range reading is commanded by driving the "RX" pin high for 20us or more. The output analog voltage is based on a scaling factor of (Vcc/512) per inch. All four of the sensors were powered from the SJTWO board, thus a 3.3V VCC was supplied to each sensor. According to the LV-MaxSonar-EZ datasheet, with a 3.3V source the analog output is expected to be roughly 6.4mV/inch. However, during initial testing the range readings were completely inaccurate. We soon found that the ADC channels on the SJTWO are configured at startup with an internal pull-up resistor enabled. Disabling this pull-up resistor is discussed in Software Design. Once this issue was resolved, the range readings were more accurate. The readings were tested by placing multiple objects in front of each sensor and measuring the distance from the object to the sensor. Although the range readings were more accurate, they did not quite follow the expected 6.4mV/inch, and as a result they were off by roughly +/- 6 inches. For instance, an object placed 25 inches away could have been detected at 31 inches. To account for this, the scaling was adjusted to 8mV/inch. This value was found by placing objects around the sensors and checking whether the analog-output-to-inch conversion matched the actual measured distance. With stationary objects, the readings were then within roughly +/- 1 inch, which was deemed suitable for object detection. | ||
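The conversion described above can be sketched as follows. This is only an illustration, not the project's code: the function names are hypothetical, and the 12-bit ADC resolution and 3.3V reference are assumptions about the SJTWO setup. The scale is passed in tenths of a millivolt per inch so that both the datasheet value (6.4mV/inch) and the calibrated value (8mV/inch) can be expressed with integer math.

```c
#include <assert.h>
#include <stdint.h>

/* Assumptions for this sketch: 12-bit ADC with a 3.3 V reference. */
#define SKETCH_ADC_MAX_COUNT 4095u
#define SKETCH_ADC_VREF_MV 3300u

/* Scaling factors in tenths of a millivolt per inch. */
#define SKETCH_DATASHEET_SCALE 64u  /* 6.4 mV/inch, roughly Vcc/512 at 3.3 V */
#define SKETCH_CALIBRATED_SCALE 80u /* 8 mV/inch, the empirically adjusted value */

/* Convert a raw ADC count to millivolts (integer math, truncating). */
uint32_t sketch_adc_count_to_millivolts(uint16_t adc_count) {
  assert(adc_count <= SKETCH_ADC_MAX_COUNT);
  return ((uint32_t)adc_count * SKETCH_ADC_VREF_MV) / SKETCH_ADC_MAX_COUNT;
}

/* Convert the analog output voltage to a distance in whole inches. */
uint32_t sketch_millivolts_to_inches(uint32_t millivolts, uint32_t tenths_of_mv_per_inch) {
  assert(tenths_of_mv_per_inch > 0u);
  return (millivolts * 10u) / tenths_of_mv_per_inch;
}
```

With these numbers, an output of 200mV converts to 25 inches at 8mV/inch but to 31 inches at 6.4mV/inch, which is consistent with the 25-versus-31 inch discrepancy described above.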

=== Software Design === | === Software Design === | ||

| − | < | + | The software design is split into two parts: an ultra sonic sensor module (1.) and a sensor controller module (2.). The ultra sonic sensor module contains functions to initialize the ADC channels, to initialize the sensors, and to extract range readings. The sensor controller uses the ultra sonic sensor module to initialize the ultra sonic sensors and extract range readings from them. In addition, the sensor controller contains the logic to transmit the readings for the front, left, right, and rear sensors over CAN to the driver controller. |

| + | |||

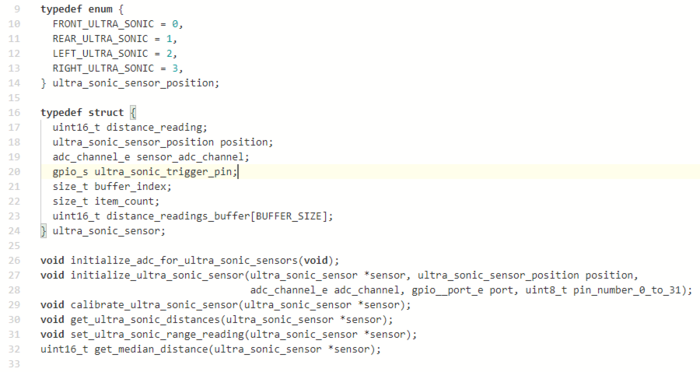

| + | <h5>1. Ultra Sonic Sensor Module</h5> | ||

| + | The ultra_sonic_sensors.h file can be seen below. This module contains an enumeration that specifies the position of each sensor. In addition, an ultra_sonic_sensor struct was created to hold each sensor's trigger pin, its position (using the enum), and related fields. The module contains basic functions to initialize the ADC channels, to initialize the sensors, and to extract range readings. In addition, a median function was added to return the median of the last three range readings for a given ultra_sonic_sensor. | ||

| + | |||

| + | <br/> | ||

| + | {| style="margin-left: auto; margin-right: auto; border: none;" | ||

| + | |[[File:ultra_sonic_sensors_header_file.PNG|700px|left|thumb|ultra_sonic_sensors.h]] | ||

| + | | | ||

| + | |} | ||
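As an illustration of the median-of-three idea, here is a minimal sketch. It is not the code from ultra_sonic_sensors.h: the struct and function names are hypothetical, and the three-slot circular history is an assumption about how the last three readings might be stored.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_HISTORY_LENGTH 3u

/* Hypothetical per-sensor history of the last three range readings. */
typedef struct {
  uint16_t readings[SKETCH_HISTORY_LENGTH];
  uint8_t next_slot; /* slot that the next reading will overwrite */
} sketch_history_s;

/* Store a new reading, overwriting the oldest one. */
void sketch_history_push(sketch_history_s *history, uint16_t inches) {
  assert(history != NULL);
  history->readings[history->next_slot] = inches;
  history->next_slot = (uint8_t)((history->next_slot + 1u) % SKETCH_HISTORY_LENGTH);
}

/* Return the middle value of three readings. */
uint16_t sketch_median_of_three(uint16_t a, uint16_t b, uint16_t c) {
  const uint16_t low = (a < b) ? a : b;
  const uint16_t high = (a < b) ? b : a;
  if (c <= low) {
    return low;
  }
  if (c >= high) {
    return high;
  }
  return c;
}

/* Median of the stored history for one sensor. */
uint16_t sketch_history_median(const sketch_history_s *history) {
  assert(history != NULL);
  return sketch_median_of_three(history->readings[0], history->readings[1], history->readings[2]);
}
```

With readings of 100, 30, and 101 inches, the median is 100, so a single spurious short reading is rejected. Only when a second short reading arrives (for example 29 replacing the 100) does the median drop to 30.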

| + | |||

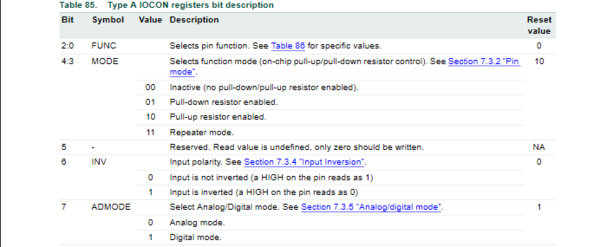

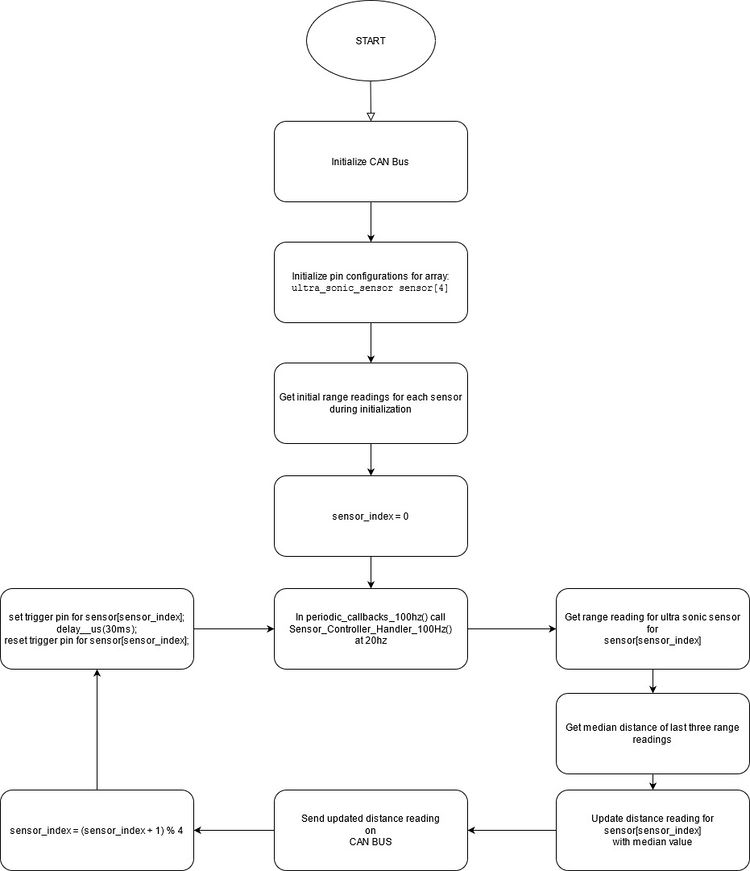

| + | <h6>Initializing the ADC channels</h6> | ||

| + | This section is added as a tip for future CMPE 243 students. The SJ2 board is configured for three ADC channels and a single DAC channel. To turn the DAC pin into a fourth ADC channel, Preet's ADC API was modified in adc.c. In addition, the source file was modified to disable the pull-up resistors. The IOCON registers can be configured following the table descriptions below. Table 86 shows that P0.26, which was configured as a DAC, can be configured for ADC0[3]. Table 85 shows the bit patterns to disable pull-up resistors and to enable analog mode. | ||

| + | |||

| + | <br/> | ||

| + | {| | ||

| + | |[[File:IOCON_register_ADC.PNG |600px|left|thumb|LPC User Manual Table Showing ADC configurations]] || [[File:ADC_pin_configuration.PNG|700px|center|thumb|LPC User Manual Table Showing Pin Configurations]] | ||

| + | | | ||

| + | |} | ||

| + | |||

| + | |||

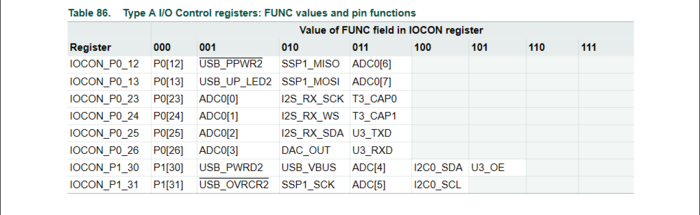

| + | <h5>2. Sensor Controller</h5> | ||

| + | The sensor controller module contains logic to initialize the sensors using the ultra_sonic_sensor_module. In addition, it contains the logic to transmit the range readings over CAN to the driver controller. Sensor_Controller_Init() initializes the ADC channels and the ultra sonic sensors using the ultra_sonic_sensor module. Sensor_Controller_100hz_handler contains the logic to trigger the sensor pins to command a range reading and to transmit the range reading for each sensor over CAN to the driver controller. One sensor's range reading is updated every 50ms to avoid cross talk. More on this is discussed in the "Accounting for Cross Talk" section. | ||

| + | | ||

| + | So that each call to Sensor_Controller_100hz_handler triggers a single sensor, an array ultra_sonic_sensor sensor[4] was created for the front, left, right, and rear sensors. A counter, labeled current_index, keeps track of which sensor's distance reading to update. | ||

| + | |||

| + | The logic for Sensor_Controller_100hz_handler goes as follows: | ||

| + | |||

| + | * 1.) get_ultra_sonic_distances(&sensor[current_sensor_index]); | ||

| + | * 2.) get_median_distance(&sensor[current_sensor_index]); | ||

| + | * 3.) Update the distance reading for the ultra sonic sensor and transmit it over the CAN BUS | ||

| + | * 4.) set current_sensor_index equal to (current_sensor_index + 1) % 4 | ||

| + | * 5.) Command a range reading for sensor[current_sensor_index]. The reading will be available after 50ms, thus the next callback for get_ultra_sonic_distances() will have an updated range reading | ||
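The five steps above can be sketched as a round-robin update. This is a simplified illustration rather than the project's handler: all sketch_ names are hypothetical, the ADC read and the RX trigger are replaced by stand-in functions, and the median filter and CAN transmit are only marked by a comment. Running the update on every fifth call of the 100Hz callback is an assumption about how the 50ms (20Hz) rate is derived.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_SENSOR_COUNT 4u
#define SKETCH_CALLBACKS_PER_UPDATE 5u /* 100 Hz / 5 = 20 Hz, one update every 50 ms */

typedef struct {
  uint16_t latest_inches; /* most recent range reading */
  uint32_t trigger_count; /* how many times a reading was commanded */
} sketch_sensor_s;

sketch_sensor_s sketch_sensors[SKETCH_SENSOR_COUNT];
uint8_t sketch_current_index = 0u;

/* Stand-in for reading the sensor's ADC channel; returns a fixed value per sensor. */
uint16_t sketch_read_inches(uint8_t index) { return (uint16_t)(10u * (index + 1u)); }

/* Stand-in for pulsing the sensor's RX pin to command a new reading. */
void sketch_command_reading(uint8_t index) { sketch_sensors[index].trigger_count++; }

/* One 20 Hz step; returns the index of the sensor whose reading was refreshed. */
uint8_t sketch_update_one_sensor(void) {
  const uint8_t refreshed = sketch_current_index;
  assert(refreshed < SKETCH_SENSOR_COUNT);

  /* Steps 1-3: read the sensor that was triggered on the previous step.
     Median filtering and the CAN transmit would happen here. */
  sketch_sensors[refreshed].latest_inches = sketch_read_inches(refreshed);

  /* Step 4: advance to the next sensor, wrapping after the last one. */
  sketch_current_index = (uint8_t)((sketch_current_index + 1u) % SKETCH_SENSOR_COUNT);

  /* Step 5: command a reading that will be ready by the next step. */
  sketch_command_reading(sketch_current_index);
  return refreshed;
}

/* Called from the 100 Hz periodic callback; only every fifth call does work. */
void sketch_periodic_100hz(uint32_t callback_count) {
  if (callback_count % SKETCH_CALLBACKS_PER_UPDATE == 0u) {
    (void)sketch_update_one_sensor();
  }
}
```

Because only one sensor is read and one is triggered per step, every sensor gets its own 50ms window, and all four readings are refreshed once every 200ms.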

| + | |||

| + | <h5>Algorithm</h5> | ||

| + | The following functions are called in periodic callbacks. Discussion on the bridge controller is found in its respective section. The flow chart of the software can be seen below. | ||

| + | |||

| + | <h6>Periodic Callbacks Initialize:</h6> | ||

| + | * initialize can bus | ||

| + | * Call Sensor_Controller_init() | ||

| + | * Call Bridge_Controller_init() | ||

| + | |||

| + | <h6>Periodic Callbacks 1Hz:</h6> | ||

| + | * Call Bridge_Controller__1hz_handler() | ||

| + | * Call can_Bridge_Controller__manage_mia_msgs_1hz() | ||

| + | |||

| + | <h6>Periodic Callbacks 100Hz:</h6> | ||

| + | * Call Sensor_Controller__100hz_handler() at 20Hz | ||

| + | |||

| + | |||

| + | <br/> | ||

| + | {| | ||

| + | |[[File:Sensor_flow_chart.jpg |750px|center|thumb|Sensor flow chart]] | ||

| + | | | ||

| + | |} | ||

| + | |||

| + | |||

| + | <h5>Accounting for Cross Talk</h5> | ||

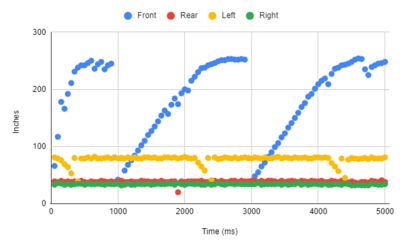

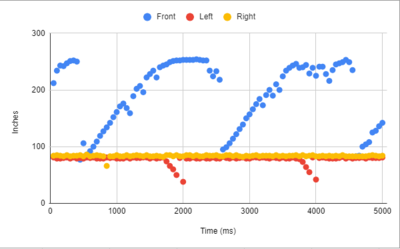

| + | Originally, all of the sensors were triggered simultaneously within a 50ms time frame. This appeared to increase cross talk between the sensors. The plots below show the range readings when no object was placed in front of the sensors and when an object was placed about 30 inches away from the right sensor. From these plots, we can see that cross talk occurs when an object is placed in front of a sensor: the left sensor reports an object at roughly the same range as the object placed in front of the right sensor. Although the cross talk occurs only a few times, each occurrence causes an object to be "detected", and the car sways to dodge it. As a result, when an object is detected by the right sensor, the left sensor may report an object as well, and the object avoidance algorithm may, for example, call for the RC car to reverse instead of moving left to dodge the object on the right. Note that the rear sensor can be ignored in the plots, as it was isolated and moved away for testing purposes. To minimize the cross talk, the sensors are triggered one at a time, one every 50ms. This gives each sensor its own 50ms time frame to take a range reading with less interference. | ||

| + | <br/> | ||

| + | {| style="margin-left: auto; margin-right: auto; border: none;" | ||

| + | |[[File:Cross_talk_plot_object_on_right.PNG |400px|left|thumb|Range Readings showing cross talk between sensors with an object placed 30 inches away from the right sensor]] || [[File:Cross_talk_plot_no_object.PNG|400px|center|thumb|Range Readings showing cross talk between sensors without objects near sensors]] | ||

| + | | | ||

| + | |} | ||

=== Technical Challenges === | === Technical Challenges === | ||

| − | + | The biggest challenge was cross talk. Although cross talk was minimized by angling the sensors away from each other, there were still occurrences of objects being "detected" even when no objects were within the threshold for object avoidance. This may have been a result of the movement of the vehicle. Whenever an object was "detected", the RC car would maneuver to avoid it even if nothing was in close proximity. The median function was added as a filtering mechanism: it returns the median of the last three range readings, which reduced the chance of false positives triggering object avoidance. However, this introduced new issues. Because each sensor is updated individually every 50ms, all four sensors have updated values only after 200ms. With the median filter taking the past three range readings into account, this adds a significant delay between readings. For an object detected below the threshold to be avoided, the previous reading must also have been below the threshold, so two range readings are needed to trigger a response, which in the worst case can take 400ms. A better approach would have been to keep track of the previous value and a delta within which the current sensor reading is deemed valid compared to the previous value. |
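One possible reading of the delta idea mentioned above is sketched here. It was not implemented in the project, so every name, the choice to accept the very first reading, and the decision to always remember the newest raw reading are assumptions made for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-sensor state for a delta-based validity check. */
typedef struct {
  uint16_t previous_inches;
  bool has_previous;
} sketch_delta_filter_s;

/* Returns true when the current reading is within max_delta_inches of the
   previous reading. The newest reading is always remembered for next time. */
bool sketch_delta_filter_is_valid(sketch_delta_filter_s *filter, uint16_t current_inches,
                                  uint16_t max_delta_inches) {
  assert(filter != NULL);
  bool valid = true; /* assumption: the first reading has nothing to compare against */

  if (filter->has_previous) {
    const uint16_t difference = (current_inches > filter->previous_inches)
                                    ? (uint16_t)(current_inches - filter->previous_inches)
                                    : (uint16_t)(filter->previous_inches - current_inches);
    valid = (difference <= max_delta_inches);
  }

  filter->previous_inches = current_inches;
  filter->has_previous = true;
  return valid;
}
```

With a 12 inch delta, a sudden jump from 100 to 30 inches is flagged as not yet valid, while a following reading of 32 inches is accepted because it agrees with the previous one. This keeps one previous sample per sensor instead of a three-sample history; the right delta would have to be found by testing on the car.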

| − | |||

<HR> | <HR> | ||

<BR/> | <BR/> | ||

| + | |||

== Motor ECU == | == Motor ECU == | ||

| − | + | ||

| + | [https://gitlab.com/infallibleprogrammer/utah/-/tree/user/amritpal_sidhu/%2310/Motor_Controller_Refine_PID Motor Source Code] | ||

=== Hardware Design === | === Hardware Design === | ||

| + | |||

| + | |||

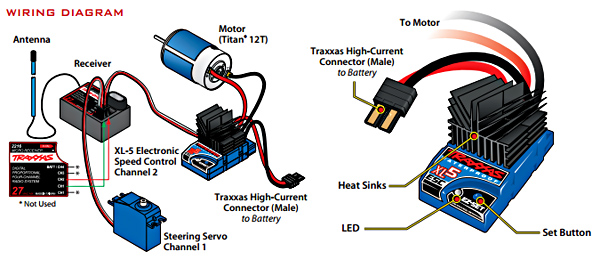

| + | [[File:Wiring diagram.jpg|750px|center|thumb|Motor hardware wiring]] | ||

| + | |||

| + | |||

[[File:Motor controller.png|750px|center|thumb|Motor hardware Design]] | [[File:Motor controller.png|750px|center|thumb|Motor hardware Design]] | ||

| Line 664: | Line 776: | ||

The job of the DC motor is to control the spinning of the rear 2 wheels through the utilization of ESC and wheel encoder whereas the job of the servo motor is to control the steering of the front 2 wheels. | The job of the DC motor is to control the spinning of the rear 2 wheels through the utilization of ESC and wheel encoder whereas the job of the servo motor is to control the steering of the front 2 wheels. | ||

| − | {| class="wikitable" style="margin- | + | {| class="wikitable" style="margin-center: auto; margin-center: auto; text-align:center;" |

|+ Motor Board Pinout | |+ Motor Board Pinout | ||

|- | |- | ||

| Line 690: | Line 802: | ||

The DC motor and ESC were provided with the RC car. The ESC drives the DC motor using PWM signals supplied by the motor controller board for forward, neutral, and reverse movement. The ESC is powered by a 7.4V LiPo battery, which it steps down to 6V to supply the DC motor. | The DC motor and ESC were provided with the RC car. The ESC drives the DC motor using PWM signals supplied by the motor controller board for forward, neutral, and reverse movement. The ESC is powered by a 7.4V LiPo battery, which it steps down to 6V to supply the DC motor. | ||

| + | |||

| + | |||

| + | [[File:DC motor.jpg|350px|center|thumb|DC Motor]] | ||

| + | |||

{| class="wikitable" style="text-align: center;" | {| class="wikitable" style="text-align: center;" | ||

| Line 695: | Line 811: | ||

! scope="col"| ESC wires | ! scope="col"| ESC wires | ||

! scope="col"| Description | ! scope="col"| Description | ||

| − | ! scope="col"| Wire Color | + | ! scope="col"| Wire Color |

|- | |- | ||

! scope="row"| Vout | ! scope="row"| Vout | ||

| Line 723: | Line 839: | ||

==== Servo Motor ==== | ==== Servo Motor ==== | ||

| + | |||

| + | [[File:Servo motor.jpg|350px|center|thumb|Servo Motor]] | ||

| + | |||

{| class="wikitable" style="text-align: center;" | {| class="wikitable" style="text-align: center;" | ||

| Line 749: | Line 868: | ||

==== Wheel Encoder ==== | ==== Wheel Encoder ==== | ||

| − | For speed sensing we purchased a Traxxas RPM sensor as it mounted nicely in the | + | For speed sensing we purchased a Traxxas RPM sensor as it mounted nicely in the gearbox. The RPM sensor works by mounting a magnet to the spur gear and a hall effect sensor fixed to the gearbox. To get the revolutions per second we used Timer2 as an input capture. <br> |
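As a rough sketch of how revolutions per second can be derived from an input-capture timer, the function below divides the timer frequency by the number of ticks between two consecutive hall effect pulses. It assumes a single magnet (one pulse per spur gear revolution) and a free-running 32-bit timer; the function name and parameters are hypothetical and are not taken from the project source.

```c
#include <stdint.h>

// Revolutions per second of the spur gear, from two consecutive capture values.
// Assumes one pulse per revolution and a free-running 32-bit capture timer.
static float revolutions_per_second(uint32_t previous_capture_ticks, uint32_t current_capture_ticks,
                                    uint32_t timer_ticks_per_second) {
  // Unsigned subtraction stays correct across a single timer rollover.
  const uint32_t elapsed_ticks = current_capture_ticks - previous_capture_ticks;
  if (elapsed_ticks == 0) {
    return 0.0f; // no new pulse captured, avoid dividing by zero
  }
  return (float)timer_ticks_per_second / (float)elapsed_ticks;
}
```

Because the magnet sits on the spur gear rather than on the wheel, converting this value to ground speed would also require the gear ratio and the wheel circumference, neither of which is stated here.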

| + | |||

| + | |||

| + | [[File:RPM.jpg|350px|center|thumb|RPM Sensor]] | ||

| + | |||

{| class="wikitable" style="text-align: center;" | {| class="wikitable" style="text-align: center;" | ||

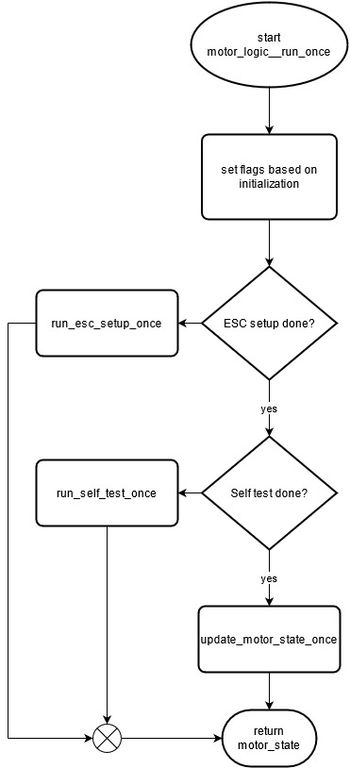

| Line 821: | Line 944: | ||

* At 20Hz receive all messages from CAN bus and update local motor data structure | * At 20Hz receive all messages from CAN bus and update local motor data structure | ||

</div> | </div> | ||

| + | |||

| + | [[File:motor_logic__run_once__picture.jpg|350px|left|thumb|motor_logic__run_once() flowchart]] | ||

| + | [[File:motor_logic__update_motor_state_once_picture.jpg|350px|center|thumb|motor_logic__update_motor_state_once() flowchart (see code, or description above, for more details on state machine)]] | ||

=== Technical Challenges === | === Technical Challenges === | ||

| Line 836: | Line 962: | ||

== Geographical Controller == | == Geographical Controller == | ||

| − | + | https://gitlab.com/infallibleprogrammer/utah/-/tree/master/projects/GEO_Controller | |

<Picture and link to Gitlab> | <Picture and link to Gitlab> | ||

=== Hardware Design === | === Hardware Design === | ||

| + | The geographical controller handles all processing of compass and GPS data. It interfaces over UART with the Adafruit Ultimate GPS Breakout, which provides accurate GPS data in GPGGA format. It uses I2C to interface with the Adafruit magnetometer, with tilt compensation, to find our heading and where the car needs to point in order to get closer to its destination. The Adafruit magnetometer gave us accurate data with a possible deviation of up to 3 degrees in any direction. This was made possible by a software filter that normalizes our data. | ||

[[File:Geo Akash.png|750px|center|thumb|Geo Hardware Design]] | [[File:Geo Akash.png|750px|center|thumb|Geo Hardware Design]] | ||

| + | {| class="wikitable" width="auto" style="text-align: center; margin-left: auto; margin-right: auto; border: none;" | ||

| + | |+Table 5. Geographical Node Pinout | ||

| + | |- | ||

| + | ! scope="col"| SJTwo Board | ||

| + | ! scope="col"| GPS/Compass Module | ||

| + | ! scope="col"| Description | ||

| + | ! scope="col"| Protocol/Bus | ||

| + | |- | ||

| + | | P4.28 (TX3) | ||

| + | | RX | ||

| + | | Adafruit GPS Breakout | ||

| + | | UART 3 | ||

| + | |- | ||

| + | | P4.29 (RX3) | ||

| + | | TX | ||

| + | | Adafruit GPS Breakout | ||

| + | | UART 3 | ||

| + | |- | ||

| + | | P0.10 (SDA2) | ||

| + | | SDA | ||

| + | | Adafruit Magnetometer | ||

| + | | I2C 2 | ||

| + | |- | ||

| + | | P0.11 (SCL2) | ||

| + | | SCL | ||

| + | | Adafruit Magnetometer | ||

| + | | I2C 2 | ||

| + | |- | ||

| + | | P0.1 (SCL1) | ||

| + | | CAN transceiver (Tx) | ||

| + | | CAN transmit | ||

| + | | CAN | ||

| + | |- | ||

| + | | P0.0 (SDA1) | ||

| + | | CAN transceiver (Rx) | ||

| + | | CAN receive | ||

| + | | CAN | ||

| + | |- | ||

| + | | Vcc 3.3V | ||

| + | | Vcc | ||

| + | | Vcc | ||

| + | | | ||

| + | |- | ||

| + | | GND | ||

| + | | GND | ||

| + | | Ground | ||

| + | | | ||

| + | |- | ||

| + | |} | ||

=== Software Design === | === Software Design === | ||

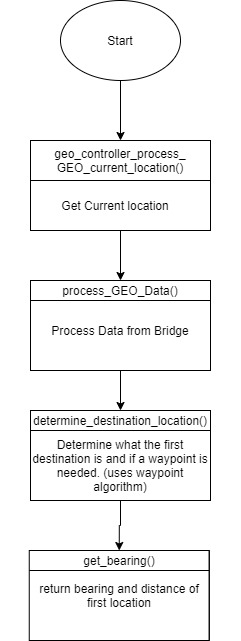

| − | < | + | The GEO controller consists of 4 main parts: 1. GPS Driver and Processing, 2. Compass Processing, 3. Waypoints Algorithm, and 4. Geo Logic. The geo logic takes data from parts 1 to 3 and determines where the car should be moving. This data is sent to the driver controller via the CAN bus. |

| + | |||

| + | <h5>1. GPS Driver and Processing</h5> | ||

| + | The GPS module uses UART3 to communicate with our SJ2 board. Initializing the GPS driver requires configuring the GPS to output only GPGGA sentences and to refresh at 10Hz (every 100ms) instead of 1Hz (every second). This solved a lot of initial issues with stale data as the car moved. The main API function is gps__run_once(), which digests data from the physical GPS hardware. Inside gps__run_once(), we call two functions that help with parsing the GPS coordinates. The first uses a line buffer to collect the UART characters received during operation and checks whether they form a complete GPGGA string. The second parses the coordinates from the line buffer and converts them from the GPGGA degrees-and-minutes format to decimal degrees. The parsed values are stored in static variables in gps.c, and gps__get_coorindate() is called whenever the data is needed outside of gps.c. To check whether there is a GPS fix, we created a function that checks whether certain GPGGA bits are set. If they are set, then we have a fix. This was more accurate than the FIX pin on the GPS module because that pin toggles every 150ms even when there is a fix. | ||
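The coordinate conversion step can be illustrated with a short sketch. GPGGA reports latitude as ddmm.mmmm and longitude as dddmm.mmmm, followed by a hemisphere letter. The helper below is hypothetical (it is not the function in gps.c) and assumes the numeric field has already been parsed out of the line buffer.

```c
// Convert an NMEA ddmm.mmmm (or dddmm.mmmm) value into signed decimal degrees.
// hemisphere is the NMEA letter that follows the field: 'N', 'S', 'E' or 'W'.
static float nmea_to_decimal_degrees(float nmea_value, char hemisphere) {
  const int whole_degrees = (int)(nmea_value / 100.0f);           // strip the mm.mmmm part
  const float minutes = nmea_value - (float)whole_degrees * 100.0f;
  float decimal_degrees = (float)whole_degrees + minutes / 60.0f; // 60 minutes per degree
  if (hemisphere == 'S' || hemisphere == 'W') {
    decimal_degrees = -decimal_degrees; // south and west are negative by convention
  }
  return decimal_degrees;
}
```

For example, a latitude field of 3720.0 with hemisphere N corresponds to 37 degrees 20 minutes, or roughly 37.333 degrees. A single-precision float keeps only about seven significant digits, so a double may be worth considering if sub-meter resolution matters.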

| + | |||

| + | <h5>2. Compass Processing</h5> | ||

| + | The compass module was one of the trickiest parts of the GEO controller due to the calibration the code required. The compass is initialized to communicate with the SJ2 board over I2C. The API is primarily one function that returns the latest heading, compass__run_once_get_heading(). This function, which returns a float, is split into three parts: get magnetometer data, get accelerometer data, and use both datasets to compute the heading. The accelerometer was not used in the initial stages of development but was required to compensate for tilt when the car was moving at high speeds. The compass required a lot of calculations to determine heading and compensate for tilt. | ||

| + | |||

| + | <h6>Heading Computation:</h6> | ||

| + | The LSM303DLHC magnetometer and accelerometer require tilt compensation to ensure accurate readings. Without tilt compensation, the compass heading can be up to 60 degrees off, making it wildly inaccurate. Once proper software-based calculations were in place, the compass was at most 3 degrees off. A software model was used to normalize the data and reduce noise from bad readings. | ||

| + | [https://www.pololu.com/file/0J434/LSM303DLH-compass-app-note.pdf#page=6 Compass Tilt Calculation] | ||

| + | [https://www.instructables.com/Tilt-Compensated-Compass-With-LSM303DHLC/ Software Model for Compass] | ||

| + | |||

| + | <h6>Normalize Data: </h6> | ||

| + | float alpha = 0.25; // alpha to create base for software optimizations | ||

| + | static float Mxcnf; | ||

| + | static float Mycnf; | ||

| + | static float Mzcnf; | ||

| + | static float Axcnf; | ||

| + | static float Aycnf; | ||

| + | static float Azcnf; | ||

| + | |||

| + | float norm_m = | ||

| + | sqrtf(magnetometer_processed.x * magnetometer_processed.x + magnetometer_processed.y * magnetometer_processed.y + | ||

| + | magnetometer_processed.z * magnetometer_processed.z); // normalize data between all 3 axis | ||

| + | float Mxz = magnetometer_processed.z / norm_m; | ||

| + | float Mxy = magnetometer_processed.y / norm_m; | ||

| + | float Mxx = magnetometer_processed.x / norm_m; | ||

| + | float norm_a = sqrtf(accel_raw_data.x * accel_raw_data.x + accel_raw_data.y * accel_raw_data.y + | ||

| + | accel_raw_data.z * accel_raw_data.z); | ||

| + | float Axz = accel_raw_data.z / norm_a; | ||

| + | float Axy = accel_raw_data.y / norm_a; | ||

| + | float Axx = accel_raw_data.x / norm_a; | ||

| + | |||

| + | Mxcnf = Mxx * alpha + (Mxcnf * (1.0 - alpha)); | ||

| + | Mycnf = Mxy * alpha + (Mycnf * (1.0 - alpha)); | ||

| + | Mzcnf = Mxz * alpha + (Mzcnf * (1.0 - alpha)); | ||

| + | |||

| + | // Low-Pass filter accelerometer | ||

| + | Axcnf = Axx * alpha + (Axcnf * (1.0 - alpha)); | ||

| + | Aycnf = Axy * alpha + (Aycnf * (1.0 - alpha)); | ||

| + | Azcnf = Axz * alpha + (Azcnf * (1.0 - alpha)); | ||

| + | *Notes: | ||

| + | ** Mxcnf: Normalized Magnetometer X Data | ||

| + | ** Mycnf: Normalized Magnetometer Y Data | ||

| + | ** Mzcnf: Normalized Magnetometer Z Data | ||

| + | ** Axcnf: Normalized Accelerometer X Data | ||

| + | ** Aycnf: Normalized Accelerometer Y Data | ||

| + | ** Azcnf: Normalized Accelerometer Z Data | ||

| + | |||

| + | The normalization code shown above takes the magnetometer and accelerometer data that has been bit shifted and saved to static variables in compass.c. Each axis value is divided by the magnitude (norm) of its three-axis vector, for both the accelerometer and the magnetometer. A low-pass filter is then applied, blending each new value with the previous filtered value to smooth out deviations and offsets. With an alpha of 0.25, each new sample contributes 25% of the filtered value and the previous filtered value contributes the remaining 75%. This data is later fed into the tilt compensation algorithm. | ||

| + | |||

| + | <h6>Tilt Calculations: </h6> | ||

| + | float pitch = asin(-Axcnf); | ||

| + | float roll = asin(Aycnf / cos(pitch)); | ||

| + | float Xh = Mxcnf * cos(pitch) + Mzcnf * sin(pitch); | ||

| + | float Yh = Mxcnf * sin(roll) * sin(pitch) + Mycnf * cos(roll) - | ||

| + | Mzcnf * sin(roll) * cos(pitch); | ||

| + | current_compass_heading = (atan2(Yh, Xh)) * 180 / PI; | ||

| + | current_compass_heading += 13; | ||

| + | if (current_compass_heading < 0) { | ||

| + | current_compass_heading = 360 + current_compass_heading; | ||

| + | } | ||

| + | |||

| + | Pitch and roll are a fundamental part of calculating the tilt. Pitch accounts for deviations about the Y axis while roll is about the X axis. In the calculation above, pitch is the arcsine of the negated Axcnf (the normalized X-axis data from the accelerometer). Roll is the arcsine of the ratio of the normalized Y-axis data to the cosine of pitch. Both are used in the Xh and Yh formulas directly below them. The compass heading is then adjusted by 13 degrees to account for our offset from true north. We need a true-north heading because the data sent to us by Google Maps is referenced to true north. If the resulting angle is below 0, we add 360 so that the heading stays in the 0 to 360 degree range that the rest of our calculations expect. | ||

| + | |||

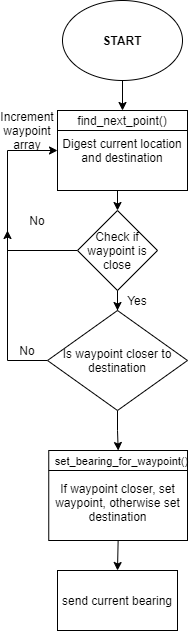

| + | <h5>3. Waypoints</h5> | ||

| + | The waypoints algorithm worked in a very simple way but proved to be effective. We use waypoints as a way to 3D map obstacles in the terrain of the SJSU 10th street garage. As shown in the map below, we had 11 waypoints overall, and the majority of them are located around the ramp on the left side of the map. We placed the waypoints so that the route goes around the ramp rather than through it. This avoids the hazard of the RC car believing it can go straight when in fact it cannot because of the circular ramp. The points were also laid out in a line so that the RC car did not unnecessarily head towards the ramp just because the closest waypoint was there. With a line of points, the car calculates the point closest to itself and goes towards that one. We also wanted to avoid redundant waypoints, so we reduced the original 20 waypoints to 11. The flow of the checkpoint API is shown below. | ||

| + | |||

| + | [[File:Waypoint algorithm.png|200px|middle|Figure #. Waypoint API Flow]] | ||

| + | [[File:UTAH Waypoints map.png|500px|middle|Figure #. Waypoint Map]] | ||

| + | |||

| + | <h6>Distance Calculations: </h6> | ||

| + | // a = sin²(Δlatitude/2) + cos(destination_lat) * cos(origin_lat) * sin²(Δlongitude/2) | ||

| + | // c = 2 * atan2(sqrt(a), sqrt(1−a)) | ||

| + | // d = (6371 *1000) * c | ||

| + | const int Earth_Radius_in_km = 6371; | ||

| + | const float delta_lat = (origin.latitude - destination.latitude) * (PI / 180); | ||

| + | const float delta_long = (origin.longitude - destination.longitude) * (PI / 180); | ||

| + | float a = pow(sinf(delta_lat / 2), 2) + | ||

| + | cosf(destination.latitude * (PI / 180)) * cosf(origin.latitude * (PI / 180)) * pow(sin(delta_long / 2), 2); | ||

| + | float c = 2 * atan2f(sqrt(a), sqrt(1 - a)); | ||

| + | return (float)(Earth_Radius_in_km * c * 1000); | ||

| + | |||

| + | To calculate distance, we must account for the curvature of the Earth as well as its radius. The code above is the haversine formula translated into C, which lets us compute the distance in meters. This is done in the find_next_point() API call, where we calculate the distance from origin to waypoint and from waypoint to destination. We are looking for the closest point that reduces the distance to the destination. If both of those conditions are true, we set that point as our waypoint. | ||
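The selection rule described above (pick the closest waypoint that also brings the car nearer to the destination) might look roughly like the sketch below. It is an assumption-laden illustration rather than the real find_next_point(): the function name, the parameter layout, and the idea of passing in distances already computed with the haversine code are all hypothetical.

```c
#include <stddef.h>

// Returns the index of the waypoint that is closest to the car among those that
// are nearer to the destination than the car currently is, or -1 if no waypoint
// qualifies (in which case the car could head straight for the destination).
// All distances are in meters and are assumed to be precomputed with haversine.
static int find_next_waypoint_index(const float *origin_to_waypoint_m, const float *waypoint_to_destination_m,
                                    size_t waypoint_count, float origin_to_destination_m) {
  int best_index = -1;
  for (size_t i = 0; i < waypoint_count; i++) {
    const int reduces_distance = waypoint_to_destination_m[i] < origin_to_destination_m;
    const int is_closer_than_best =
        (best_index < 0) || (origin_to_waypoint_m[i] < origin_to_waypoint_m[best_index]);
    if (reduces_distance && is_closer_than_best) {
      best_index = (int)i;
    }
  }
  return best_index;
}
```

Keeping the distance math separate from the selection rule makes the rule easy to unit test with plain arrays, which fits the unit-tested approach the project is named after.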

| + | |||

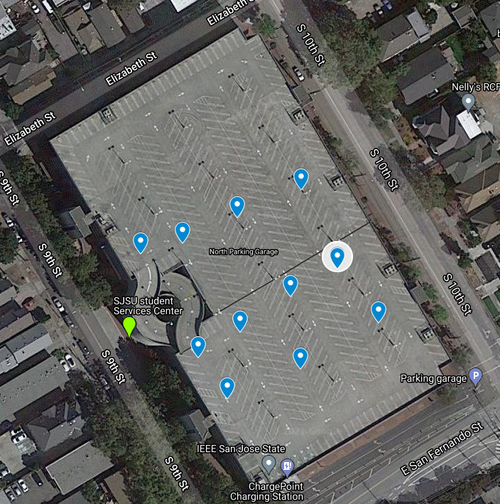

| + | <h5>4. Geo Logic</h5> | ||

| + | The geo logic is the primary API that allows the GEO controller to function. It takes data from waypoints.c, gps.c, and compass.c and processes it so that it can be sent on the CAN bus. The geo_logic.c file primarily outputs dbc structs that are used in can_module.c. With the implementation of waypoints, a lot of the calculation in geo_logic.c is no longer needed and will be edited out in the near future. In its current state, the main functions in the API are geo_controller_processs_GEO_current_location(), which gets the current GPS location of the RC car, geo_controllerprocess_geo_dat(), which takes in the gps_destination sent by the bridge controller, and determine_destination_location(), which tells the car where to go. | ||

| + | |||

| + | [[File:GEO logic.jpg|250px|center|Figure #. GEO_Logic]] | ||

| + | |||

| + | The geo logic was kept simple on purpose. We get our current location, then check whether a destination was sent to our static array. If no new destination was received, we continue on to determine whether a waypoint is required to reach the destination. If a waypoint is used, its values are passed to get_bearing(). This process repeats on every 200ms cycle of the periodic scheduler. If a new destination is appended, we add it and compute whether we should go to that new destination first. The maximum number of destinations is 5 by design. | ||

| + | |||

| + | Geo Controller Periodics: | ||

| + | <div style="margin-left:1.5%;"> | ||

| + | <h6>Periodic Callbacks Initialize:</h6> | ||

| + | * Initialize: | ||

| + | ** CAN bus | ||

| + | ** gps__init | ||

| + | ** compass__init | ||

| + | |||

| + | <h6>Periodic Callbacks 1Hz</h6> | ||

| + | * If not sent yet, send the GPS instructions to only receive GPGGA data and 10hz refresh rate | ||

| + | * Get compass update values | ||

| + | * Send debug messages every 1 second | ||

| + | |||

| + | <h6>Periodic Callbacks 10Hz</h6> | ||

| + | * Run the API gps__run_once() to fetch the latest data. | ||

| + | * Use data to determine bearing and heading of first waypoint/destination | ||

| + | ** Send this information to the driver for processing | ||

| + | *Receive all messages on the canbus that are designated for us | ||

| + | |||

| + | </div> | ||

=== Technical Challenges === | === Technical Challenges === | ||

| − | + | * Adafruit GPS | |

| + | ** Problem: The data from the GPS was being refreshed every second which was causing issues for the RC car. It was swerving too much because of the stale data. | ||

| + | *** Solution: Send the command to the GPS for a higher refresh rate (10Hz) and to send only GPGGA data to the SJ2 board. | ||

| + | ** Problem: It would take far too long for the GPS to get a fix, causing a 3-5 minute wait when indoors and over 45 seconds when outside. | ||

| + | *** Solution: Utilize the external antenna. With it, the GPS was able to get a fix in under a minute indoors and within 25 seconds outside. | ||

| − | + | * Compass | |

| − | + | ** Problem: The heading was wildly inaccurate when reading the compass. | |

| + | *** Solution: When calibrating the sensor, do not have any electronics near you. Ensure you have enough distance between the controller and the compass when in the calibration step. This ensures accurate calibration and readings. | ||

| + | ** Problem: The data on the compass was very inaccurate when compensating for tilt | ||

| + | *** Solution: Utilized magviewer to get the proper bias values for the compass sensor we used. The data was then within 3 degrees of the intended heading. | ||

| − | |||

| − | |||

== Communication Bridge Controller & LCD == | == Communication Bridge Controller & LCD == | ||

| − | https://gitlab.com/ | + | https://gitlab.com/infallibleprogrammer/utah/-/tree/master/projects/Sensor_Controller |

=== Hardware Design === | === Hardware Design === | ||

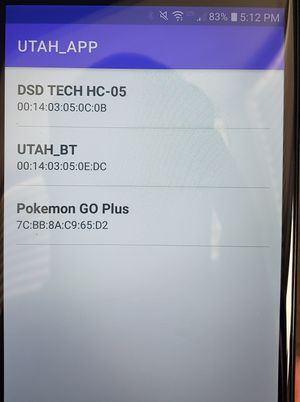

The bridge controller and sensor controllers were merged together on one board. For the hardware pinout, view the schematic located in the sensor controller section. | The bridge controller and sensor controllers were merged together on one board. For the hardware pinout, view the schematic located in the sensor controller section. | ||

| − | The hardware for the controller is a very straight forward design. | + | The hardware for the controller is a very straightforward design. The HC-05 Bluetooth module is connected to the bridge controller via UART. The phone used for testing must have Bluetooth capability. For this reason, an emulated phone could not be used for anything except graphics and viewing purposes. |

To configure the HC-05, a Raspberry Pi 4 was set up to open a terminal to the HC-05 over UART. This site was helpful in the setup: https://www.instructables.com/Modify-The-HC-05-Bluetooth-Module-Defaults-Using-A/. One note: a few sites showed the command for setting the baud rate as AT+BAUD=____. This is invalid for the HC-05, which instead requires AT+UART= followed by baud, stop, parity. | To configure the HC-05, a Raspberry Pi 4 was set up to open a terminal to the HC-05 over UART. This site was helpful in the setup: https://www.instructables.com/Modify-The-HC-05-Bluetooth-Module-Defaults-Using-A/. One note: a few sites showed the command for setting the baud rate as AT+BAUD=____. This is invalid for the HC-05, which instead requires AT+UART= followed by baud, stop, parity. | ||

| Line 871: | Line 1,164: | ||

All messages are decoded in the 10hz periodic every cycle. The Bluetooth messages are sent in chunks in the 10 Hz periodic to avoid the overlapping of the messages, sending groups of messages at one time. The grouping is as follows: Driver and motor messages, GEO, then Sensors. | All messages are decoded in the 10hz periodic every cycle. The Bluetooth messages are sent in chunks in the 10 Hz periodic to avoid the overlapping of the messages, sending groups of messages at one time. The grouping is as follows: Driver and motor messages, GEO, then Sensors. | ||

MIA checks for all messages are done in the 1 Hz periodic. This setup was extremely helpful for identifying if a board was flashed with the incorrect code or stopped sending messages that were supposed to be sent. | MIA checks for all messages are done in the 1 Hz periodic. This setup was extremely helpful for identifying if a board was flashed with the incorrect code or stopped sending messages that were supposed to be sent. | ||
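The grouped sending described above can be sketched as a simple round-robin over the 10 Hz callback count. The enum and function names here are hypothetical; only the order of the groups (driver and motor, then GEO, then sensors) comes from the description.

```c
#include <stdint.h>

// One Bluetooth message group is sent per 10 Hz callback, in a fixed rotation.
typedef enum {
  bluetooth_group_driver_and_motor = 0,
  bluetooth_group_geo,
  bluetooth_group_sensors,
  bluetooth_group_count // number of groups, not a real group
} bluetooth_group_e;

// Pick which group to send for a given 10 Hz callback count.
static bluetooth_group_e bluetooth_group_for_callback(uint32_t callback_count) {
  return (bluetooth_group_e)(callback_count % bluetooth_group_count);
}
```

With three groups in rotation, each group is refreshed on the app roughly every 300ms, which trades update rate for messages that no longer overlap on the UART link.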

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Technical Challenges === | === Technical Challenges === | ||

| − | There were a few | + | There were only a few challenges faced with the Bridge Controller. |

The largest problem was sending data to the app in a timely manner. Converting all of the messages to strings to send over Bluetooth was found to be too slow and would result in messages running into one another. Even with the UART baud rate increased to 115,200, the maximum speed for the HC-05, the messages would blur together at times. The easiest fix was to populate only some of the messages in a given 10Hz callback. Only two of the boards, motor and driver, could be grouped together in one send. The other controllers' messages were each sent in their own time slot. | The largest problem was sending data to the app in a timely manner. Converting all of the messages to strings to send over Bluetooth was found to be too slow and would result in messages running into one another. Even with the UART baud rate increased to 115,200, the maximum speed for the HC-05, the messages would blur together at times. The easiest fix was to populate only some of the messages in a given 10Hz callback. Only two of the boards, motor and driver, could be grouped together in one send. The other controllers' messages were each sent in their own time slot. | ||

| − | + | The other difficult part was working in tandem with the Sensor Controller code and ensuring both of us were using the correct branch and the most up-to-date code. | |

| − | |||

| − | |||

<HR> | <HR> | ||

| Line 892: | Line 1,178: | ||

== Master Module == | == Master Module == | ||

| − | + | https://gitlab.com/infallibleprogrammer/utah/-/tree/master/projects/Master_Controller | |

=== Hardware Design === | === Hardware Design === | ||

| + | The Master node is primarily responsible for issuing commands to the Motor node to go towards target waypoints. It takes information from both the Geo and Sensor nodes and performs its own calculations based on that information to make the proper turns and speed changes. It is also responsible for outputting relevant data such as distance to target, current heading, and speed to the connected RPIgear LCD screen for easy viewing of the data while the car is in motion. | ||

[[File:Master controller.png|750px|center|thumb|Master controller Hardware Design]] | [[File:Master controller.png|750px|center|thumb|Master controller Hardware Design]] | ||

| + | |||

| + | {| style="margin-left: auto; margin-right: auto; border: none;" | ||

| + | |- | ||

| + | | | ||

| + | {| class="wikitable" | ||

| + | |+ Table 6. Driver Node Pinout: CAN Transceiver | ||

| + | |- | ||

| + | ! SJTwo Board | ||

| + | ! CAN Board | ||

| + | |- | ||

| + | | P0.0 | ||

| + | | CAN RX | ||

| + | |- | ||

| + | | P0.1 | ||

| + | | CAN TX | ||

| + | |- | ||

| + | | Vin | ||

| + | | 3.3 | ||

| + | |- | ||

| + | | GND | ||

| + | | GND | ||

| + | |} | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | {| class="wikitable" | ||

| + | |+ Table 7. Driver Node Pinout: RPIgear LCD Screen | ||

| + | |- | ||

| + | ! SJTwo Board | ||

| + | ! LCD Screen | ||

| + | |- | ||

| + | | P4.28 | ||

| + | | UART - RX | ||

| + | |- | ||

| + | | P4.29 | ||

| + | | UART - TX | ||

| + | |- | ||

| + | | Vin | ||

| + | | 3.3 | ||

| + | |- | ||

| + | | GND | ||

| + | | GND | ||

| + | |} | ||

| + | |} | ||

=== Software Design === | === Software Design === | ||

| − | [[File:Driver_logic_UTAH.jpg|center]] | + | [[File:Driver_logic_UTAH.jpg|center|thumb|Master controller Code Logic]] |

| + | |||

| + | The driver begins navigating once the Geo controller locks onto a waypoint to move towards. At any other time it remains still, awaiting commands from the mobile app. Once it is sent a waypoint, it goes through the navigation process shown below. | ||

| + | |||

| + | <h3>Navigation code: </h3> | ||

| + | void driver__process_geo_controller_directions(dbc_COMPASS_HEADING_DISTANCE_s *geo_heading) { | ||

| + | received_heading.CURRENT_HEADING = geo_heading->CURRENT_HEADING; | ||

| + | received_heading.DESTINATION_HEADING = geo_heading->DESTINATION_HEADING; | ||

| + | received_heading.DISTANCE = geo_heading->DISTANCE; | ||

| + | } | ||

| + | dbc_MOTOR_CHANGE_SPEED_AND_ANGLE_MSG_s driver__get_motor_commands(void) { | ||

| + | bool is_object_on_right = false; | ||

| + | if (check_whether_obstacle_detected()) { | ||

| + | if (internal_sensor_data.SENSOR_SONARS_left <= distance_for_object && | ||

| + | internal_sensor_data.SENSOR_SONARS_middle <= distance_for_object && | ||

| + | internal_sensor_data.SENSOR_SONARS_right <= distance_for_object) { | ||

| + | reverse_car_and_turn(); | ||

| + | } else if ((internal_sensor_data.SENSOR_SONARS_left <= distance_for_object && | ||

| + | internal_sensor_data.SENSOR_SONARS_middle <= distance_for_object) || | ||

| + | internal_sensor_data.SENSOR_SONARS_left <= distance_for_object) { | ||

| + | is_object_on_right = false; | ||

| + | change_angle_of_car(is_object_on_right); | ||

| + | } else if ((internal_sensor_data.SENSOR_SONARS_right <= distance_for_object && | ||

| + | internal_sensor_data.SENSOR_SONARS_middle <= distance_for_object) || | ||

| + | internal_sensor_data.SENSOR_SONARS_right <= distance_for_object) { | ||

| + | is_object_on_right = true; | ||

| + | change_angle_of_car(is_object_on_right); | ||

| + | } else if (internal_sensor_data.SENSOR_SONARS_rear <= distance_for_object) { | ||

| + | is_object_on_right = | ||

| + | (internal_sensor_data.SENSOR_SONARS_right < internal_sensor_data.SENSOR_SONARS_left) ? true : false; | ||

| + | change_angle_of_car(is_object_on_right); | ||

| + | forward_drive(); | ||

| + | } else if (internal_sensor_data.SENSOR_SONARS_middle <= distance_for_object) { | ||

| + | is_object_on_right = | ||

| + | (internal_sensor_data.SENSOR_SONARS_right < internal_sensor_data.SENSOR_SONARS_left) ? true : false; | ||

| + | // change_angle_of_car(is_object_on_right); | ||

| + | // forward_drive(); | ||

| + | reverse_car_and_turn(); | ||

| + | } else { | ||

| + | // do nothing, should not reach this point, object is detected case should be handled | ||

| + | } | ||

| + | } else { | ||

| + | // Drive towards what geo points me to | ||

| + | turn_towards_destination(); | ||

| + | set_ideal_forward(); | ||

| + | } | ||

| + | return internal_motor_data; | ||

| + | } | ||

| + | |||

| + | In this code block, sensor information is processed first, in the initial if statement. Each of the 4 sensors is evaluated to see which combination has been tripped. Depending on the combination, the car either turns left or right, if that side is free, or reverses and backs away. If no sensor reports an obstacle, turn_towards_destination() is run to turn the car toward the next checkpoint. | ||

| + | |||

| + | <h3>Calculate Turning angle </h3> | ||

| + | static void turn_towards_destination(void) { | ||

| + | turn_direction = right; | ||

| + | heading_difference = received_heading.DESTINATION_HEADING - received_heading.CURRENT_HEADING; | ||

| + | if (heading_difference > 180) { | ||

| + | turn_direction = left; | ||

| + | heading_difference = 360 - heading_difference; | ||

| + | } else if (heading_difference < 0 && heading_difference > -180) { | ||

| + | turn_direction = left; | ||

| + | heading_difference = fabs(heading_difference); | ||

| + | } else if (heading_difference < -180) { | ||

| + | turn_direction = right; | ||

| + | heading_difference = fabs(360 + heading_difference); | ||

| + | } else if (heading_difference > 0 && heading_difference <= 180) { | ||

| + | turn_direction = right; | ||

| + | } | ||

| + | tell_motors_to_move(turn_direction, heading_difference, received_heading.DISTANCE); | ||

| + | } | ||

| + | This function subtracts the current heading from the destination heading. The difference is then folded into the 0 to 180 degree range to get the magnitude of the turn required. The direction is determined by the sign of the difference and by whether its magnitude exceeds 180 degrees, so that the car always takes the shorter way around. Both values are then sent to the tell_motors_to_move() function. | ||
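The same wrap-around logic can be written more compactly by first normalizing the signed difference into the -180 to 180 degree range. The sketch below is an illustrative alternative, not the project's code: the type and function names are hypothetical, and it assumes both headings are already in the 0 to 360 degree range.

```c
typedef enum { turn_left, turn_right } turn_direction_e;

typedef struct {
  turn_direction_e direction;
  float magnitude_degrees; // always between 0 and 180
} turn_command_s;

// Compute the shortest turn from current_heading to destination_heading.
static turn_command_s compute_turn(float current_heading, float destination_heading) {
  float difference = destination_heading - current_heading; // in (-360, 360)
  if (difference > 180.0f) {
    difference -= 360.0f; // shorter to go the other way around
  } else if (difference < -180.0f) {
    difference += 360.0f;
  }
  turn_command_s command;
  command.direction = (difference < 0.0f) ? turn_left : turn_right;
  command.magnitude_degrees = (difference < 0.0f) ? -difference : difference;
  return command;
}
```

For example, with a current heading of 350 and a destination heading of 10, the raw difference of -340 becomes +20, so the car turns right by 20 degrees instead of left by 340.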

| − | < | + | <h3>Tell Motors To Move </h3> |

| + | static void tell_motors_to_move(direction_t direction_to_turn, float turn_magnitude, float distance_magnitude) { | ||

| + | if (distance_magnitude > 10) { | ||

| + | if (turn_magnitude > max_angle_threshold) { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig = max_angle_threshold / 2; | ||

| + | } else if (turn_magnitude > mid_angle_threshold) { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig = mid_angle_threshold / 2; | ||

| + | } | ||

| + | if (turn_magnitude > mid_angle_threshold) { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig = mid_angle_threshold; | ||

| + | } else if (turn_magnitude > 5) { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig = 0; | ||

| + | } | ||

| + | } else { | ||

| + | if (internal_motor_data.SERVO_STEER_ANGLE_sig > 20) { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig = 20; | ||

| + | } | ||

| + | else { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig = turn_magnitude; | ||

| + | } | ||

| + | } | ||

| + | if (direction_to_turn == left) { | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig *= -1; | ||

| + | internal_motor_data.SERVO_STEER_ANGLE_sig -= 5; | ||

| + | } | ||

| + | /** | ||

| + | * The idea with this is to go slower on sharp turns. Going faster on straight aways | ||

| + | * is fine too. | ||

| + | */ | ||

| + | if (abs(internal_motor_data.SERVO_STEER_ANGLE_sig) <= min_angle_threshold) { | ||

| + | internal_motor_data.DC_MOTOR_DRIVE_SPEED_sig = go_fast; | ||

| + | } else if (abs(internal_motor_data.SERVO_STEER_ANGLE_sig) <= mid_angle_threshold) { | ||

| + | internal_motor_data.DC_MOTOR_DRIVE_SPEED_sig = go_medium; | ||

| + | } else if (abs(internal_motor_data.SERVO_STEER_ANGLE_sig) <= max_angle_threshold) { | ||

| + | internal_motor_data.DC_MOTOR_DRIVE_SPEED_sig = go_slow; | ||

| + | } | ||

| + | if (distance_magnitude < 10) { | ||

| + | internal_motor_data.DC_MOTOR_DRIVE_SPEED_sig = go_slow; | ||

| + | } | ||

| + | } | ||

| + | |||

| + | There were a few peculiarities that we ran into when testing the driver code. The first was the reliability of the compass. We found the compass to be quite noisy and not as reliable as first anticipated. Our fix was to steer by only half of the calculated angle, and only at discrete angles, as shown above. This was one of the largest contributing factors to the smoothness of the car's driving. The variables were all set at the top of the file, which made them easy to change for testing purposes. | ||

=== Technical Challenges === | === Technical Challenges === | ||

| − | + | The biggest challenge faced with the Master Controller was the reliability of the incoming data. We incorrectly assumed that the data would be nearly perfect and that decisions could be made quickly based on it. Once we began to filter most of the data and stopped the Master from overcompensating, the driving became much smoother. | |
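The text does not say which filter was used. As one common option, a fixed-window moving average can be sketched as follows; the type `moving_average_s`, the function names, and the window size `FILTER_WINDOW` are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define FILTER_WINDOW 4 /* hypothetical window size */

typedef struct {
  int samples[FILTER_WINDOW]; /* circular buffer of recent samples */
  size_t count;               /* number of valid samples, up to FILTER_WINDOW */
  size_t next;                /* index where the next sample is written */
  long sum;                   /* running sum of the valid samples */
} moving_average_s;

void moving_average__init(moving_average_s *filter) {
  assert(filter != NULL);
  for (size_t i = 0; i < FILTER_WINDOW; i++) {
    filter->samples[i] = 0;
  }
  filter->count = 0;
  filter->next = 0;
  filter->sum = 0;
}

/* Add one sample and return the average of the samples in the window. */
int moving_average__update(moving_average_s *filter, int sample) {
  assert(filter != NULL);
  if (filter->count == FILTER_WINDOW) {
    /* Window is full: drop the oldest sample from the running sum. */
    filter->sum -= filter->samples[filter->next];
  } else {
    filter->count++;
  }
  filter->samples[filter->next] = sample;
  filter->sum += sample;
  filter->next = (filter->next + 1) % FILTER_WINDOW;
  return (int)(filter->sum / (long)filter->count);
}
```

A plain average like this suits distance readings. It does not handle the 0/360 degree wraparound of a compass heading, so headings would need to be unwrapped or averaged as vectors first.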

<HR> | <HR> | ||

<BR/> | <BR/> | ||

| + | |||

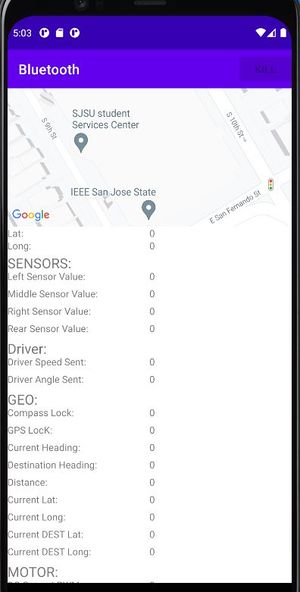

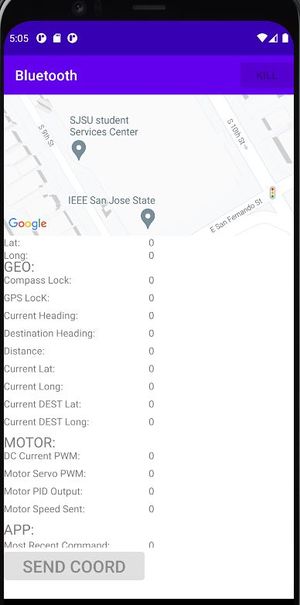

== Mobile Application == | == Mobile Application == | ||

| − | + | https://gitlab.com/jbeard79/UTAH_APP/-/tree/user/jbeard79/%231/SensorReading | |

| + | [[File: UTAH_App1.JPG|300px]] | ||

| + | [[File: UTAH_App2.jpg|300px]] | ||

| + | [[File:UTAH AppConnectBT.jpg|300px]] | ||

=== Hardware Design === | === Hardware Design === | ||

| + | The phone used was an older LG Tracfone running Android OS. | ||

=== Software Design === | === Software Design === | ||

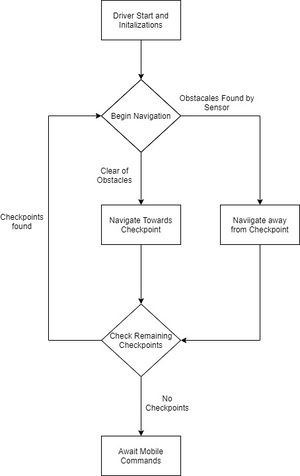

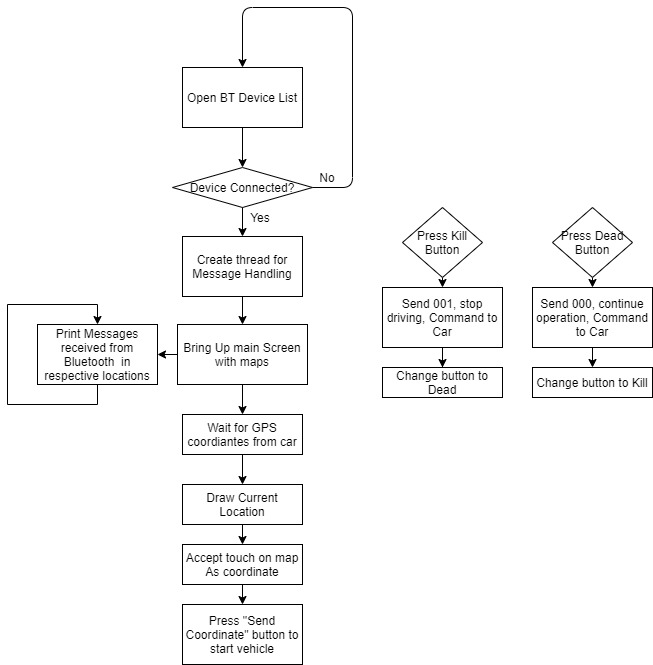

| − | + | [[File: UTAH_AppSoftwareFlow.jpg]] | |

| + | |||

| + | When the app first opens, it checks whether the Bluetooth socket is NULL, which it should be at startup. If it is, the Bluetooth connection screen (the far right picture above) is brought up, and the user taps a device to attempt a connection. If the connection fails, the connection screen is brought up again. Once a connection is established with the Bridge Controller, the main screen (the far left picture) is brought up. | ||

| + | The first thing that can be seen is the map. A few precautions were taken so that nothing happens to the map's camera if the user taps the map while the GPS is not sending accurate data. Once good data is being received, a blue pin represents the car's current location. Whenever this data is updated, the pin's location is refreshed and redrawn on the map. The 10th street garage is used as a default destination for ease of testing and to accommodate camera repositioning. The camera is repositioned to the average of the destination and the current location. | ||

| + | Once the desired destination is selected, its latitude and longitude are displayed under the map. The car can be started by pressing the Send Coordinates button at the bottom of the scroll window. This sends the coordinates, truncated from a double to a float, onto the CAN bus. | ||
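To get a feel for what narrowing a coordinate from a double to a float costs, the error can be measured directly. The helper below is a hypothetical illustration, not part of the project code, and it assumes IEEE 754 single precision with the default round-to-nearest conversion. Under that assumption a float has a 24-bit significand, so for magnitudes between 64 and 128 degrees the error is at most 2^-18 degrees, which is about 0.4 m at roughly 111 km per degree of latitude.

```c
#include <assert.h>

/*
 * Return the absolute error, in degrees, introduced by storing a
 * coordinate in a 32-bit float instead of a 64-bit double.
 */
double coordinate_narrowing_error_deg(double coordinate_deg) {
  /* Sanity check on the input: coordinates lie within +/-180 degrees. */
  assert(coordinate_deg >= -180.0 && coordinate_deg <= 180.0);

  const float narrowed = (float)coordinate_deg;
  const double diff = coordinate_deg - (double)narrowed;
  return (diff < 0.0) ? -diff : diff;
}
```

For example, calling this with a longitude such as -121.8811 or a latitude such as 37.3352 (sample values chosen for illustration) shows whether the loss is acceptable for the waypoint spacing in use.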

| + | |||

| + | For the messaging system, the CAN bus IDs were used along with a sub number that tells which part of the message is being sent: XXXY_____, where XXX is the CAN message ID, Y is which portion of the message it is, and the underscores are the message data. The messages all corresponded to TextViews, and a data structure was made to hold up to 4 views. All of these blocks of views were put into an array for ease of operation. A simple for loop then checks the ID of the incoming message against the IDs in the array, and the sub ID identifies which view to update. | ||
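The XXXY_____ layout can be illustrated with a small parser. This sketch is written in C to match the firmware examples, while the app itself handles this on the Android side. It assumes XXX is three decimal digits and Y is a single decimal digit, which the text does not state; the type `app_message_s` and the function `app_message__parse` are hypothetical names.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef struct {
  int can_id;          /* XXX: the CAN message ID (assumed decimal) */
  int sub_id;          /* Y: which portion of the message this is */
  const char *payload; /* points at the data that follows XXXY */
} app_message_s;

/*
 * Split a text message of the form XXXY<data> into its fields.
 * Returns false if the four-character header is missing or not numeric.
 */
bool app_message__parse(const char *text, app_message_s *out) {
  assert(text != NULL && out != NULL);
  if (strlen(text) < 4) {
    return false;
  }
  for (size_t i = 0; i < 4; i++) {
    if (!isdigit((unsigned char)text[i])) {
      return false;
    }
  }
  out->can_id = (text[0] - '0') * 100 + (text[1] - '0') * 10 + (text[2] - '0');
  out->sub_id = text[3] - '0';
  out->payload = text + 4; /* no copy: payload aliases the input string */
  return true;
}
```

After parsing, the receiver can loop over its table of known IDs, compare each against `can_id`, and use `sub_id` to pick the field or view that `payload` belongs to.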

| + | |||

| + | The last key piece of the app is the kill button. When pressed, the app sends a 1 to the Bridge Controller, which relays it onto the bus. The motor's logic has a built-in check that stops operation when the command is a 1. | ||

=== Technical Challenges === | === Technical Challenges === | ||

| − | + | Connecting to Google Maps was much easier than anticipated. The tutorial at https://developers.google.com/maps/documentation/android-sdk/start is a great way to add the map portion. | |

| + | |||

| + | Thinking about message format and structure early was key. Setting up with good modular design saved a lot of time after it was implemented and made the code much easier to read. | ||

| + | One other thing to note when using Google Maps: an account must be set up, and a key is used for the app to connect. That key is located in the googlemapsapi file, which is ignored by git by default when using Android Studio. Double-check that your key is not included in your pushes so that it is not leaked. | ||

<BR/> | <BR/> | ||

| − | + | ||

| − | |||

| − | |||

== Conclusion == | == Conclusion == | ||

| − | |||

| − | + | This is a challenging project on its own, and despite the additional difficulties that came with remote learning, we were able to finish many of our objectives and learned many lessons along the way. The project spans multiple disciplines, including mechanical engineering, electrical engineering, computer science, and even project management, in some of which none of us had prior experience. During our time working on this project we furthered our understanding of embedded systems and good software design practices, such as test driven development, while learning new material as well. We interfaced with ultrasonic sensors and did signal processing on the data, interfaced with a GPS module, determined heading from a magnetometer with tilt compensation from an accelerometer, wrote firmware for a Bluetooth module to communicate with an Android application that we made, interfaced with electric motors using PWM and created a PID to control speed, and designed a PCB. We also took a deep dive into the CAN protocol, learning its intricacies, designing our own CAN messages in a DBC file, and writing firmware to have our controllers communicate. Many of these tasks required research to understand the concepts before we could implement anything. | |

| + | |||

| + | All these tasks were made more challenging by remote learning, as some of us were not always close enough to campus to troubleshoot problems quickly with access to the labs. Some of us were working full-time jobs while taking the course, which made time management hard and led to exhaustion. We had moments of frustration when integration testing threw roadblocks our way, but we managed to persevere and get a functional demo by the end of the class. | ||

| + | |||

| + | Looking back, we could probably have created a better plan earlier on, defining milestones for the different nodes and, if they were not reached, shifting our attention to getting them done so the overall project could progress. We overlooked many of the mechanical aspects of the RC car and how they would impact the compass and accelerometer. Improving our mounting and spending more time refining how we determine heading would likely have helped a lot. Refining the driver logic earlier to deal with some variability in steering, which we did not notice until integration testing in the final weeks, could also have improved the car's performance in the final demo. These are learning points that we will keep in mind for future projects and add to the takeaways from the course. | ||

| + | |||

| + | |||

| + | |||

=== Project Video === | === Project Video === | ||

| + | [https://www.youtube.com/watch?v=iyOFE1iFbg4 Youtube] | ||

| + | [https://www.youtube.com/watch?v=ZJXLpv5U6vM Youtube link for test drive] | ||

=== Project Source Code === | === Project Source Code === | ||

| + | https://gitlab.com/infallibleprogrammer/utah - Boards | ||

| + | |||

| + | https://gitlab.com/jbeard79/UTAH_APP - Application | ||

=== Advice for Future Students === | === Advice for Future Students === | ||

| − | < | + | 1. Order the parts as soon as possible and determine which pins/ports are needed so that design and ordering of the PCB can begin. <br/> |

| + | 2. Select good-quality components rather than cheap ones. Typically, the cheaper the component, the more you'll have to compensate for it. <br/> | ||

| + | 3. Always have extra components. You never know which component will stop working. Having extra components saves a lot of time.<br/> | ||

| + | 4. Use CAN transceivers from the same manufacturer for all nodes.<br/> | ||

| + | 5. Try to use one power source instead of two for the entire project. In this way, you can reduce the overall weight of your RC car.<br/> | ||

| + | 6. Select your Power Regulator IC wisely. You need to have sufficient current to drive all the controllers and the components.<br/> | ||

| + | 7. Don't underestimate the magnitude of mechanical design and work that is required to get things working smoothly.<br/> | ||

| + | 8. Buy a physical compass to use while testing to ensure you're seeing the correct headings.<br/> | ||

=== Acknowledgement === | === Acknowledgement === | ||

| + | We would like to thank Preet and Vidushi for making the best out of the bad situation of remote learning. | ||

| + | |||

| + | We would also like to thank David Brassfield, for assisting with 3D printing and design. | ||

=== References === | === References === | ||

| + | |||

| + | '''Motor Controller''' | ||

| + | |||

| + | * [https://traxxas.com/support/Programming-Your-Traxxas-Electronic-Speed-Control ESC] | ||

Latest revision as of 17:36, 5 April 2022

Contents

- 1 UTAH: Unit Tested to Avoid Hazards

- 2 Abstract

- 3 Objectives & Introduction

- 4 Schedule

- 5 Parts List & Cost

- 6 Printed Circuit Board

- 7 Fabrication

- 8 Power Management

- 9 Challenges

- 10 CAN Communication

- 11 Sensor ECU

- 12 Motor ECU

- 13 Geographical Controller

- 14 Communication Bridge Controller & LCD

- 15 Master Module

- 16 Mobile Application

- 17 Conclusion

UTAH: Unit Tested to Avoid Hazards

Abstract

The UTAH (Unit Tested to Avoid Hazards) project is a path-finding and obstacle-avoiding RC car. UTAH can interface with an Android application to get new coordinates to travel to, and will travel there while avoiding obstacles visible to its ultrasonic sensors.

Objectives & Introduction

Objectives

- RC car can communicate with an Android application to:

- Receive new coordinates to travel to

- Send diagnostic information to the application

- Emergency stop and start driving

- RC car can travel to received coordinates in an efficient path while avoiding obstacles

- RC car can maintain speed when driving on sloped ground

- Design printed circuit board (PCB) to neatly connect all SJ2 boards

- Design and 3D print sensor mounts for the ultrasonic sensors

- Design a simple and intuitive user interface for the Android application

- Design a DBC file

Introduction

The UTAH RC car uses 4 SJ2 boards as nodes on the CAN bus:

- Driver and LCD

- GEO and path finding

- Sensors and bridge app

- Motor

Team Members & Responsibilities

- Akash Vachhani Gitlab

- Leader

- Geographical Controller

- Master Controller

- Jonathan Beard Gitlab

- Android Application Developer

- Communication Bridge Controller

- Jonathan Tran Gitlab

- Sensors Controller

- Shreevats Gadhikar Gitlab

- Motor Controller

- Hardware Integration

- PCB Designing

- Amritpal Sidhu

- Motor Controller

Schedule

| Description | Color |

|---|---|

| Administrative | Black |

| Sensor | Cyan |

| Bluetooth & App | Blue |

| GEO | Red |

| Motor | Magenta |

| Main | Orange |

| Week# | Start Date | End Date | Task | Status |

|---|---|---|---|---|

| 1 |

02/15/2021

|

02/21/2021

|

|

|

| 2 |

02/22/2021

|

02/28/2021

|

|

|

| 3 |

03/01/2021

|

03/07/2021

|

|

|

| 4 |

03/08/2021

|

03/14/2021

|

|

|

| 5 |

03/15/2021

|

03/21/2021

|

|

|

| 6 |

03/22/2021

|

03/28/2021

|

|

|

| 7 |

03/29/2021

|

04/04/2021

|

|

|

| 8 |

04/05/2021

|

04/11/2021

|

|

|

| 9 |

04/12/2021

|

04/18/2021

|

|

|

| 10 |

04/19/2021

|

04/25/2021

|

|